PyTorch Print Device Name

This tutorial shows how to get the available GPU devices using PyTorch. `torch.cuda.get_device_name(device=None)` gets the name of a device. To list every GPU, iterate over the range of available devices with `for i in range(torch.cuda.device_count()):` and collect the devices into a list.

In case your model is stored on just one GPU, you could simply print the device of one parameter. For a model such as `model = nn.Sequential(nn.Linear(1, 1))`, running `device = next(model.parameters()).device` followed by `print(device)` will print either `cpu` or `cuda:0`, depending on where the model was placed.

Two related questions come up often. The first: "I have three GPUs and have been trying to set `CUDA_VISIBLE_DEVICES` in my environment, but am confused by the difference." The second: "I want to print the model's parameters with their names. I found two ways to print a summary, but I want to use both `requires_grad` and the name."
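The device loop described above can be sketched as follows. This is a minimal example, not the original tutorial's code: the helper name `list_gpu_names` is hypothetical, and it relies only on `torch.cuda.device_count()` and `torch.cuda.get_device_name()`.

```python
import torch


def list_gpu_names():
    """Return the name of every CUDA device PyTorch can see.

    `list_gpu_names` is a hypothetical helper for illustration.
    The list is empty when no CUDA device is visible.
    """
    names = []
    for device_index in range(torch.cuda.device_count()):
        # get_device_name accepts an integer device index.
        names.append(torch.cuda.get_device_name(device_index))
    return names


gpu_names = list_gpu_names()
if not gpu_names:
    print("No CUDA devices visible; tensors will stay on the CPU.")
for index, name in enumerate(gpu_names):
    print(f"cuda:{index} -> {name}")
```

If `CUDA_VISIBLE_DEVICES` is set before the process starts, only the listed GPUs are counted, and PyTorch renumbers them starting from 0, so `cuda:0` here is the first visible device rather than necessarily the first physical one.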

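One possible way to print each parameter's name together with `requires_grad` and its device is to loop over `named_parameters()`. The sketch below assumes a small `nn.Sequential` model like the one mentioned in the text; `describe_parameters` is a hypothetical helper name, not part of PyTorch.

```python
import torch
import torch.nn as nn

model = nn.Sequential(nn.Linear(1, 1))

# Device of the first parameter. This is representative of the whole
# model only if every parameter lives on the same device.
device = next(model.parameters()).device
print(device)


def describe_parameters(module):
    """Hypothetical helper: one (name, shape, requires_grad, device) row per parameter."""
    return [
        (name, tuple(param.shape), param.requires_grad, str(param.device))
        for name, param in module.named_parameters()
    ]


for name, shape, requires_grad, param_device in describe_parameters(model):
    print(f"{name}: shape={shape}, requires_grad={requires_grad}, device={param_device}")
```

Reporting the device per parameter, rather than once per model, also covers models whose layers are split across several GPUs.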
from zhuanlan.zhihu.com

This tutorial shows how to get available gpu devices using pytorch. Import torch.nn as nn model = nn.sequential( nn.linear(1, 1) ) device = next(model.parameters()).device print(device) the above code will print either cpu or cuda:0. Import torch for i in range(torch.cuda.device_count()): Get_device_name (device = none) [source] ¶ get the name of a device. But i want to use both requires_grad and name. I have three gpu’s and have been trying to set cuda_visible_devices in my environment, but am confused by the difference. I want to print model’s parameters with its name. I found two ways to print summary. In case your model is stored on just one gpu, you could simply print the device of one parameter, e.g.:. In the following code, we iterate over the range of available gpu devices and create a list of them.

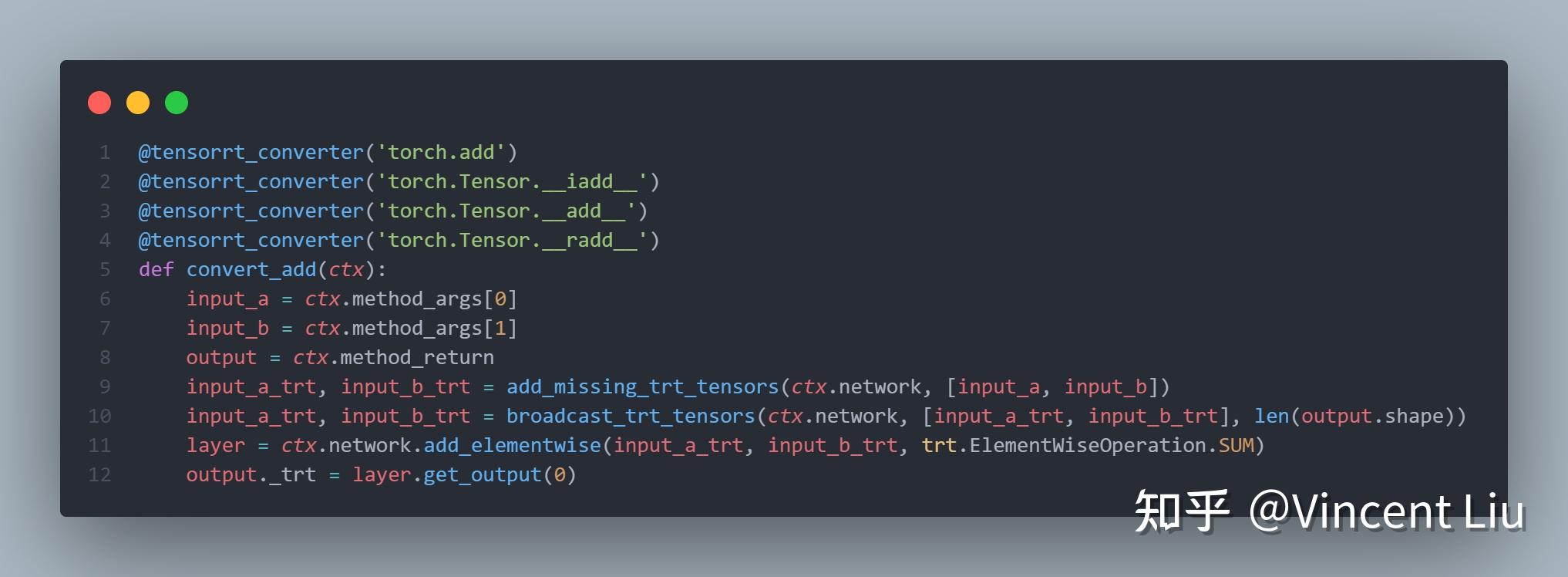

Pytorch 模型转 TensorRT (torch2trt 教程) 知乎

Pytorch Print Device Name Num_devices = torch.cuda.device_count() for device_index in range (num_devices):. I want to print model’s parameters with its name. In case your model is stored on just one gpu, you could simply print the device of one parameter, e.g.:. Num_devices = torch.cuda.device_count() for device_index in range (num_devices):. Get_device_name (device = none) [source] ¶ get the name of a device. Import torch.nn as nn model = nn.sequential( nn.linear(1, 1) ) device = next(model.parameters()).device print(device) the above code will print either cpu or cuda:0. But i want to use both requires_grad and name. Import torch for i in range(torch.cuda.device_count()): I found two ways to print summary. In the following code, we iterate over the range of available gpu devices and create a list of them. I have three gpu’s and have been trying to set cuda_visible_devices in my environment, but am confused by the difference. This tutorial shows how to get available gpu devices using pytorch.
