PyTorch Missing Keys

A "Missing key(s) in state_dict" error typically means the saved state_dict does not contain all the layers that are in the model. One poster's initial error was having only one layer inside an LSTM; after implementing two layers (nlayers=2) by instantiating a new RNN object, they encountered another problem. A common follow-up question is how to ignore the missing key(s) in state_dict.

The underlying issue is that the keys in a state_dict are fully qualified: if you look at your network as a tree of nested modules, each key spells out the dotted path from the root module to a parameter. In one reported case, the only difference between the missing keys and the unexpected keys was that the missing keys had an extra '0' in them. When a prefix is the only mismatch, you can create a new dictionary whose keys omit it (for example, keys without the 'att.' prefix) and load the new dictionary into your model.

Another report, in which an expected message is missing, starts from a model saved with import torch; import torchvision.models as models; alexnet = models.alexnet(); torch.save(alexnet.state_dict(), './alexnet.pth').

Exercise caution with missing keys when loading with strict=False: parameters whose keys are missing keep their initial values. Consider using tools like torchsummary to inspect the model architecture.
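As a minimal sketch of the two ideas above (renaming fully qualified keys, then loading with strict=False and checking what was skipped), the following may help. The `Net` class, the `strip_prefix` helper, the hand-built `checkpoint` dictionary, and the `module.` prefix are all hypothetical and chosen only for illustration; they are not taken from any of the threads listed here.

```python
from collections import OrderedDict

import torch
import torch.nn as nn


def strip_prefix(state_dict, prefix):
    """Return a new OrderedDict with `prefix` removed from keys that start with it."""
    return OrderedDict(
        (key[len(prefix):] if key.startswith(prefix) else key, value)
        for key, value in state_dict.items()
    )


class Net(nn.Module):
    """Hypothetical target model with one layer the checkpoint does not cover."""

    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(4, 3)
        self.head = nn.Linear(3, 2)  # no matching keys in the checkpoint below


# Hypothetical checkpoint whose keys carry an extra "module." prefix.
checkpoint = OrderedDict(
    [
        ("module.fc.weight", torch.zeros(3, 4)),
        ("module.fc.bias", torch.zeros(3)),
    ]
)

model = Net()
renamed = strip_prefix(checkpoint, "module.")

# strict=False skips mismatched keys instead of raising, and reports them.
result = model.load_state_dict(renamed, strict=False)
print("missing keys:", result.missing_keys)
print("unexpected keys:", result.unexpected_keys)
```

Inspecting `result.missing_keys` and `result.unexpected_keys` after a non-strict load is one way to confirm that only the layers you intended to skip were skipped; any layer listed as missing keeps its freshly initialized weights.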

From blog.csdn.net

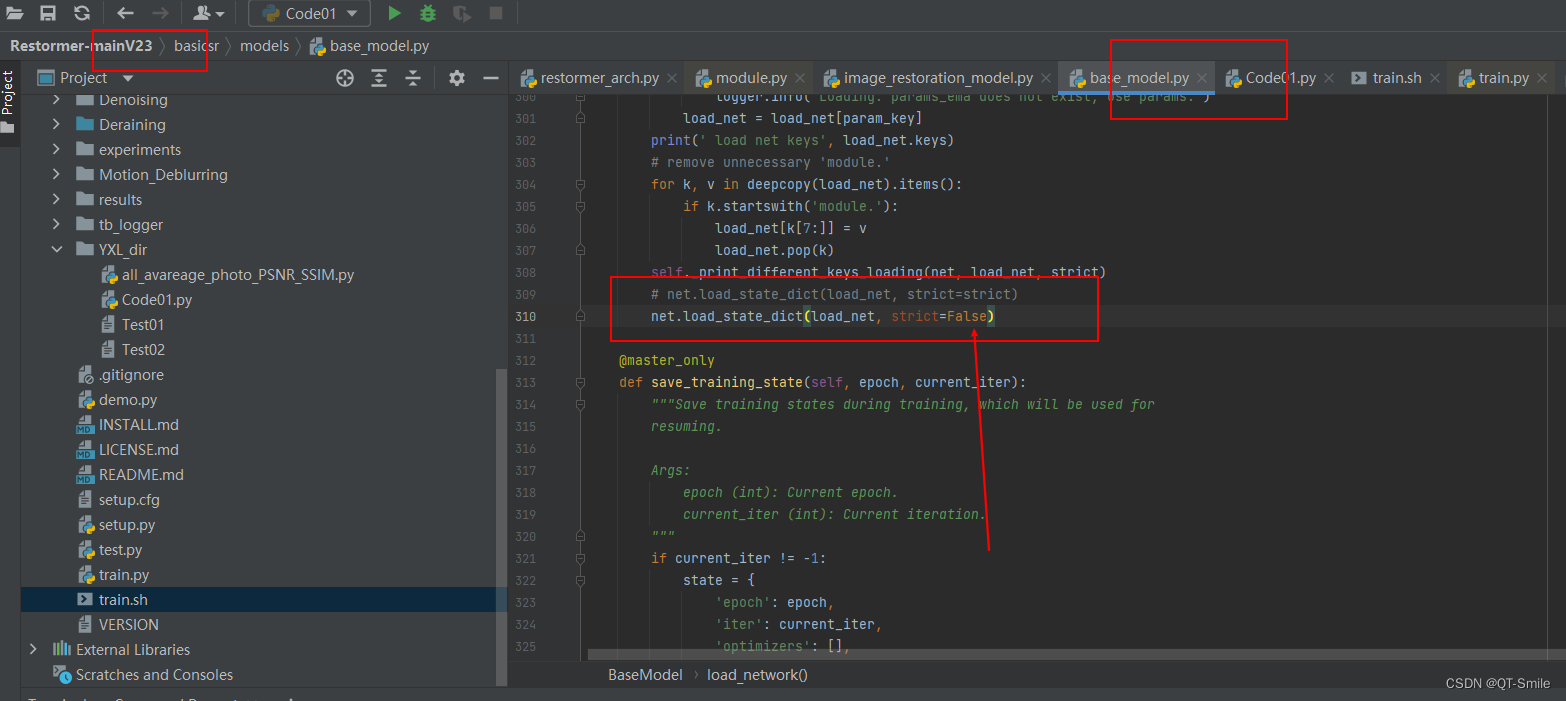

Missing key(s) in state_dict: "Conv_1x1.weight"

From github.com

Error while loading model file .ckpt file Missing key(s) in state

From github.com

Missing key(s) in state_dict & Unexpected key(s) in state_dict · Issue

From github.com

NotImplementedError Module is missing the required "forward" function

From github.com

"Features_only" key missing for ViTModels? · Issue 397

From github.com

Missing keys when loading pretrained weights · Issue 278 · lukemelas

From discuss.pytorch.org

Missing key(s) in state_dict PyTorch Forums

From artiper.tistory.com

[Pytorch] Model save error

From github.com

All keys matched successfully missing when loading state dict on

From discuss.pytorch.org

Forward() missing 2 required positional arguments 'input' and 'target

From blog.csdn.net

Missing key(s) in state_dict: "Conv_1x1.weight"

From github.com

Missing key(s) in state_dict · Issue 75 · layumi/Person_reID_baseline

From blog.csdn.net

RuntimeError Error(s) in loading state_dict for Missing key(s

From www.youtube.com

Handling Missing Keys in Dictionary in Python YouTube

From discuss.pytorch.org

Missing keys & unexpected keys in state_dict when loading pretrained

From github.com

missing keys for v3large? · Issue 11 ·

From github.com

[Feature Request] ModuleDict nonstring keys · Issue 11714 · pytorch

From zerotomastery.io

PyTorch Cheat Sheet + PDF Zero To Mastery

From blog.csdn.net

PyTorch study 002 debug (load_state_dict() missing 1 required positional

From blog.csdn.net

Missing key(s) in state_dict unexpected_keys: model loading [torch.load] error

From blog.csdn.net

PyTorch model loading: model.load_state_dict raises RuntimeError('Error(s) in loading

From github.com

BEVFusion Wrong pytorch model/keys missing or misaligned while running

From github.com

Error(s) in loading state_dict for Net Missing key(s) in state_dict

From github.com

"TypeError cannot pickle 'dict_keys' object" when GPU DDP training

From blog.csdn.net

RuntimeError Error(s) in loading state_dic ,Missing key(s) in state

From github.com

Keys of a `ModuleDict` cannot have the same name as existing

From discuss.pytorch.org

Missing key(s) in state_dict PyTorch Forums

From discuss.pytorch.org

Missing key(s) in state_dict PyTorch Forums

From discuss.pytorch.org

Missing key(s) in state_dict PyTorch Forums

From blog.csdn.net

PyTorch model saving and loading summary_jupyter torch save CSDN blog

From blog.csdn.net

Error(s) PyTorch: loading checkpoint state_dict fails with Missing key(s) && Unexpected

From stackoverflow.com

deep learning Pytorch RuntimeError Error(s) in loading state_dict

From blog.csdn.net

PyTorch errors and solutions_optimizer got an empty parameter list CSDN blog

From github.com

[BUG] Misleading error message in `advantage_module.set_keys()` · Issue

From discuss.pytorch.org

RuntimeError Error(s) in loading state_dict for Missing key(s