torch.nn.init.kaiming_normal_

The PyTorch nn.init module is the conventional way to initialize weights in a neural network; it initializes parameters with various distributions and methods. nn.init.kaiming_normal_() initializes tensor weights from a normal distribution and appears in many codebases for layer initialization (the R torch package exposes the same function as nn_init_kaiming_normal_). The C++ signature begins kaiming_normal_(Tensor tensor, double a = 0, FanModeType mode = torch::…). Xavier initialization, by comparison, sets weights to random values sampled from a normal distribution with a mean of 0 and a standard deviation derived from the layer's fan-in and fan-out. When using kaiming_normal or kaiming_normal_ for initialization, nonlinearity='linear' should be used instead of nonlinearity='selu' when the goal is a self-normalizing network.
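To make the scaling behind Kaiming-normal initialization concrete, here is a minimal standard-library sketch of the rule std = gain / sqrt(fan). The helper names (leaky_relu_gain, kaiming_normal_std, kaiming_normal_matrix) and the GAINS table are hypothetical and exist only for this illustration; they are not part of PyTorch. The gain values are the ones the PyTorch documentation lists for torch.nn.init.calculate_gain, and the corresponding PyTorch call is assumed to be torch.nn.init.kaiming_normal_(tensor, a=0, mode='fan_in', nonlinearity='leaky_relu').

```python
import math
import random

# Gains as documented for torch.nn.init.calculate_gain (copied here by hand;
# this dict is a hypothetical stand-in, not a PyTorch object).
GAINS = {"linear": 1.0, "relu": math.sqrt(2.0), "selu": 3.0 / 4.0}


def leaky_relu_gain(a=0.0):
    """Gain for a leaky ReLU with negative slope a; a=0 gives the plain ReLU gain."""
    return math.sqrt(2.0 / (1.0 + a ** 2))


def kaiming_normal_std(fan, gain=math.sqrt(2.0)):
    """Standard deviation of the zero-mean normal: gain / sqrt(fan)."""
    return gain / math.sqrt(fan)


def kaiming_normal_matrix(fan_out, fan_in, a=0.0, seed=None):
    """Sample a fan_out x fan_in weight matrix using fan_in scaling (illustrative only)."""
    rng = random.Random(seed)
    std = kaiming_normal_std(fan_in, leaky_relu_gain(a))
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


if __name__ == "__main__":
    weights = kaiming_normal_matrix(64, 512, seed=0)
    flat = [w for row in weights for w in row]
    mean = sum(flat) / len(flat)
    std = math.sqrt(sum((w - mean) ** 2 for w in flat) / len(flat))
    print(f"target std {kaiming_normal_std(512):.4f}, sample std {std:.4f}")
    # With the 'linear' gain the variance is 1 / fan, the value tied to the
    # nonlinearity='linear' advice for SELU networks.
    print(f"linear-gain variance for fan=256: {kaiming_normal_std(256, GAINS['linear']) ** 2}")
```

With gain 1 ('linear') the weights get variance 1/fan, whereas the 'selu' gain of 3/4 shrinks the standard deviation by that factor, which is the difference the nonlinearity='linear' advice refers to.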

From discuss.pytorch.org

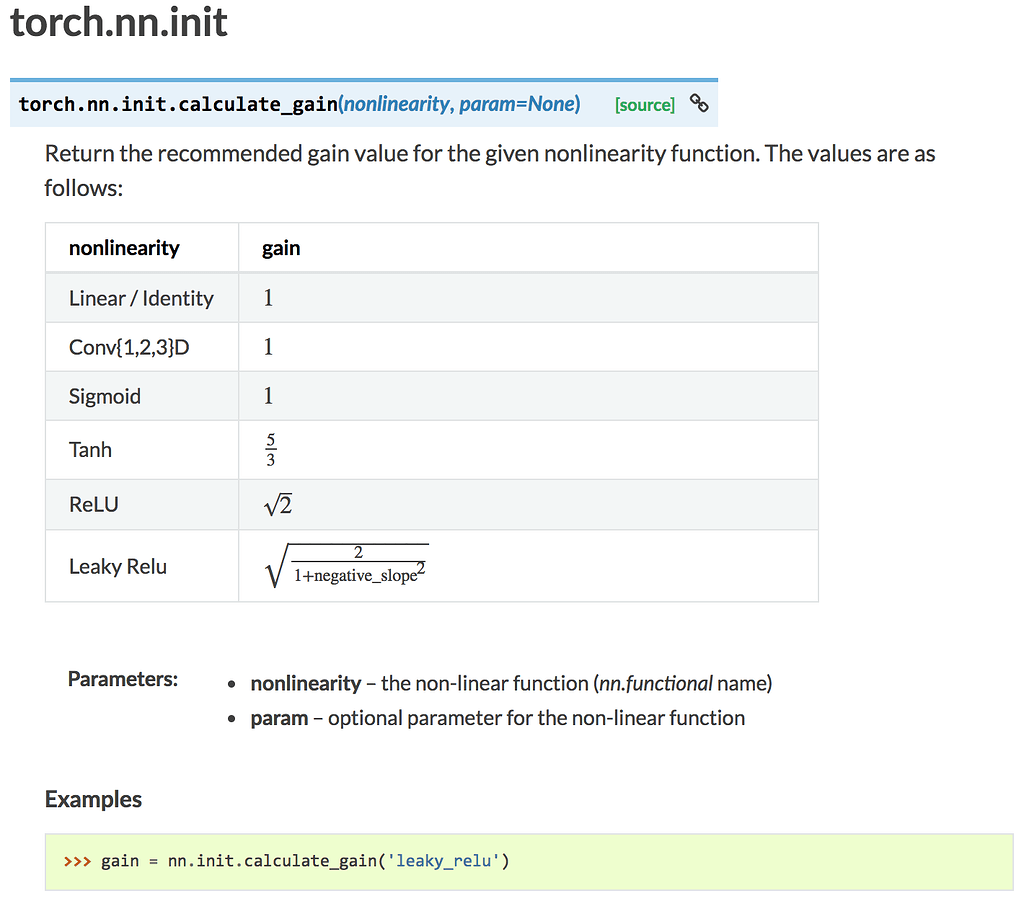

How to use torch.nn.init.calculate_gain? PyTorch Forums

From www.cnpython.com

Why does torch.nn.Sigmoid() behave differently from torch.Sigmoid? Q&A, Python中文网

From zhuanlan.zhihu.com

In-depth PyTorch analysis 1: torch.nn.Module methods and source code, Zhihu

From blog.csdn.net

Model initialization, CSDN Blog

From github.com

torch.eye(..., out=t) / nn.init.eye_ does not work on MPS for tensor

From blog.csdn.net

[PyTorch] Initialization functions implemented in nn.init: normal, Xavier (to keep the data distribution, i.e. mean and variance, consistent, similar to BN)

From www.yisu.com

How to initialize parameters with TORCH.NN.INIT in PyTorch, Development Technology, Yisu Cloud

From blog.csdn.net

Summary of PyTorch network parameter initialization methods (torch.nn.init), torch.nn.init.normal_, 神仙院B栋4楼保安's blog, CSDN Blog

From blog.csdn.net

PyTorch study notes (3): parameter initialization and the various Norm layers, longrootchen's blog, CSDN Blog

From blog.csdn.net

Deep learning fundamentals (1): weight initialization, data.size(0), CSDN Blog

From aitechtogether.com

Summary of common PyTorch parameter initialization methods, AI技术聚合

From blog.csdn.net

UltraFastLaneDetectionmaster code study 1, ultrafastlanedetection code walkthrough, CSDN Blog

From kylehh.github.io

Andrej KarpathyBatchNorm Kyle’s Blog

From developer.aliyun.com

Recognizing cats and dogs with TensorFlow + PyTorch (part 2), Alibaba Cloud Developer Community

From blog.csdn.net

PyTorch model parameter initialization, torch kaiming initialization, CSDN Blog

From blog.csdn.net

Deep learning 06: logistic regression (torch implementation), torch.nn.sigmoid, CSDN Blog

From www.solutioninn.com

[Solved] class Conlet (torch.nn.Module) def init SolutionInn

From github.com

GitHub huangpan2507/long_lesson26_LR: lesson26, LR multi-class classification practice, in which initialization is the more important part

From zhuanlan.zhihu.com

Detailed derivation of deep learning parameter initialization: the Xavier and Kaiming methods [part 2], Zhihu

From github.com

Some models warn about `nn.init.kaiming_normal` · Issue 479 · pytorch

From studentprojectcode.com

How to Initialize Weights In A Pytorch Model in 2024?

From blog.csdn.net

How to use torch.nn.Parameter(), torch parameter, CSDN Blog

From zhuanlan.zhihu.com

torch functions, Zhihu

From blog.csdn.net

Kaiming_normal normal distribution, kaiming normal, CSDN Blog

From blog.csdn.net

InceptionV1 code reproduction with very detailed comments (PyTorch), CSDN Blog

From blog.csdn.net

[nn.Parameter] Summary of understanding and a complete guide to initialization methods, CSDN Blog

From velog.io

Comparing PyTorch torch.nn.init and TensorFlow tf.keras.Innitializers

From blog.csdn.net

[PyTorch] torch.nn.init.xavier_uniform_(), CSDN Blog

From blog.csdn.net

torch.nn.init.kaiming_normal_, torch haiming init, CSDN Blog

From blog.csdn.net

PyTorch model initialization, CSDN Blog

From forums.fast.ai

AttributeError module 'torch.nn.init' has no attribute 'kaiming_normal

From blog.csdn.net

Initializing a 2D convolutional neural network to zero compared with other initialization schemes, nn.conv2d zero initialization, CSDN Blog