Partitions Count Spark . spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. in this method, we are going to find the number of partitions using spark_partition_id() function which is used. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number of. read the input data with the number of partitions, that matches your core count. spark generally partitions your rdd based on the number of executors in cluster so that each executor gets fair share of the task. This is the most common way. Columnorname) → dataframe [source] ¶.

from dzone.com

read the input data with the number of partitions, that matches your core count. Columnorname) → dataframe [source] ¶. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number of. in this method, we are going to find the number of partitions using spark_partition_id() function which is used. This is the most common way. use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. spark generally partitions your rdd based on the number of executors in cluster so that each executor gets fair share of the task. spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing.

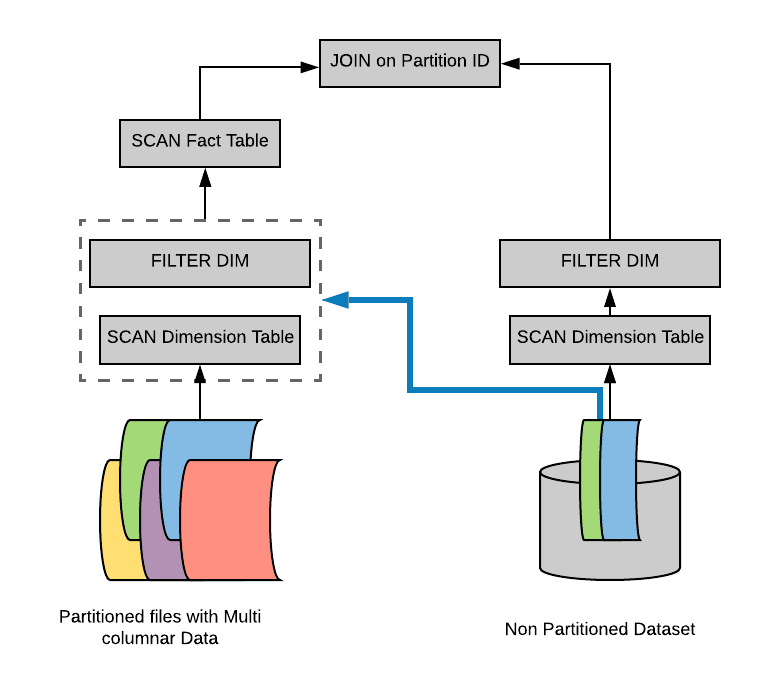

Dynamic Partition Pruning in Spark 3.0 DZone

Partitions Count Spark spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number of. spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. in this method, we are going to find the number of partitions using spark_partition_id() function which is used. Columnorname) → dataframe [source] ¶. This is the most common way. read the input data with the number of partitions, that matches your core count. spark generally partitions your rdd based on the number of executors in cluster so that each executor gets fair share of the task.

From www.youtube.com

Spark Application Partition By in Spark Chapter 2 LearntoSpark YouTube Partitions Count Spark spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. This is the most common way. Columnorname) → dataframe [source] ¶. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number of. in this method, we are going to find the number. Partitions Count Spark.

From towardsdata.dev

Partitions and Bucketing in Spark towards data Partitions Count Spark spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. in this method, we are going to find the number of partitions using spark_partition_id() function which is used. This is the most common way. use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. spark generally partitions your. Partitions Count Spark.

From www.youtube.com

Why should we partition the data in spark? YouTube Partitions Count Spark spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. Columnorname) → dataframe [source] ¶. in this method, we are going to find the number of partitions using spark_partition_id() function which is used. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the. Partitions Count Spark.

From pedropark99.github.io

Introduction to pyspark 3 Introducing Spark DataFrames Partitions Count Spark This is the most common way. Columnorname) → dataframe [source] ¶. in this method, we are going to find the number of partitions using spark_partition_id() function which is used. spark generally partitions your rdd based on the number of executors in cluster so that each executor gets fair share of the task. In this example, we have read. Partitions Count Spark.

From www.youtube.com

How to find Data skewness in spark / How to get count of rows from each partition in spark Partitions Count Spark use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number of. spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. Columnorname) → dataframe [source] ¶. read the input data. Partitions Count Spark.

From naifmehanna.com

Efficiently working with Spark partitions · Naif Mehanna Partitions Count Spark This is the most common way. use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. spark generally partitions your rdd based on the number of executors in cluster so that each executor gets fair share of the task. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the. Partitions Count Spark.

From best-practice-and-impact.github.io

Managing Partitions — Spark at the ONS Partitions Count Spark Columnorname) → dataframe [source] ¶. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number of. spark generally partitions your rdd based on the number of executors in cluster so that each executor gets fair share of the task. read the input data with the number of partitions, that. Partitions Count Spark.

From www.youtube.com

Number of Partitions in Dataframe Spark Tutorial Interview Question YouTube Partitions Count Spark use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. spark generally partitions your rdd based on the number of executors in cluster so that each executor gets fair share of the task. This is the most common way. read the input data with the number of partitions, that matches your core count. In this example,. Partitions Count Spark.

From livebook.manning.com

liveBook · Manning Partitions Count Spark Columnorname) → dataframe [source] ¶. use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number of. read the input data with the number of partitions, that matches your core count. in this method, we are going to. Partitions Count Spark.

From spaziocodice.com

Spark SQL Partitions and Sizes SpazioCodice Partitions Count Spark use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number of. Columnorname) → dataframe [source] ¶. spark generally partitions your. Partitions Count Spark.

From www.qubole.com

Improving Recover Partitions Performance with Spark on Qubole Partitions Count Spark spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. Columnorname) → dataframe [source] ¶. This is the most common way. use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. in this method, we are going to find the number of partitions using spark_partition_id() function which is used.. Partitions Count Spark.

From www.turing.com

Resilient Distribution Dataset Immutability in Apache Spark Partitions Count Spark in this method, we are going to find the number of partitions using spark_partition_id() function which is used. This is the most common way. use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. read the input data with the number of partitions, that matches your core count. spark partitioning refers to the division of. Partitions Count Spark.

From sparkbyexamples.com

Get the Size of Each Spark Partition Spark By {Examples} Partitions Count Spark in this method, we are going to find the number of partitions using spark_partition_id() function which is used. This is the most common way. spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained. Partitions Count Spark.

From hadoopsters.wordpress.com

How to See Record Count Per Partition in a Spark DataFrame (i.e. Find Skew) Hadoopsters Partitions Count Spark This is the most common way. use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. spark generally partitions your rdd based on the number of executors in cluster so that each executor gets fair share of the task. Columnorname) → dataframe [source] ¶. In this example, we have read the csv file (link), i.e., the dataset. Partitions Count Spark.

From toien.github.io

Spark 分区数量 Kwritin Partitions Count Spark in this method, we are going to find the number of partitions using spark_partition_id() function which is used. spark generally partitions your rdd based on the number of executors in cluster so that each executor gets fair share of the task. spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient. Partitions Count Spark.

From sparkbyexamples.com

Spark Partitioning & Partition Understanding Spark By {Examples} Partitions Count Spark Columnorname) → dataframe [source] ¶. This is the most common way. read the input data with the number of partitions, that matches your core count. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number of. use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. spark. Partitions Count Spark.

From sparkbyexamples.com

Spark Get Current Number of Partitions of DataFrame Spark By {Examples} Partitions Count Spark spark generally partitions your rdd based on the number of executors in cluster so that each executor gets fair share of the task. This is the most common way. in this method, we are going to find the number of partitions using spark_partition_id() function which is used. In this example, we have read the csv file (link), i.e.,. Partitions Count Spark.

From exocpydfk.blob.core.windows.net

What Is Shuffle Partitions In Spark at Joe Warren blog Partitions Count Spark in this method, we are going to find the number of partitions using spark_partition_id() function which is used. spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number of. This is the. Partitions Count Spark.

From anhcodes.dev

Spark working internals, and why should you care? Partitions Count Spark This is the most common way. read the input data with the number of partitions, that matches your core count. use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. in this method, we are going to find the number of partitions using spark_partition_id() function which is used. In this example, we have read the csv. Partitions Count Spark.

From dzone.com

Dynamic Partition Pruning in Spark 3.0 DZone Partitions Count Spark use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. Columnorname) → dataframe [source] ¶. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number of. read the input data with the number of partitions, that matches your core count. spark partitioning refers to the division of. Partitions Count Spark.

From blog.csdn.net

spark基本知识点之Shuffle_separate file for each media typeCSDN博客 Partitions Count Spark spark generally partitions your rdd based on the number of executors in cluster so that each executor gets fair share of the task. in this method, we are going to find the number of partitions using spark_partition_id() function which is used. spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient. Partitions Count Spark.

From www.programmersought.com

[Spark2] [Source code learning] [Number of partitions] How does spark read local/dividable Partitions Count Spark use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. in this method, we are going to find the number of partitions using spark_partition_id() function which is used. This is the most common way. Columnorname) → dataframe [source] ¶. read the input data with the number of partitions, that matches your core count. spark generally. Partitions Count Spark.

From blogs.perficient.com

Spark Partition An Overview / Blogs / Perficient Partitions Count Spark This is the most common way. spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. Columnorname) → dataframe [source] ¶. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number of. in this method, we are going to find the number. Partitions Count Spark.

From stackoverflow.com

optimization Spark AQE drastically reduces number of partitions Stack Overflow Partitions Count Spark Columnorname) → dataframe [source] ¶. spark generally partitions your rdd based on the number of executors in cluster so that each executor gets fair share of the task. use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. This is the most common way. In this example, we have read the csv file (link), i.e., the dataset. Partitions Count Spark.

From www.ziprecruiter.com

Managing Partitions Using Spark Dataframe Methods ZipRecruiter Partitions Count Spark read the input data with the number of partitions, that matches your core count. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number of. in this method, we are going to find the number of partitions using spark_partition_id() function which is used. Columnorname) → dataframe [source] ¶. . Partitions Count Spark.

From www.dezyre.com

How Data Partitioning in Spark helps achieve more parallelism? Partitions Count Spark Columnorname) → dataframe [source] ¶. spark generally partitions your rdd based on the number of executors in cluster so that each executor gets fair share of the task. use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number. Partitions Count Spark.

From www.gangofcoders.net

How does Spark partition(ing) work on files in HDFS? Gang of Coders Partitions Count Spark In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number of. Columnorname) → dataframe [source] ¶. read the input data with the number of partitions, that matches your core count. spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. This is. Partitions Count Spark.

From toien.github.io

Spark 分区数量 Kwritin Partitions Count Spark in this method, we are going to find the number of partitions using spark_partition_id() function which is used. spark generally partitions your rdd based on the number of executors in cluster so that each executor gets fair share of the task. This is the most common way. spark partitioning refers to the division of data into multiple. Partitions Count Spark.

From www.youtube.com

Partition Count And Records Per Partition In Spark Big Data Interview Questions YouTube Partitions Count Spark read the input data with the number of partitions, that matches your core count. spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. in this method, we are going to find the number of partitions using spark_partition_id(). Partitions Count Spark.

From www.researchgate.net

Spark partition an LMDB Database Download Scientific Diagram Partitions Count Spark This is the most common way. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number of. Columnorname) → dataframe [source] ¶. spark generally partitions your rdd based on the number of executors in cluster so that each executor gets fair share of the task. use the `spark.sql.shuffle.partitions` configuration. Partitions Count Spark.

From www.projectpro.io

DataFrames number of partitions in spark scala in Databricks Partitions Count Spark spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. This is the most common way. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number of. read the input. Partitions Count Spark.

From cloud-fundis.co.za

Dynamically Calculating Spark Partitions at Runtime Cloud Fundis Partitions Count Spark spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. spark generally partitions your rdd based on the number of executors in cluster so that each executor gets fair share of the task. read the input data with the number of partitions, that matches your core count. In this example,. Partitions Count Spark.

From exokeufcv.blob.core.windows.net

Max Number Of Partitions In Spark at Manda Salazar blog Partitions Count Spark This is the most common way. spark partitioning refers to the division of data into multiple partitions, enhancing parallelism and enabling efficient processing. read the input data with the number of partitions, that matches your core count. use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. In this example, we have read the csv file. Partitions Count Spark.

From exokeufcv.blob.core.windows.net

Max Number Of Partitions In Spark at Manda Salazar blog Partitions Count Spark read the input data with the number of partitions, that matches your core count. In this example, we have read the csv file (link), i.e., the dataset of 5×5, and obtained the number of. Columnorname) → dataframe [source] ¶. This is the most common way. spark partitioning refers to the division of data into multiple partitions, enhancing parallelism. Partitions Count Spark.

From exoocknxi.blob.core.windows.net

Set Partitions In Spark at Erica Colby blog Partitions Count Spark read the input data with the number of partitions, that matches your core count. spark generally partitions your rdd based on the number of executors in cluster so that each executor gets fair share of the task. use the `spark.sql.shuffle.partitions` configuration property to set the number of partitions. In this example, we have read the csv file. Partitions Count Spark.