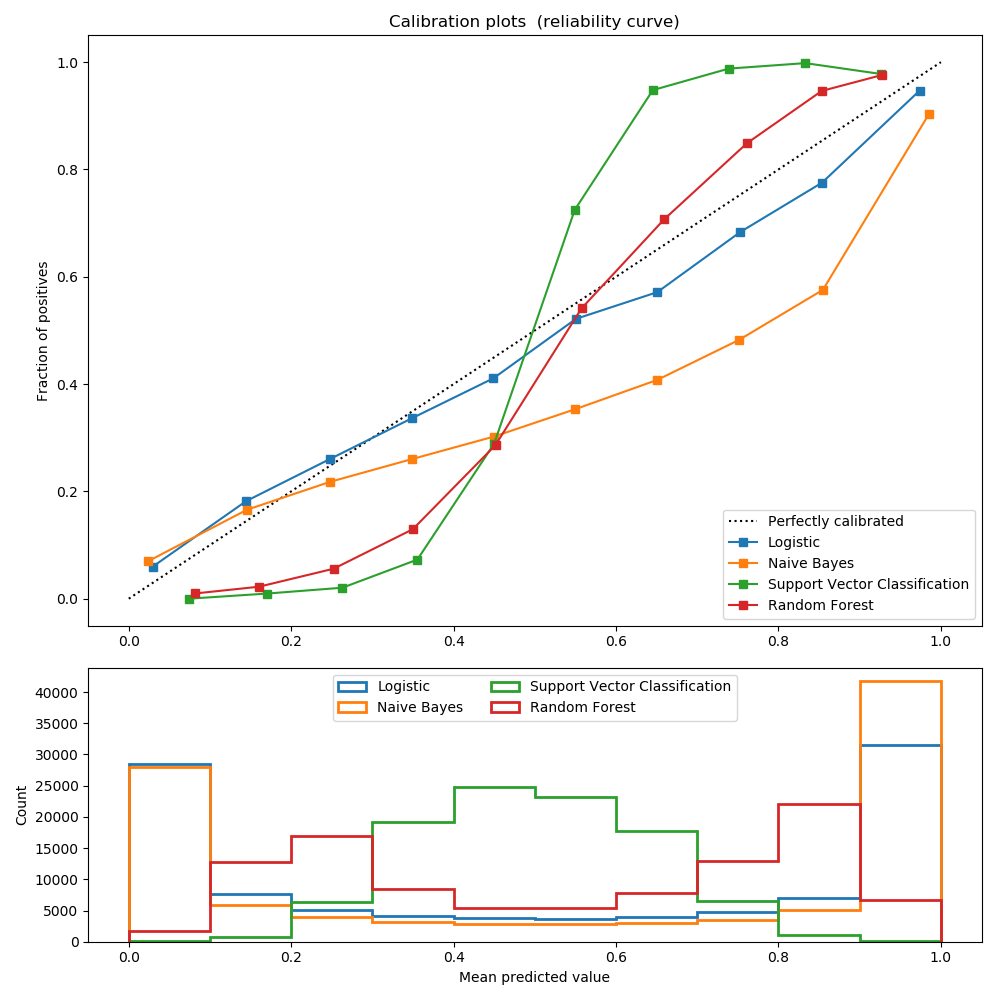

Calibration Curve Sklearn

Calibration curves, also referred to as reliability diagrams (Wilks 1995 [2]), compare how well the probabilistic predictions of a binary classifier are calibrated. They are useful for visually inspecting the calibration of a single classifier and for comparing the calibration of different classifiers. Recent scikit-learn documentation gives the signature as `sklearn.calibration.calibration_curve(y_true, y_prob, *, pos_label=None, n_bins=5, strategy='uniform')`, which computes true and predicted probabilities for a calibration curve. Older documentation lists it as `sklearn.calibration.calibration_curve(y_true, y_prob, normalize=False, n_bins=5)`. The scikit-learn example pages collected here demonstrate how to visualize how well calibrated the predicted probabilities are using these curves.
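As a minimal sketch of how `calibration_curve` might be used, the example below fits a logistic regression on synthetic data and prints the binned curve. The dataset, the choice of `LogisticRegression`, and all variable names are assumptions made for illustration; only the `calibration_curve` parameters `n_bins` and `strategy` come from the signature quoted on this page.

```python
import numpy as np
from sklearn.calibration import calibration_curve
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic binary classification data (illustrative assumption, not from the source).
X, y = make_classification(n_samples=2000, n_features=20, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.5, random_state=0
)

# Any classifier exposing predict_proba would do; logistic regression is just an example.
clf = LogisticRegression(max_iter=1000).fit(X_train, y_train)
y_prob = clf.predict_proba(X_test)[:, 1]  # predicted probability of the positive class

# prob_true: observed fraction of positives in each bin.
# prob_pred: mean predicted probability in each bin.
# Bins that contain no samples are dropped, so fewer than n_bins points may come back.
prob_true, prob_pred = calibration_curve(
    y_test, y_prob, n_bins=5, strategy="uniform"
)

for mean_pred, frac_pos in zip(prob_pred, prob_true):
    print(f"mean predicted = {mean_pred:.2f}, observed positives = {frac_pos:.2f}")
```

For a well-calibrated model the two columns should be close to each other in every bin. Plotting `prob_pred` against `prob_true` next to the diagonal gives the reliability diagram described above.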

From scikit-learn.org


Comparison of Calibration of Classifiers — scikit-learn 0.19.2


From lijiancheng0614.github.io

Probability Calibration curves — scikit-learn 0.17 documentation

From scikit-learn.org

Probability Calibration curves — scikit-learn 0.24.2 documentation

From www.atozcolor.com

How to Make a Calibration Curve in Excel A to Z Color

From stackoverflow.com

python Sklearn Calibration Curve on Machine Learning Models Issue

From scikit-learn.org

Comparison of Calibration of Classifiers — scikit-learn 0.19.2

From ploomber.io

Can I trust my model's probabilities? A deep dive into probability

From www.researchgate.net

Calibration curve of the neural network in the testing set Download

From ploomber.io

Can I trust my model's probabilities? A deep dive into probability

From scikit-learn.org

Probability Calibration curves — scikit-learn 0.19.2 documentation

From www.researchgate.net

Calibration curve for Ca. Download Scientific Diagram

From www.researchgate.net

Calibration curve of the GCSC injector voltage vs. film thickness

From www.researchgate.net

Model calibration curve on the validation set. Download Scientific

From blog.sepscience.com

Calibration Curves Part 1

From lijiancheng0614.github.io

Probability Calibration curves — scikit-learn 0.17 documentation

From blog.csdn.net

sklearn Study 07: Visualization (visualizing the sklearn training process), CSDN Blog

From zhuanlan.zhihu.com

Probability calibration: calibration_curve (updated), Zhihu

From www.researchgate.net

Calibration curves linking predicted probabilities to actual success

From www.researchgate.net

Calibration curve. Download Scientific Diagram

From weightinginbayesianmodels.github.io

Calibration Curvefitting

From www.researchgate.net

Calibration curve formed by GCMS measurements of nine calibration

From www.researchgate.net

The calibration curves in the training dataset (from left to right are

From www.researchgate.net

Calibration plots (reliability curve) of the XGBoost, XGBoost

From www.researchgate.net

How can I find limit of detection from a calibration curve? ResearchGate

From www.researchgate.net

Example of calibration curve in the range 0-100 mg/l Download

From www.researchgate.net

The calibration curve of the XGBoost model with variables related to IV

From chem.libretexts.org

5.4 Linear Regression and Calibration Curves Chemistry LibreTexts

From encord.com

Calibration Curve Definition Machine Learning Glossary Encord Encord

From www.researchgate.net

Example of a calibration curve. Download Scientific Diagram

From ploomber.io

Can I trust my model's probabilities? A deep dive into probability

From chem.libretexts.org

5.4 Linear Regression and Calibration Curves Chemistry LibreTexts

From www.youtube.com

How to Generate a ROC Curve with sklearn A Tutorial for Machine

From scikit-learn.org

Comparison of Calibration of Classifiers — scikit-learn 1.5.0 documentation

From www.researchgate.net

Example of a calibration curve, which plots the observed frequency of

From www.geeksforgeeks.org

Probability Calibration of Classifiers in Scikit Learn

From stats.stackexchange.com

machine learning How to calibrate models if we don't have enough data