List Files In Mount Point Databricks

Databricks enables users to mount cloud object storage to the Databricks File System (DBFS) to simplify data access patterns. How does Azure Databricks mount cloud object storage, and what is the syntax for mounting storage?

The `dbutils` utilities document themselves: you can list available utilities (`dbutils.help()`), list available commands for a utility (for example `dbutils.fs.help()`), and display help for a command (for example `dbutils.fs.help("ls")`).

You can list your existing mount points with a `dbutils` command, and you can list all files under an Azure Data Lake Gen2 container. For a large partitioned directory, you can list all the files in each partition and then delete them using an Apache Spark job.

From Python, build the directory path with `path = os.path.join(root, targetdirectory)` and then iterate over it with `for path, subdirs, files in os.walk(path):`.

`# also shows the databricks`

To read a file directly from object storage with Spark, use `spark.read.format("json").load("s3://<bucket>/path/file.json").show()`. The same files can also be queried from Spark SQL and Databricks SQL.
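To make the mounting-syntax and list-mount-points questions above concrete, here is a minimal sketch rather than an official recipe. It assumes the notebook-provided `dbutils` object, whose `dbutils.fs.mounts()` returns records with a `mountPoint` field and whose `dbutils.fs.mount()` accepts `source`, `mount_point`, and `extra_configs`. The helper names `list_mount_points` and `mount_if_absent` are hypothetical, and the commented example assumes an ADLS Gen2 container accessed through a service principal with OAuth; all angle-bracket values are placeholders.

```python
from typing import Any, Dict, List, Optional


def list_mount_points(dbutils: Any) -> List[str]:
    """Return the mount point paths currently registered in the workspace."""
    # dbutils.fs.mounts() returns MountInfo records; we keep only mountPoint.
    return [m.mountPoint for m in dbutils.fs.mounts()]


def mount_if_absent(
    dbutils: Any,
    source: str,
    mount_point: str,
    extra_configs: Optional[Dict[str, str]] = None,
) -> bool:
    """Mount `source` at `mount_point` unless that mount point already exists.

    Returns True if a new mount was created, False if it was already present.
    (Hypothetical helper, not part of dbutils itself.)
    """
    if mount_point in list_mount_points(dbutils):
        return False
    dbutils.fs.mount(
        source=source,
        mount_point=mount_point,
        extra_configs=extra_configs or {},
    )
    return True


# Example call in a notebook (assumes ADLS Gen2 + service principal OAuth;
# angle-bracket values are placeholders):
# configs = {
#     "fs.azure.account.auth.type": "OAuth",
#     "fs.azure.account.oauth.provider.type":
#         "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
#     "fs.azure.account.oauth2.client.id": "<application-id>",
#     "fs.azure.account.oauth2.client.secret":
#         dbutils.secrets.get(scope="<scope-name>", key="<secret-key-name>"),
#     "fs.azure.account.oauth2.client.endpoint":
#         "https://login.microsoftonline.com/<directory-id>/oauth2/token",
# }
# mount_if_absent(dbutils,
#                 "abfss://<container>@<storage-account>.dfs.core.windows.net/",
#                 "/mnt/<mount-name>", configs)
# print(list_mount_points(dbutils))
```

Checking `dbutils.fs.mounts()` first avoids the error `dbutils.fs.mount()` raises when a directory is already mounted. `dbutils.fs.unmount()` and `dbutils.fs.updateMount()` cover the rest of the mount lifecycle.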

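For listing every file under a mount point or an Azure Data Lake Gen2 container, here is a minimal sketch of two approaches. The first assumes `dbutils.fs.ls` returns entries with `path` and `name` fields and marks directories with a trailing `/` in `name`. The second completes the `os.walk` fragment above and assumes the storage is reachable through the local `/dbfs` FUSE path on the cluster. Both helper names, `list_files_recursive` and `walk_files`, are hypothetical.

```python
import os
from typing import Any, List


def list_files_recursive(fs: Any, path: str) -> List[str]:
    """Recursively collect file paths under `path` using a dbutils.fs-like object.

    In a notebook, pass `dbutils.fs` and a path such as "/mnt/<mount-name>" or
    "abfss://<container>@<storage-account>.dfs.core.windows.net/".
    """
    files: List[str] = []
    for info in fs.ls(path):
        # Directory entries returned by dbutils.fs.ls have names ending in "/".
        if info.name.endswith("/"):
            files.extend(list_files_recursive(fs, info.path))
        else:
            files.append(info.path)
    return files


def walk_files(root: str, targetdirectory: str) -> List[str]:
    """List files with os.walk, e.g. root="/dbfs/mnt/<mount-name>" on a cluster
    where the DBFS FUSE mount is available (assumption)."""
    files: List[str] = []
    top = os.path.join(root, targetdirectory)
    # dirpath is used instead of reusing `path`, so the loop does not shadow it.
    for dirpath, subdirs, filenames in os.walk(top):
        for name in filenames:
            files.append(os.path.join(dirpath, name))
    return files
```

Both helpers run on the driver. For very large partitioned directories, listing per partition and deleting with a Spark job, as described above, spreads the work across the cluster instead.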
From www.databricks.com

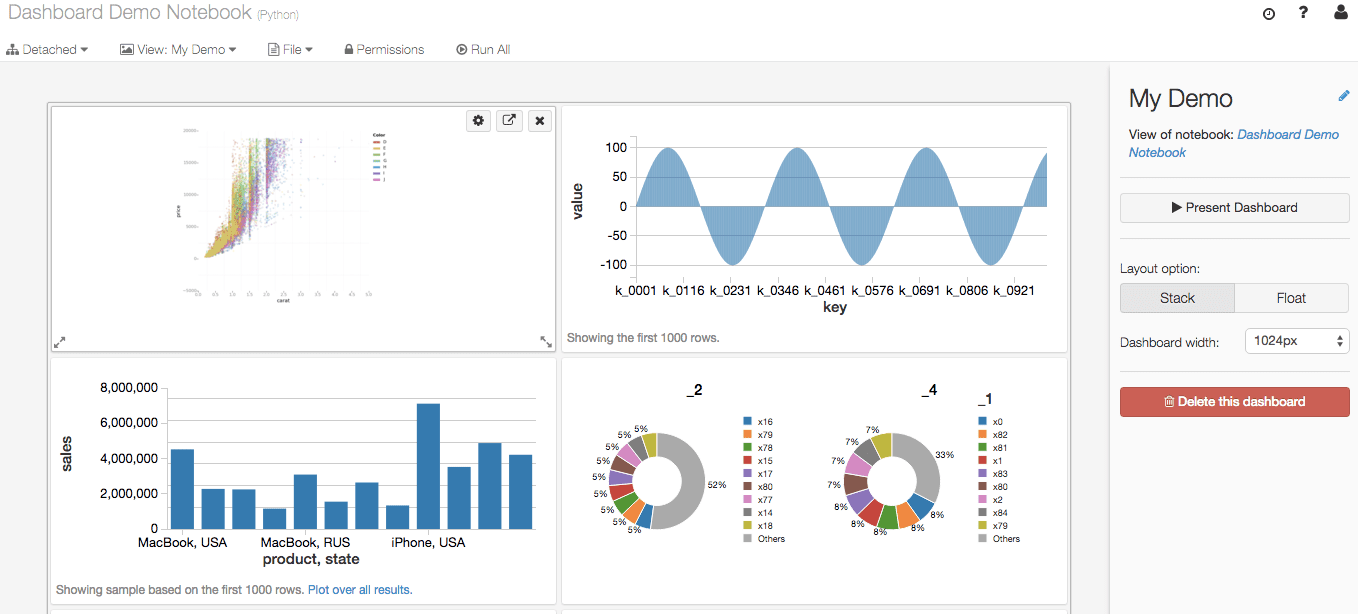

Introducing Databricks Dashboards Databricks Blog

From www.youtube.com

6 Create Mount Point To Azure Blob Storage In Databricks Mount Blob

From tylersguides.com

Linux Filesystem Hierarchy Tyler's Guides

From stackoverflow.com

python How to move files from one folder to another on databricks

From www.databricks.com

NFS Mounting in Databricks Product Databricks Blog

From www.youtube.com

Mount Points and Partitions YouTube

From www.youtube.com

How to create Mount Point using Service Principal in Databricks YouTube

From learn2skills.com

Mounting OCI File Systems From Unix Instances Learn2Skills

From www.youtube.com

How to create Mount Point and connect Blob Storage using Access Keys

From blog.brq.com

How to configure Azure Data Lake mount points in Azure Databricks

From www.databricks.com

How to Make RStudio on Databricks Resilient to Cluster Termination

From www.tpsearchtool.com

How To Configure Azure Data Lake Mount Points On Azure Databricks Images

From www.slideserve.com

PPT Chapter 10 FileSystem Interface PowerPoint Presentation, free

From www.appsloveworld.com

[Solved] How to list all the mount points in Azure Databricks? scala

From stackoverflow.com

python Databricks Can we variablize the mount_point name during

From www.youtube.com

18. Create Mount point using dbutils.fs.mount() in Azure Databricks

From www.youtube.com

Create Azure Databricks Mount Points (Access Keys Method) YouTube

From www.youtube.com

25 Delete/Unmount dbutils.fs.unmount() Mount Point in Azure

From www.youtube.com

21 What is mount point create mount point using dbutils.fs.mount

From azureops.org

Mount and Unmount Data Lake in Databricks AzureOps

From stackoverflow.com

python Azure Databricks save file in a mount point Stack Overflow

From templates.udlvirtual.edu.pe

What Is Aws Databricks Printable Templates

From www.youtube.com

7 Mount ADLS Gen2 To Databricks Create Mount Point to ADLS Gen2

From www.youtube.com

27 Update Mount Point dbutils.fs.updateMount() in Azure Databricks in

From www.youtube.com

Create mount points using sas token in databricks AWS and Azure and

From ifgeekthen.nttdata.com

Securing data access from Azure Databricks

From www.youtube.com

Databricks Mounts Mount your AWS S3 bucket to Databricks YouTube

From www.youtube.com

Azure Databricks Configure Datalake Mount Point Do it yourself

From takethenotes.com

Exploring The World Of Mount Points In Linux Disk Management Take The

From stackoverflow.com

pyspark How to upload text file to FTP from Databricks notebook

From www.aiophotoz.com

How To Configure Azure Data Lake Mount Points On Azure Databricks

From en.opensuse.org

SDB:Basics of partitions, filesystems, mount points openSUSE Wiki

From www.youtube.com

20. Delete or Unmount Mount Points in Azure Databricks YouTube