Meaning Rectified Linear Unit

What is the rectified linear unit (ReLU)? The rectified linear unit, or ReLU for short, is an activation function, one of the many available for deep learning. It is used commonly in deep learning models and is often described as the most commonly used activation function. ReLU has transformed the landscape of neural network design and is regarded as one of the few landmarks of the deep learning revolution. Its distinct characteristics are its simplicity, its computational efficiency, and its ability to mitigate the vanishing gradient problem.
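The page describes ReLU only in words. As a minimal sketch, assuming the usual definition f(x) = max(0, x), the function and its gradient can be written in plain Python. The names `relu` and `relu_grad` are illustrative and not taken from any of the sources listed here, and the gradient value at exactly 0 is a convention chosen for this sketch.

```python
def relu(x: float) -> float:
    """Return x for positive inputs and 0.0 otherwise."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Gradient of ReLU: 1.0 for x > 0, else 0.0 (value at 0 is a convention)."""
    return 1.0 if x > 0 else 0.0


inputs = [-2.0, -0.5, 0.0, 0.5, 2.0]
outputs = [relu(x) for x in inputs]
grads = [relu_grad(x) for x in inputs]

print(outputs)  # [0.0, 0.0, 0.0, 0.5, 2.0]
print(grads)    # [0.0, 0.0, 0.0, 1.0, 1.0]
```

The gradient is exactly 1 for every positive input, so it does not shrink as the input grows. This is the property usually cited when ReLU is said to mitigate the vanishing gradient problem.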

from www.slideteam.net

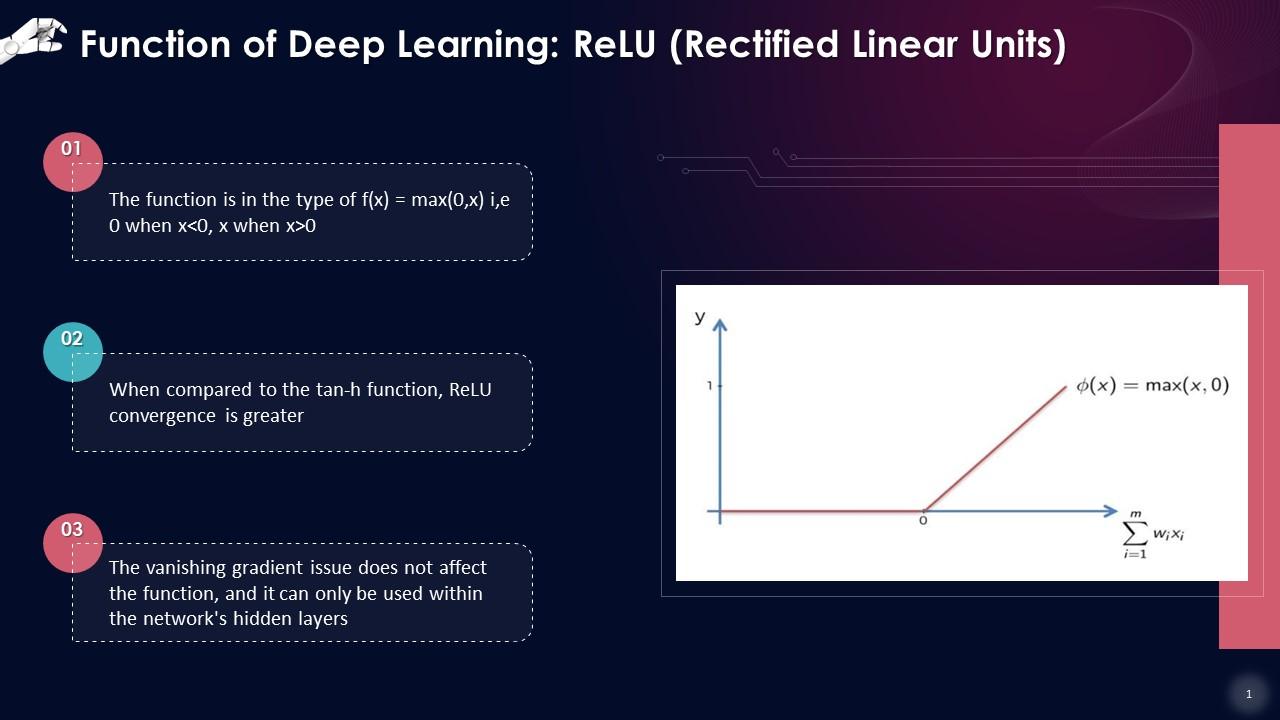

Deep Learning Function Rectified Linear Units Relu Training Ppt

From www.slideteam.net

Relu Rectified Linear Unit Activation Function Artificial Neural

From www.researchgate.net

Plot of the sigmoid function, hyperbolic tangent, rectified linear unit

From lme.tf.fau.de

Lecture Notes in Deep Learning Activations, Convolutions, and Pooling

From www.nbshare.io

Rectified Linear Unit For Artificial Neural Networks Part 1 Regression

From www.researchgate.net

2 Rectified Linear Unit function Download Scientific Diagram

From machinelearning.cards

Noisy Rectified Linear Unit by Chris Albon

From www.researchgate.net

Functions including exponential linear unit (ELU), parametric rectified

From machinelearningmastery.com

A Gentle Introduction to the Rectified Linear Unit (ReLU

From www.researchgate.net

Rectified Linear Unit (ReLU) activation function Download Scientific

From www.researchgate.net

Leaky rectified linear unit (α = 0.1) Download Scientific Diagram

From ibelieveai.github.io

Deep Learning Activation Functions Praneeth Bellamkonda

From www.slideserve.com

PPT Lecture 2. Basic Neurons PowerPoint Presentation, free download

From www.youtube.com

Rectified Linear Unit(relu) Activation functions YouTube

From www.practicalserver.net

Write a program to display a graph for ReLU (Rectified Linear Unit

From www.scribd.com

Rectified Linear Unit PDF

From www.researchgate.net

Rectified Linear Unit (ReLU) [72] Download Scientific Diagram

From www.oreilly.com

Rectified Linear Unit Neural Networks with R [Book]

From www.oreilly.com

Rectified linear unit Keras 2.x Projects [Book]

From srdas.github.io

Deep Learning

From www.researchgate.net

Shortcut connection in residual learning. Here ReLU means rectified

From www.vrogue.co

Rectified Linear Unit Relu Activation Function Deep L vrogue.co

From www.vrogue.co

Rectified Linear Unit Relu Introduction And Uses In M vrogue.co

From www.researchgate.net

Rectified linear unit illustration Download Scientific Diagram

From www.researchgate.net

Rectified Linear Unit (ReLU) activation function [16] Download

From www.researchgate.net

Rectified linear unit (ReLU) activation function Download Scientific

From stackdiary.com

ReLU (Rectified Linear Unit) Glossary & Definition

From www.researchgate.net

Figure B.1 Plots of the ReLU (Rectified Linear Unit), Softplus

From www.researchgate.net

Rectified Linear Unit Activation Function Download Scientific Diagram

From www.youtube.com

Tutorial 10 Activation Functions Rectified Linear Unit(relu) and Leaky

From www.collegesearch.in

Rectifier Definition, Types, Application, Uses and Working Principle

From pub.aimind.so

Rectified Linear Unit (ReLU) Activation Function by Cognitive Creator

From fyohjtdoj.blob.core.windows.net

Rectified Linear Unit Nedir at Marie Garman blog

From www.slideteam.net

Deep Learning Function Rectified Linear Units Relu Training Ppt

From morioh.com

Rectified Linear Unit (ReLU) Activation Function

From slideplayer.com

CSC 578 Neural Networks and Deep Learning ppt download