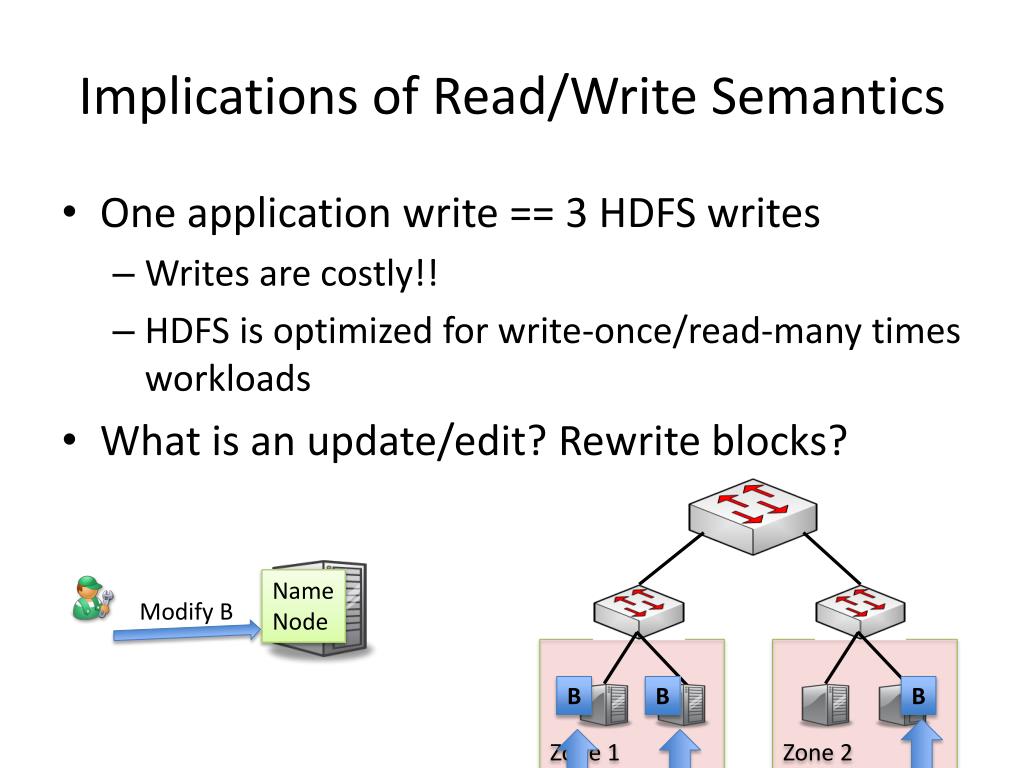

HDFS follows a write-once-read-many access model: you place a file into HDFS once and can then read it any number of times. A file that has been created, written, and closed does not need to be changed, so once it is in HDFS it is effectively immutable (recent Hadoop releases allow appends and truncates, but not arbitrary in-place updates). This assumption simplifies data coherency issues. More precisely, it makes reliable writes in a distributed cluster with complex failure patterns significantly easier, because data that has already been written is never modified in place and its replicas cannot diverge through later updates.
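
The access model above can be illustrated with a small in-memory sketch. It is a toy model, not the HDFS client API: the class `WormStore` and its methods are hypothetical names, and it simplifies real HDFS by letting readers see only files that have been closed. It shows the two rules that matter here: a path can be created only once, and after `close` every further write is rejected, so all readers see the same bytes.

```python
class WormStore:
    """Toy in-memory model of write-once-read-many files.

    Hypothetical class, NOT the HDFS client API; it only illustrates the
    access model. Simplification: readers only see files that are closed.
    """

    def __init__(self):
        self._closed = {}  # path -> immutable bytes of closed files
        self._open = {}    # path -> bytearray for files still being written

    def create(self, path):
        # a path can be created only once
        if path in self._closed or path in self._open:
            raise FileExistsError(path)
        self._open[path] = bytearray()

    def write(self, path, data):
        # only files that are still open accept writes
        if path not in self._open:
            raise PermissionError(f"{path} is not open for writing")
        self._open[path].extend(data)

    def close(self, path):
        # freeze the buffer; after this the file never changes
        self._closed[path] = bytes(self._open.pop(path))

    def read(self, path):
        # every reader gets the same bytes because they never change
        return self._closed[path]


store = WormStore()
store.create("/logs/day1")
store.write("/logs/day1", b"event-1\n")
store.close("/logs/day1")
print(store.read("/logs/day1"))  # b'event-1\n'
try:
    store.write("/logs/day1", b"event-2\n")
except PermissionError as err:
    print("rejected:", err)
```

Because a closed file can never change, there is no need for the read-side coordination that a mutable store would require; this is the coherency simplification described above, reduced to its simplest form.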

The NameNode, which manages the file system namespace and makes all decisions regarding block replication, periodically receives a Heartbeat and a Blockreport from each of the DataNodes in the cluster. Receipt of a Heartbeat implies that the DataNode is functioning properly, and a Blockreport lists all the blocks stored on that DataNode.
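
A rough sketch of how the NameNode could combine these two signals is shown below. The class `NameNodeModel`, its methods, and the `dead_after` timeout are hypothetical; real HDFS keeps this state internally and reads its timeouts from configuration. The model treats a DataNode as dead when its last Heartbeat is older than `dead_after`, treats each Blockreport as a full replacement of what that DataNode holds, and then lists blocks whose live replica count has fallen below the target replication factor.

```python
class NameNodeModel:
    """Toy model of how a NameNode uses Heartbeats and Blockreports.

    Hypothetical names throughout; real HDFS tracks this state internally
    and takes its timeouts from configuration.
    """

    def __init__(self, dead_after):
        self.dead_after = dead_after   # seconds of silence before a DataNode counts as dead
        self.last_heartbeat = {}       # datanode -> time of its last Heartbeat
        self.block_locations = {}      # block id -> set of datanodes reporting it

    def heartbeat(self, datanode, now):
        self.last_heartbeat[datanode] = now

    def block_report(self, datanode, block_ids):
        # a Blockreport lists every block on the DataNode, so it replaces older info
        for holders in self.block_locations.values():
            holders.discard(datanode)
        for block in block_ids:
            self.block_locations.setdefault(block, set()).add(datanode)

    def live_datanodes(self, now):
        return {dn for dn, t in self.last_heartbeat.items() if now - t <= self.dead_after}

    def under_replicated(self, now, replication):
        # blocks with fewer live replicas than the target replication factor
        live = self.live_datanodes(now)
        return sorted(block for block, holders in self.block_locations.items()
                      if len(holders & live) < replication)


nn = NameNodeModel(dead_after=30)
for dn in ("dn1", "dn2", "dn3"):
    nn.heartbeat(dn, now=0)
nn.block_report("dn1", ["b1", "b2"])
nn.block_report("dn2", ["b1"])
nn.block_report("dn3", ["b1", "b2"])
nn.heartbeat("dn1", now=40)
nn.heartbeat("dn2", now=40)  # dn3 goes silent
print(sorted(nn.live_datanodes(now=45)))           # ['dn1', 'dn2']
print(nn.under_replicated(now=45, replication=2))  # ['b2']
```

In this example dn3 stops sending Heartbeats, so block `b2` is left with a single live replica on dn1; in a real cluster that is the kind of condition that would lead the NameNode to schedule re-replication.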

When serving reads, HDFS tries to minimize global bandwidth consumption and read latency by satisfying each request from the replica closest to the reader. Therefore, if a replica resides on the same rack as the reader node, that replica is preferred.
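
The replica preference can be sketched as "pick the replica with the smallest network distance to the reader". The functions `network_distance` and `choose_replica` are hypothetical, and locations are simplified to `(rack, host)` pairs; the distance values follow the commonly described Hadoop convention of 0 for the same node, 2 for the same rack, and 4 for different racks.

```python
def network_distance(a, b):
    """Distance between two (rack, host) locations, following the common
    Hadoop convention: 0 = same node, 2 = same rack, 4 = different racks."""
    if a == b:
        return 0
    if a[0] == b[0]:
        return 2
    return 4


def choose_replica(reader, replicas):
    # pick the replica nearest to the reader; min() keeps the first on ties
    return min(replicas, key=lambda replica: network_distance(reader, replica))


reader = ("rack1", "hostA")
replicas = [("rack2", "hostB"), ("rack1", "hostC"), ("rack3", "hostD")]
print(choose_replica(reader, replicas))  # ('rack1', 'hostC')
```

A replica on the reader's own node wins outright, a same-rack replica beats any off-rack one, and when all replicas are equally far the sketch simply keeps the first in the list.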

Although Hadoop itself is written in Java, MapReduce programs that process data stored in HDFS can also be written in C++ using Hadoop Pipes, the C++ API for Hadoop MapReduce.