Job Speculation In Hadoop

What is speculative execution in Hadoop? Hadoop, built on the MapReduce programming model, has been widely adopted by large companies. The MapReduce model breaks a job into independent tasks and runs them in parallel to reduce the overall job execution time, and MapReduce performance is usually measured by job execution time and cluster throughput. A single slow task can therefore hold up an entire job.

In simple terms, speculative execution means that Hadoop does not try to diagnose and fix slow tasks, because that is hard. Instead, it detects them and runs a duplicate attempt of the slow task. Speculative execution is an option in Hadoop MapReduce. It does not work by launching duplicate tasks at the same time so that they can race each other. Rather, as most of the tasks in a job are coming to a close, the Hadoop platform schedules redundant copies of the tasks that are still running, and the output of whichever attempt finishes first is used.
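The selection step described above can be illustrated with a small simulation. This is a minimal sketch, not Hadoop's actual scheduler logic: the `TaskAttempt` class, the `pick_speculative_candidates` function, and the `slow_factor` and `min_elapsed_s` thresholds are all hypothetical names invented for illustration. It only shows the general idea of comparing each running task's progress rate with the average and flagging the stragglers for a backup attempt.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    task_id: str
    progress: float   # fraction complete, 0.0 to 1.0
    elapsed_s: float  # seconds since the attempt started

    @property
    def rate(self) -> float:
        """Progress per second; 0.0 if the attempt has only just started."""
        return self.progress / self.elapsed_s if self.elapsed_s > 0 else 0.0


def pick_speculative_candidates(attempts, slow_factor=0.5, min_elapsed_s=60.0):
    """Return IDs of running tasks whose progress rate is well below average.

    slow_factor and min_elapsed_s are hypothetical knobs for this sketch,
    not Hadoop configuration properties.
    """
    # Ignore finished tasks and tasks too young to judge fairly.
    running = [a for a in attempts
               if a.progress < 1.0 and a.elapsed_s >= min_elapsed_s]
    if len(running) < 2:
        return []  # nothing to compare against
    avg_rate = mean(a.rate for a in running)
    return [a.task_id for a in running if a.rate < slow_factor * avg_rate]


attempts = [
    TaskAttempt("map_0", progress=0.90, elapsed_s=100.0),
    TaskAttempt("map_1", progress=0.85, elapsed_s=100.0),
    TaskAttempt("map_2", progress=0.20, elapsed_s=100.0),  # straggler
    TaskAttempt("map_3", progress=1.00, elapsed_s=80.0),   # already finished
]
candidates = pick_speculative_candidates(attempts)
print(candidates)  # ['map_2']
```

Only `map_2` is flagged, so only one backup attempt would be launched rather than a duplicate of every task. In Hadoop 2 and later, the feature itself is switched on or off per job with the `mapreduce.map.speculative` and `mapreduce.reduce.speculative` properties.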

From ikkkp.github.io

MapReduce Working Principle in Hadoop Huangzl's blog

From assets.velvetjobs.com

Hadoop Job Description Velvet Jobs

From assets.velvetjobs.com

Hadoop Job Description Velvet Jobs

From www.educba.com

Career in Hadoop Career Scope with Salary Survey

From www.geeksforgeeks.org

Hadoop Introduction

From mingyue.me

hadoop mapreduce job execution flow in detail Yan's Notes

From www.knowledgehut.com

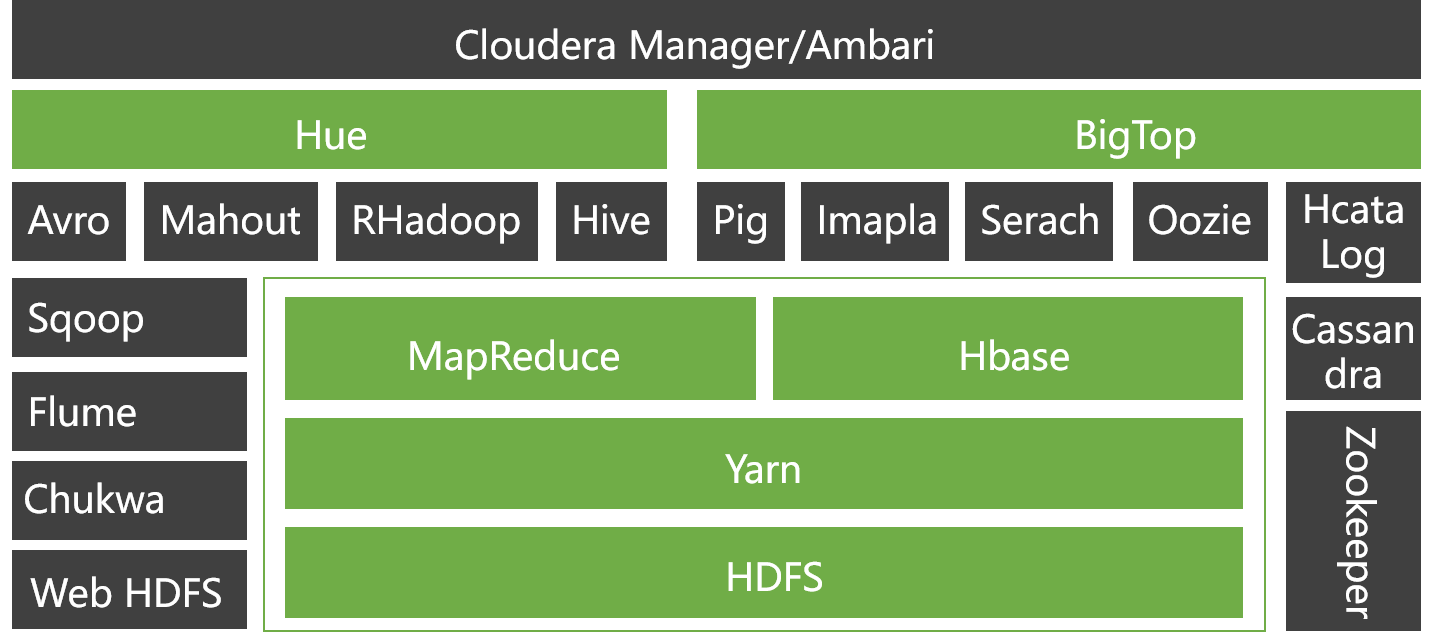

Understanding Hadoop Ecosystem Architecture, Components & Tools

From www.geeksforgeeks.org

What is Schema On Read and Schema On Write in Hadoop?

From www.toptal.com

Hadoop Developer Job Description Mar 2024 Toptal®

From www.interviewbit.com

Hadoop Architecture Detailed Explanation InterviewBit

From stph.scenari-community.org

MapReduce dans Hadoop Implémentation dans Hadoop

From wilson.velvetjobs.com

Hadoop Job Description Velvet Jobs

From www.youtube.com

Hadoop Mapreduce Anatomy(MR Job Architecture) Step by step YouTube

From ikkkp.github.io

MapReduce Working Principle in Hadoop Huangzl's blog

From www.youtube.com

032 Job Scheduling in MapReduce in hadoop YouTube

From data-flair.training

Map Only Job in Hadoop MapReduce with example DataFlair

From www.velvetjobs.com

Hadoop Administrator Job Description Velvet Jobs

From mindmajix.com

Getting Started With Hadoop Job Operations

From www.researchgate.net

Hadoop installation and environment setup Download Scientific Diagram

From www.researchgate.net

Overview of job execution in Hadoop MapReduce Download Scientific Diagram

From blog.dellemc.com

7 best practices for setting up Hadoop on an EMC Isilon cluster

From data-flair.training

Hadoop Optimization Job Optimization & Performance Tuning DataFlair

From www.researchgate.net

An example scenario of job scheduling in Hadoop Download Scientific

From www.youtube.com

Daemons in Hadoop Hadoop Installation BIG DATA & HADOOP FULL COURSE

From data-flair.training

How Hadoop MapReduce Works MapReduce Tutorial DataFlair

From bigdataanalyticsnews.com

Hadoop Cluster Interview Questions Big Data Analytics News

From wilson.velvetjobs.com

Hadoop Job Description Velvet Jobs

From www.youtube.com

What Is Speculative Execution In Hadoop Hadoop Tutorial

From www.oreilly.com

7. How MapReduce Works Hadoop The Definitive Guide, 4th Edition [Book]

From www.slideteam.net

Apache Hadoop Job Execution Flow Of Mapreduce Ppt Sample

From www.geeksforgeeks.org

Hadoop Pros and Cons

From blog.naver.com

Installation of hadoop in the cluster A complete step by step

From www.dezyre.com

5 Reasons to Learn Hadoop

From tutorials.freshersnow.com

Hadoop Job Profiles, Roles and Salaries

From saigontechsolutions.com

Hadoop Architecture

From assets.velvetjobs.com

Hadoop Job Description Velvet Jobs