Linear Activation Keras

The linear activation function is also called "identity" (multiplied by 1.0) or "no activation." This is because the linear activation function does not change the weighted sum of the input in any way and instead returns the value directly. It is often used as a linear output activation function. In some cases, activation functions have a major effect on the model's ability to converge and on the convergence speed.

Here's a brief overview of some commonly used activation functions in Keras. keras.layers.Activation(activation, **kwargs) applies an activation function to an output. tf_keras.activations.relu(x, alpha=0.0, max_value=None, threshold=0.0) applies the rectified linear unit activation function. In Keras, any network layer (for example, a fully connected layer) can be created with a linear activation function. The sources listed below cover the most popular activation functions in deep learning and how to use them with Keras and TensorFlow 2.
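To make the "returns the value directly" behaviour concrete, here is a minimal plain-Python sketch. It is not Keras code and not Keras's implementation: the helper names `linear`, `relu`, and `dense_unit` are hypothetical, and `relu` only mirrors the parameter names of the signature quoted above (`alpha`, `max_value`, `threshold`) for a single scalar, assuming the piecewise rule given in the Keras documentation.

```python
def linear(x):
    """Identity ("linear") activation: return the input unchanged."""
    return x


def relu(x, alpha=0.0, max_value=None, threshold=0.0):
    """Scalar ReLU sketch (hypothetical helper, not the Keras function).

    Values at or above max_value are clipped, values at or above threshold
    pass through, and everything else is scaled by alpha.
    """
    if max_value is not None and x >= max_value:
        return max_value
    if x >= threshold:
        return x
    return alpha * (x - threshold)


def dense_unit(inputs, weights, bias, activation=linear):
    """One fully connected unit: weighted sum plus bias, then activation."""
    z = sum(w * v for w, v in zip(weights, inputs)) + bias
    return activation(z)


# Same weighted sum, two activations.
z_linear = dense_unit([1.0, 2.0], [0.5, -1.0], 0.25)                 # weighted sum passed through as-is
z_relu = dense_unit([1.0, 2.0], [0.5, -1.0], 0.25, activation=relu)  # negative sum is zeroed out
print(z_linear, z_relu)
```

With the linear activation the unit outputs the weighted sum itself, which is why it suits regression outputs that may be negative or unbounded. Swapping in `relu` changes only the final step.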

From mlnotebook.github.io


A Simple Neural Network Transfer Functions · Machine Learning Notebook


From learnopencv.com

Tensorflow & Keras Tutorial Linear Regression

From velog.io

Deep Learning Activation Functions (Activation function)

From github.com

How to create a step function to use as activation function in Keras

From github.com

Learn How to use Keras for Modeling Linear Regression

From www.youtube.com

Ep2.3 Linear Regression in Keras TFKDeep Learning Exploring

From www.researchgate.net

Model architecture using Keras visualization

From medium.com

Introduction to Different Activation Functions for Deep Learning by

From inside-machinelearning.com

Linear Regression How to do with Keras Best Tutorial

From towardsdatascience.com

7 popular activation functions you should know in Deep Learning and how

From zhuanlan.zhihu.com

Building Complex Model Using Keras Functional API Zhihu

From python.plainenglish.io

Choosing the Right Activation Function in Deep Learning A Practical

From www.researchgate.net

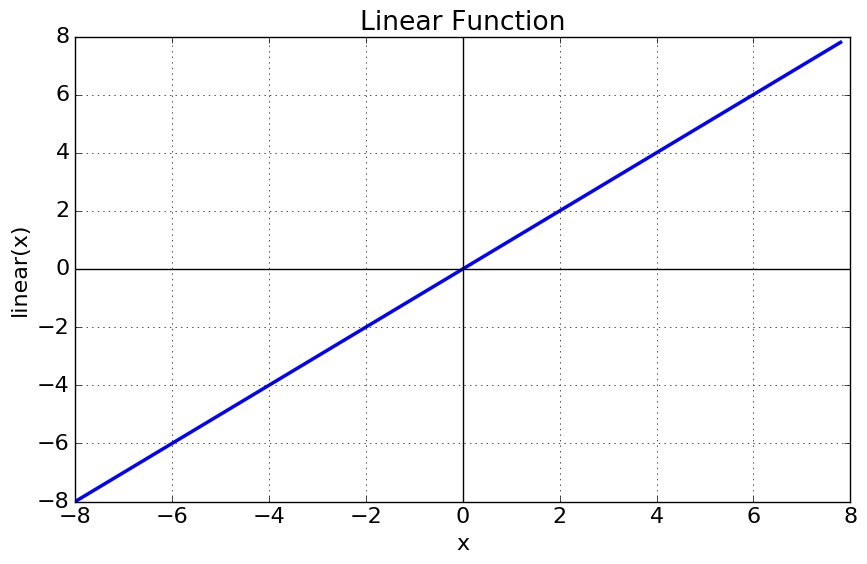

Linear activation function 9

From machinelearningmastery.com

How to Use the Keras Functional API for Deep Learning

From machinelearningmastery.com

How to Choose an Activation Function for Deep Learning

From github.com

Learn How to use Keras for Modeling Linear Regression

From storevep.eksido.io

Types of Activation Functions in Deep Learning explained with Keras

From mlnotebook.github.io

A Simple Neural Network Transfer Functions · Machine Learning Notebook

From dev-state.com

Part 1 Exploring Tensorflow 2 Keras API Dev State - A blog about

From blog.csdn.net

keras CSDN Blog

From www.youtube.com

Neural Network Regression Model with Keras Keras 3 YouTube

From codetorial.net

tf.keras.activations.linear Codetorial

From www.researchgate.net

Architecture of a simple feedforward DNN used in this study (rendered

From keras3.posit.co

Rectified linear unit activation function with upper bound of 6. - op

From medium.com

Building Neural Network using Keras for Classification by Renu

From www.youtube.com

Linear Regression Tutorial using Tensorflow and Keras YouTube

From www.youtube.com

Deep Learning in TensorFlow 6 L1 Keras Functional API Introduction

From ziwangdeng.com

What are the popular activation functions in Keras? AI Hobbyist

From www.aiproblog.com

How to Choose an Activation Function for Deep Learning

From codetorial.net

tf.keras.activations.relu Codetorial

From www.youtube.com

Linear Regression Tutorial using Tensorflow and Keras YouTube

From www.ausgas.co

keras linear layer input layer keras Aep22

From storevep.eksido.io

Types of Activation Functions in Deep Learning explained with Keras

From www.youtube.com

Rectified Linear Unit(relu) Activation functions YouTube

From iq.opengenus.org

Linear Activation Function

From www.oreilly.com

Back Matter Computer Vision Using Deep Learning Neural Network