Define Markov Process

A Markov chain is a mathematical system that experiences transitions from one state to another according to certain probabilistic rules. More generally, a Markov process is a random process indexed by time with the property that the future is independent of the past, given the present. Viewed as a sequence, it consists of possibly dependent random variables ($X_1, X_2, X_3, \dots$), identified by increasing values of a parameter, typically time, such that the next value depends on the earlier ones only through the most recent state. A Markov process whose time index set $T$ is contained in the natural numbers is called a Markov chain (however, the latter …).
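In symbols, the Markov property (the future depends on the past only through the present) can be written as below for a discrete-time process $(X_n)_{n \in \mathbb{N}}$. This sketch assumes a countable state space and time-homogeneous transition probabilities $p_{ij}$; neither assumption is required by the general definition. The last line is the $n$-step transition rule that follows from the Chapman-Kolmogorov equations, with $P = (p_{ij})$ the transition matrix.

$$
\begin{aligned}
P(X_{n+1} = j \mid X_n = i, X_{n-1} = i_{n-1}, \dots, X_0 = i_0) &= P(X_{n+1} = j \mid X_n = i) = p_{ij}, \\
p_{ij} \ge 0, \qquad \sum_{j} p_{ij} &= 1, \\
P(X_{m+n} = j \mid X_m = i) &= \left(P^{n}\right)_{ij}.
\end{aligned}
$$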

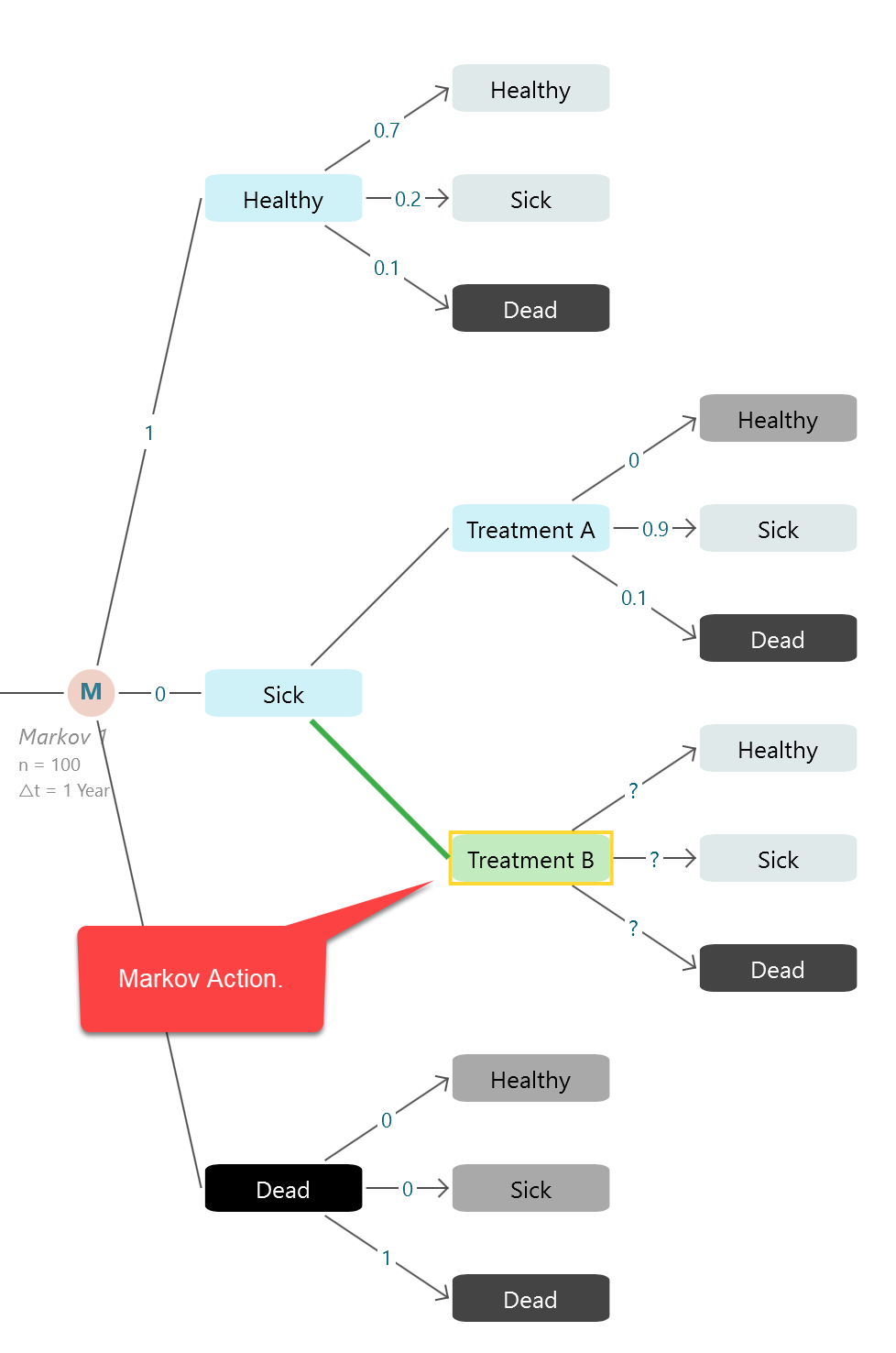

From www.spicelogic.com


Markov Models: Introduction to the Markov Models

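To make the definition at the top of this page concrete, here is a minimal Python sketch of a discrete-time Markov chain. The two states (`sunny`, `rainy`) and their transition probabilities are hypothetical values chosen only for illustration, and the helper names (`step`, `simulate`, `matmul`, `n_step_matrix`) are likewise illustrative. Each sampled step looks only at the current state, which is exactly the Markov property; `n_step_matrix` computes the matrix power $P^n$ of $n$-step transition probabilities.

```python
import random

# Hypothetical two-state chain: the states and probabilities below are
# illustrative assumptions, not data from any source.
STATES = ["sunny", "rainy"]
TRANSITIONS = {
    "sunny": {"sunny": 0.9, "rainy": 0.1},
    "rainy": {"sunny": 0.5, "rainy": 0.5},
}


def step(state: str, rng: random.Random) -> str:
    """Sample the next state from the current state's row only (Markov property)."""
    row = TRANSITIONS[state]
    return rng.choices(list(row), weights=list(row.values()), k=1)[0]


def simulate(start: str, n_steps: int, seed: int = 0) -> list[str]:
    """Return a sample path X_0, X_1, ..., X_n with n = n_steps."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(n_steps):
        path.append(step(path[-1], rng))
    return path


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Plain square-matrix product, to avoid any third-party dependency."""
    size = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(size)) for j in range(size)]
            for i in range(size)]


def n_step_matrix(n: int) -> list[list[float]]:
    """Return P^n, whose (i, j) entry is P(X_{m+n} = j | X_m = i)."""
    p = [[TRANSITIONS[i][j] for j in STATES] for i in STATES]
    # Start from the identity matrix, i.e. P^0.
    result = [[1.0 if i == j else 0.0 for j in range(len(STATES))]
              for i in range(len(STATES))]
    for _ in range(n):
        result = matmul(result, p)
    return result


if __name__ == "__main__":
    print(simulate("sunny", 10, seed=42))
    print(n_step_matrix(2))
```

With these illustrative numbers, `n_step_matrix(2)` has rows of approximately `[0.86, 0.14]` and `[0.70, 0.30]`, and as `n` grows both rows approach the stationary distribution `[5/6, 1/6]`. A dictionary of rows keeps the example readable; for larger chains a NumPy array and `numpy.linalg.matrix_power` would be the more usual choice.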

- Introduction to Discrete Time Markov Processes (Time Series Analysis), from timeseriesreasoning.com
- (A) Graphical representation of the Markov process $(X_t)_{t \in \mathbb{N}}$. (B …, from www.researchgate.net
- Markov Decision Process, from www.geeksforgeeks.org
- Markov Processes and Birth-Death Processes (PowerPoint), from www.slideserve.com
- Markov Decision Process in This Article (scientific diagram), from www.researchgate.net
- A corresponding Markov process (for $\mu = +\infty$) to Eyal and Sirer [23 …, from www.researchgate.net
- An Introduction to Markov Decision Processes, Sarah Hickmott (PowerPoint), from www.slideserve.com
- Stochastic Processes (PowerPoint), from www.slideserve.com
- Markov Chains (PowerPoint), from www.slideserve.com
- Definition and Example of a Markov Transition Matrix, from www.thoughtco.com
- Markov Decision Process (MDP) Simplified, by Bibek Chaudhary, from medium.com
- Markov Decision Process (video), from www.youtube.com
- Markov Model Definition, from deepai.org
- Markov process (video), from www.youtube.com
- Markov decision process, Cornell University Computational Optimization, from optimization.cbe.cornell.edu
- Markov Chain, from www.introtoalgo.com
- The Markov Property, Chain, Reward Process and Decision Process, by …, from medium.com
- Introduction to Hidden Markov Model (HMM) and its application in Stock …, from www.theaidream.com
- Reinforcement Learning, Part 2: Markov Decision Processes, by Andreas …, from towardsdatascience.com
- Markov Decision Processes, Quant RL, from quantrl.com
- Markov Chains: n-step Transition Matrix, Part 3 (video), from www.youtube.com
- Isolated-Word Speech Recognition Using Hidden Markov Models (PowerPoint), from www.slideserve.com
- Three different sub-Markov processes (scientific diagram), from www.researchgate.net
- Markov Decision Process (PowerPoint), from www.slideserve.com
- Markov Chains, Lecture 5 (PowerPoint), from www.slideserve.com
- A General Result for Markov Processes, from www.eng.buffalo.edu
- RL Markov Decision Processes, NIUHE, from www.52coding.com.cn
- Reinforcement learning: What is the difference between State Value …, from datascience.stackexchange.com
- Introducing the Markov decision process, Hands-On Reinforcement …, from subscription.packtpub.com
- Markov Decision Processes (video), from www.youtube.com