Standard Tokenizer

The standard tokenizer in Elasticsearch breaks text into individual tokens. It provides grammar-based tokenization based on the Unicode Text Segmentation algorithm, as specified in Unicode Standard Annex #29, which it uses to find word boundaries: text is divided into terms wherever that algorithm places a word boundary, and most punctuation symbols are removed. This makes it a good tokenizer for most European languages. I am using the default tokenizer (standard) for my index in Elasticsearch and adding documents to it.
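The word-boundary behaviour described above can be approximated in a few lines of Python. This is a minimal sketch, not the Lucene implementation that Elasticsearch uses: `standard_tokenize` and `_TOKEN_RE` are hypothetical names, and the regular expression only covers common Latin-script cases (runs of letters and digits, apostrophes or periods inside words, periods or commas inside numbers). It does not reproduce UAX #29's handling of scripts such as Chinese, Japanese or Thai. The `max_token_length` parameter mirrors the Elasticsearch tokenizer setting of the same name (default 255), under which over-long tokens are split at that interval.

```python
import re
from typing import List

# Hypothetical, simplified approximation of UAX #29 word boundaries.
# Not the Elasticsearch/Lucene implementation; Latin-script cases only.
_TOKEN_RE = re.compile(
    r"\w+"                                # run of letters, digits or underscores
    r"(?:"
    r"(?:(?<=[^\W\d_])['.](?=[^\W\d_])"   # apostrophe or period between letters: dog's, U.S
    r"|(?<=\d)[.,'](?=\d))"               # period, comma or apostrophe between digits: 3.14, 1,000
    r"\w+"                                # the token continues after the joining character
    r")*"
)


def standard_tokenize(text: str, max_token_length: int = 255) -> List[str]:
    """Split text on (approximate) word boundaries, dropping whitespace and punctuation.

    Tokens longer than max_token_length are cut into chunks of that size,
    mirroring the documented behaviour of the Elasticsearch setting.
    """
    tokens = []
    for match in _TOKEN_RE.finditer(text):
        word = match.group()
        # Split over-long tokens at max_token_length intervals.
        for start in range(0, len(word), max_token_length):
            tokens.append(word[start:start + max_token_length])
    return tokens


if __name__ == "__main__":
    print(standard_tokenize("The 2 QUICK Brown-Foxes jumped over the lazy dog's bone."))
    # ['The', '2', 'QUICK', 'Brown', 'Foxes', 'jumped', 'over', 'the', 'lazy', "dog's", 'bone']
```

Note that the hyphen in "Brown-Foxes" and the trailing full stop are dropped, while the apostrophe inside "dog's" keeps the word together, and case is left unchanged (lowercasing is a token filter's job, not the tokenizer's). To see what the real tokenizer produces, send the same text to the Elasticsearch `_analyze` API, e.g. `POST _analyze` with the body `{"tokenizer": "standard", "text": "The 2 QUICK Brown-Foxes jumped over the lazy dog's bone."}`, and compare the returned tokens with the sketch's output.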

from rwatokenizer.medium.com

The standard tokenizer provides grammar based tokenization (based on the unicode text segmentation algorithm, as specified in. The standard tokenizer divides text into terms on word boundaries, as defined by the unicode text segmentation. I am using default tokenizer (standard) for my index in elastic search. And adding documents to it. The standard tokenizer uses the unicode text segmentation algorithm (as defined in unicode standard annex #29) to find the boundaries. The standard tokenizer in elasticsearch is a powerful tool designed to break text into individual tokens based on the. The standard tokenizer provides grammar based tokenization (based on the unicode text segmentation algorithm, as specified in. A tokenizer of type standard providing grammar based tokenizer that is a good tokenizer for most european language.

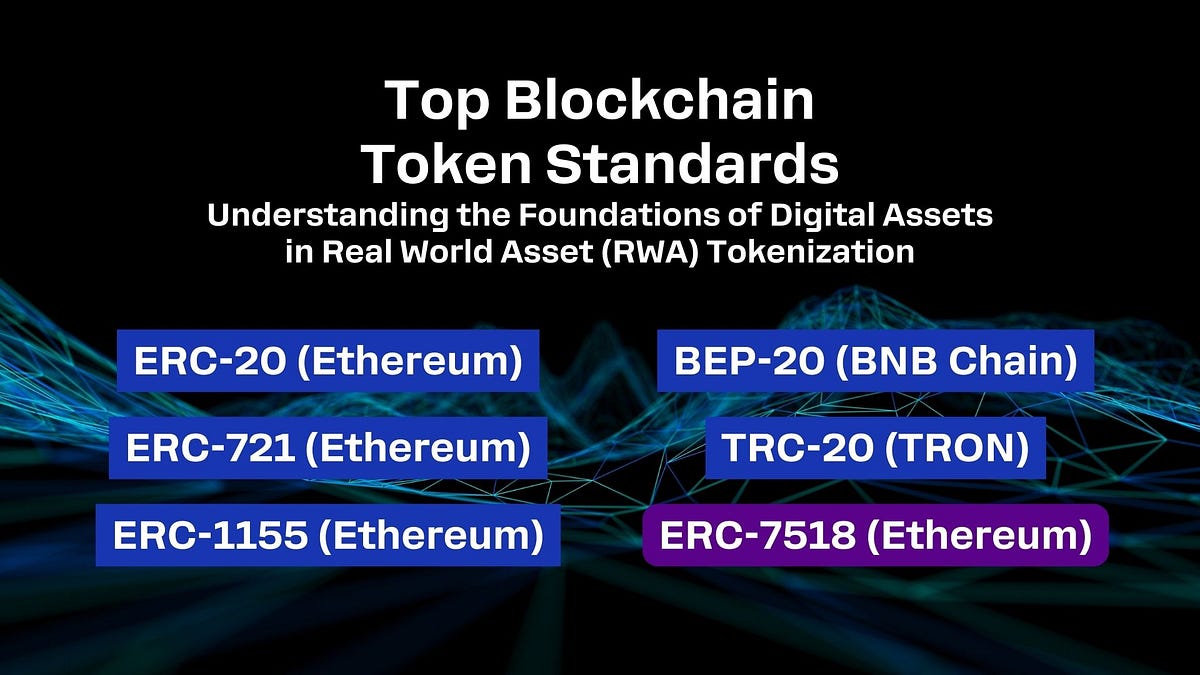

Top Blockchain Token Standards Understanding the Foundations of
