PyTorch Loss Mean

A loss function, also known as a cost or objective function, quantifies the difference between the predictions made by your model and the actual (ground-truth) values. Loss functions are therefore an important component of a neural network. The objective of the learning process is to minimize the loss, so that the model's output closely matches the targets. PyTorch provides a wide array of ready-made loss functions in its nn (neural network) module. One example is CrossEntropyLoss, which is described in the PyTorch 2.5 documentation.
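Here is a minimal sketch of using one of the built-in losses. It applies nn.CrossEntropyLoss to a small hypothetical batch of logits; the tensor values are made up for illustration. It then recomputes the same value by hand. This shows that, with the default reduction, the result is the mean over the batch of the negative log-softmax probability of each sample's target class.

```python
import torch
import torch.nn as nn

# Hypothetical toy batch: 4 samples, 3 classes, raw (unnormalized) logits.
logits = torch.tensor([[2.0, 0.5, -1.0],
                       [0.1, 1.5, 0.3],
                       [-0.5, 0.2, 2.2],
                       [1.0, 1.0, 1.0]])
targets = torch.tensor([0, 1, 2, 0])  # one class index per sample

criterion = nn.CrossEntropyLoss()  # reduction='mean' is the default
loss = criterion(logits, targets)  # 0-dim (scalar) tensor

# Same value by hand: log-softmax over the class dimension, pick each
# sample's target entry, negate, then average over the batch.
log_probs = torch.log_softmax(logits, dim=1)
manual = -log_probs[torch.arange(len(targets)), targets].mean()

print(loss.item(), manual.item())
```

CrossEntropyLoss expects raw logits because it applies log-softmax internally. Feeding it outputs that have already been passed through softmax would apply softmax twice.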

The built-in loss classes in torch.nn accept a reduction parameter ('none' | 'mean' | 'sum'), which defaults to 'mean'. With reduction set to 'none', the loss is returned unreduced, as the tensor of individual losses l_1, ..., l_N (one per element or per sample, depending on the loss). With 'mean' the result is the average of these values; for CrossEntropyLoss with class weights, it is a weighted average. With 'sum' it is their sum. Both 'mean' and 'sum' therefore return a scalar.
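The effect of the three reduction modes can be checked directly. This sketch uses nn.MSELoss on a small hypothetical 2×2 prediction/target pair, with values chosen so the arithmetic is easy to follow. For an element-wise loss like this one, 'mean' divides by the total number of elements (4 here), not by the batch size (2).

```python
import torch
import torch.nn as nn

# Hypothetical predictions and targets: batch of 2 samples, 2 outputs each.
pred = torch.tensor([[1.0, 2.0],
                     [3.0, 4.0]])
target = torch.tensor([[1.5, 2.0],
                       [2.0, 6.0]])

per_elem = nn.MSELoss(reduction='none')(pred, target)   # [[0.25, 0.0], [1.0, 4.0]]
summed = nn.MSELoss(reduction='sum')(pred, target)      # 0.25 + 0 + 1 + 4 = 5.25
mean_loss = nn.MSELoss(reduction='mean')(pred, target)  # 5.25 / 4 elements = 1.3125

# 'mean' and 'sum' are simply reductions of the unreduced tensor.
assert torch.allclose(mean_loss, per_elem.mean())
assert torch.allclose(summed, per_elem.sum())
print(per_elem, summed.item(), mean_loss.item())
```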

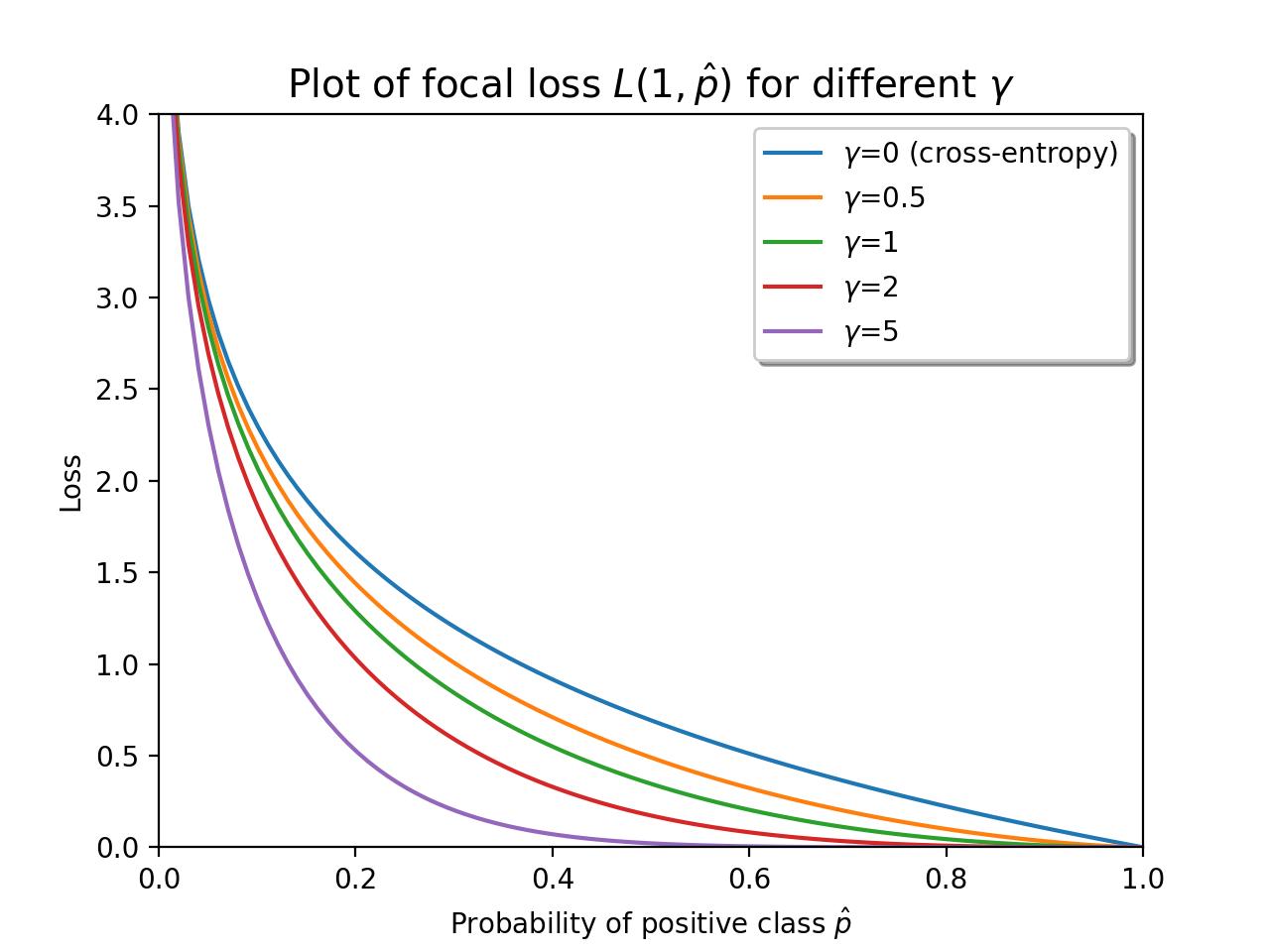

Implementation of Focal Loss for Binary Semantic Segmentation in PyTorch, GShang, 博客园 (cnblogs, www.cnblogs.com)

The loss is the interface between the forward and backward passes. It is computed from the model's output at the end of the forward pass, and calling backward() on it starts the gradient computation. If the loss is already a scalar, as it is with reduction='mean' or 'sum', you can call loss.backward() directly. If it is not a scalar, for example with reduction='none', reduce it to a scalar first (e.g. loss.mean() or loss.sum()) or pass an explicit gradient tensor to backward(). Users can also define their own loss functions. Any function or nn.Module built from differentiable tensor operations works as a loss, and autograd derives its backward pass automatically.
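The following is a minimal sketch of a user-defined loss. WeightedL1Loss is a hypothetical class, not part of torch.nn, that mimics the built-in reduction convention. The sketch also demonstrates the scalar requirement of backward(): the unreduced output holds one value per element, so it is reduced with .mean() before backward() is called.

```python
import torch
import torch.nn as nn


class WeightedL1Loss(nn.Module):
    """Hypothetical custom loss: per-element |pred - target| scaled by a weight."""

    def __init__(self, reduction: str = "mean"):
        super().__init__()
        if reduction not in ("none", "mean", "sum"):
            raise ValueError(f"invalid reduction: {reduction}")
        self.reduction = reduction

    def forward(self, pred: torch.Tensor, target: torch.Tensor,
                weight: torch.Tensor) -> torch.Tensor:
        loss = weight * (pred - target).abs()  # built from differentiable ops only
        if self.reduction == "mean":
            return loss.mean()
        if self.reduction == "sum":
            return loss.sum()
        return loss  # 'none': unreduced, same shape as pred


pred = torch.tensor([0.5, 2.0, -1.0], requires_grad=True)
target = torch.tensor([1.0, 1.5, 1.0])
weight = torch.tensor([1.0, 1.0, 2.0])

per_elem = WeightedL1Loss(reduction="none")(pred, target, weight)  # shape (3,), not a scalar
# per_elem.backward() would raise a RuntimeError here, because backward()
# can only create the initial gradient implicitly for a scalar output.
per_elem.mean().backward()  # reduce to a scalar first
print(pred.grad)  # weight * sign(pred - target) / 3
```

Calling per_elem.backward(torch.ones_like(per_elem)) instead would produce the gradient of per_elem.sum(). It would therefore match the 'sum' reduction rather than 'mean'.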

From pythonguides.com

PyTorch Batch Normalization Python Guides

From jamesmccaffrey.wordpress.com

Why You Can’t Use Accuracy to Compute Loss in PyTorch James D. McCaffrey

From nixtlaverse.nixtla.io

PyTorch Losses Nixtla

From analyticsindiamag.com

Ultimate Guide To Loss functions In PyTorch With Python Implementation

From debuggercafe.com

Training from Scratch using PyTorch

From www.v7labs.com

The Essential Guide to Pytorch Loss Functions

From www.educba.com

PyTorch Loss What is PyTorch loss? How to add PyTorch Loss?

From www.youtube.com

Dive into Deep Learning Lecture 4 Logistic/Softmax regression and

From discuss.pytorch.org

Difference between MeanSquaredError & Loss (where loss = mse) ignite

From github.com

NLPLossPytorch/label_smoothing.py at master · shuxinyin/NLPLoss

From datagy.io

Mean Absolute Error (MAE) Loss Function in PyTorch • datagy

From datagy.io

PyTorch Loss Functions The Complete Guide • datagy

From discuss.pytorch.org

Sudden peaks in mean loss during training vision PyTorch Forums

From github.com

GitHub yiftachbeer/mmd_loss_pytorch A differentiable implementation

From discuss.pytorch.org

Plotting loss curve PyTorch Forums

From datagy.io

Mean Squared Error (MSE) Loss Function in PyTorch • datagy

From discuss.pytorch.org

[Code review] Combining BCE and MSE loss vision PyTorch Forums

From debuggercafe.com

PyTorch Implementation of Stochastic Gradient Descent with Warm Restarts

From www.askpython.com

A Quick Guide to Pytorch Loss Functions AskPython

From blog.paperspace.com

PyTorch Loss Functions

From discuss.pytorch.org

Loss increasing instead of decreasing PyTorch Forums

From encord.com

Machine Learning CrossEntropy Loss Functions

From github.com

pytorchloss/label_smooth.py at master · CoinCheung/pytorchloss · GitHub

From www.youtube.com

Pytorch for Beginners 16 Loss Functions Regression Loss (L1 and

From 9to5answer.com

[Solved] Custom loss function in PyTorch 9to5Answer

From deepai.org

PyTorch Loss Functions The Ultimate Guide DeepAI

From discuss.pytorch.org

Noob question about how Loss functions work in PyTorch PyTorch Forums

From velog.io

Difference Between PyTorch and TF(TensorFlow)

From stackoverflow.com

Pytorch Loss and Accuracy Curve for BERT Stack Overflow

From leechanhyuk.github.io

[Concept summary] Types and Characteristics of Cost (Loss) Functions My Record

From nuguziii.github.io

[PyTorch] Summary of Frequently Used Loss Functions (CrossEntropy, MSE) ZZEN’s Blog

From machinelearningknowledge.ai

Ultimate Guide to PyTorch Loss Functions MLK Machine Learning Knowledge