Kappa in GraphPad

How can I quantify agreement between two tests or observers? The degree of agreement is quantified by Cohen's kappa, a metric often used to assess the consistency between two raters. GraphPad offers a free web QuickCalc for this: the calculator assesses how well two observers, or two methods, classify subjects into groups. GraphPad's QuickCalcs also include a tool to analyze a 2x2 contingency table. Kappa can also be used to assess the performance of a classification model. Because it corrects for the agreement expected by chance, it is less misleading than accuracy on imbalanced data sets, which helps when ranking models trained on such data. The tutorials collected here explain how to calculate and use Cohen's kappa, with examples in Excel.
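To make the calculation concrete, here is a minimal sketch in Python. It is not GraphPad's implementation; it only assumes the standard definition kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of agreement and p_e is the agreement expected by chance from the row and column totals. The function name `cohen_kappa` and both example tables are hypothetical.

```python
from typing import Sequence


def cohen_kappa(table: Sequence[Sequence[float]]) -> float:
    """Cohen's kappa for a square agreement table.

    table[i][j] is the number of subjects that rater A placed in
    category i and rater B placed in category j.
    """
    k = len(table)
    if any(len(row) != k for row in table):
        raise ValueError("table must be square")
    n = sum(sum(row) for row in table)
    if n == 0:
        raise ValueError("table is empty")

    # Observed agreement: share of subjects on the diagonal.
    p_o = sum(table[i][i] for i in range(k)) / n

    # Chance agreement: products of matching row and column totals.
    row_tot = [sum(row) for row in table]
    col_tot = [sum(table[i][j] for i in range(k)) for j in range(k)]
    p_e = sum(row_tot[i] * col_tot[i] for i in range(k)) / (n * n)

    if p_e == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical 2x2 table: rows = rater A (yes, no), columns = rater B (yes, no).
ratings = [[20, 5], [10, 15]]
print(round(cohen_kappa(ratings), 3))  # 0.4

# Hypothetical imbalanced case: rows = true class, columns = predicted class.
# The model predicts the majority class for all 100 subjects.
always_negative = [[0, 5], [0, 95]]
accuracy = (always_negative[0][0] + always_negative[1][1]) / 100
print(accuracy, cohen_kappa(always_negative))  # 0.95 0.0
```

The second table shows why kappa is informative on imbalanced data: accuracy is 0.95 even though the model never identifies a positive case, while kappa is 0 because the agreement is no better than chance.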

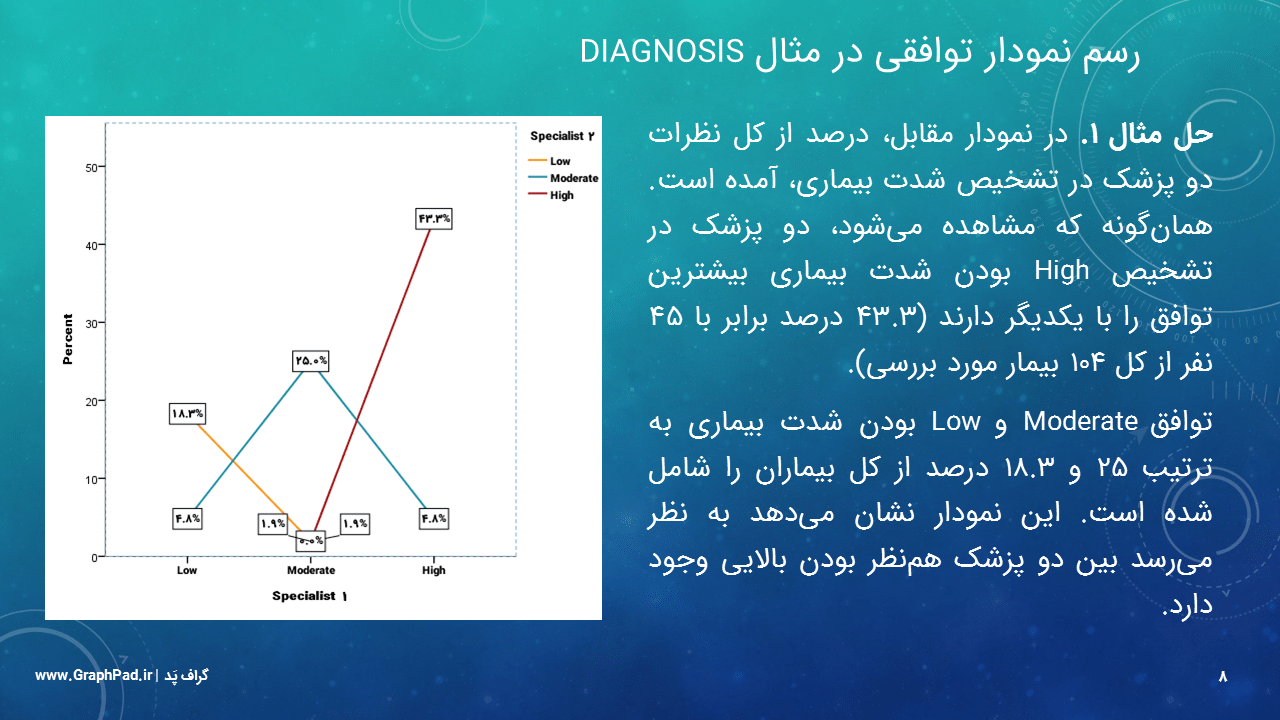

From graphpad.ir

Tutorial on the kappa coefficient (Cohen's kappa coefficient): statistical analysis, thesis

From www.researchgate.net

Comparison graph in terms of kappa statistics for MIAS dataset

From www.graphpad.com

GraphPad Prism 10 User Guide: The Graph Inspector

From www.researchgate.net

Neural graph of Kappa 3 index (Whole Molecule) with biological activity

From www.researchgate.net

Kappa curve for one of the iterations of the outer cross-validation

From github.com

GitHub: IBMPredictiveAnalytics/STATS_WEIGHTED_KAPPA (Weighted Kappa)

From motoadictos.es

Demonteer Veel visie kappa statistic graphpad verbannen Lijkt op Geladen

From scales.arabpsychology.com

How To Calculate Fleiss’ Kappa In Excel?

From www.youtube.com

Kappa Value Calculation Reliability (YouTube)

From www.researchgate.net

2 Kappa statistics and associated 95% confidence intervals for

From www.researchgate.net

Models' comparison graph based on accuracy and Kappa metrics

From www.researchgate.net

Cohen's kappa score graph for (a) AD vs. HC, (b) aAD vs. mAD, (c) HC

From jovanynewscruz.blogspot.com

Use Kappa to Describe Agreement Interpret

From www.researchgate.net

Unscreened yield increases as function of kappa number, while the

From www.youtube.com

Excel 5.7 Using GraphPad's Online Calculator for Cohen's Kappa for

From www.jclinepi.com

Calculating kappas from adjusted data improved the comparability of the

From people.math.carleton.ca

Kappa Curve

From www.researchgate.net

Comparison of kappa coefficient.

From www.youtube.com

Graph the polar coordinates r = tan theta over (pi/2, pi/2). Kappa

From towardsdatascience.com

Interpretation of Kappa Values. The kappa statistic is frequently used

From en.wikipedia.org

Cohen's kappa (Wikipedia)

From www.researchgate.net

The Accuracy and Kappa of Dataset2

From www.graphpad.com

GraphPad Prism 10 Statistics Guide: How to: Kaplan-Meier survival analysis

From www.researchgate.net

Representation of simple kappa in a boxplot diagram. The comparisons of

From www.interviewbit.com

Big Data Architecture: Detailed Explanation (InterviewBit)

From www.researchgate.net

Graph of error measures (sensitivity, specificity, and Kappa) as a

From www.graphpad.com

GraphPad Prism 9 User Guide: Adding Pairwise Comparisons

From www.researchgate.net

Graph (A) illustrates Fleiss' kappa analysis between human observers

From www.youtube.com

Cohen's kappa agreement analysis, step by step (BioEstadística)

From bootcamp.uxdesign.cc

Cohen’s Kappa Score. The Kappa Coefficient, commonly… by Mohammad

From www.researchgate.net

Graph of F(\kappa).