Gini Index Gain

Gini impurity (often called the Gini index), like entropy and information gain, is a metric that decision tree algorithms use to measure the quality of a split. In machine learning it serves as an impurity measure in decision trees for classification tasks. It gives the probability that a randomly chosen sample from a node would be misclassified if it were labeled at random according to the class distribution in that node. Its value is 0 for a perfectly pure node and increases as the classes become more mixed, up to a maximum of 1 − 1/k for k classes (0.5 for two classes). It therefore approaches 1 only when there are many equally frequent classes.

The other way of splitting a decision tree, besides entropy and information gain, is via the Gini index. Both approaches focus on purity: a split is better when its child nodes are purer than the parent, and the split with the largest drop in impurity (the gain) is chosen. Unlike entropy, the Gini index does not use the logarithm function, which makes it cheaper to compute and is one reason it is often picked over information gain. The Gini index favours larger partitions (distributions) and is very easy to implement, whereas information gain favours smaller partitions with many distinct values. In practice the better criterion depends on the data, so it is worth experimenting with both.

This page covers the definitions, formulas and examples of these criteria: how to calculate Gini impurity and entropy when splitting on a feature, how to measure the quality of a split using information gain, gain ratio and the Gini index, and the advantages and disadvantages of each.
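
The criteria described above can be written out as follows. The notation is ours rather than the original page's: p_i is the fraction of a node's samples D in class i, k is the number of classes, and a split sends n_j of the node's n samples to child D_j. The last line works through a node with 6 samples of one class and 4 of the other.

```latex
\begin{aligned}
\mathrm{Gini}(D) &= 1 - \sum_{i=1}^{k} p_i^2 = \sum_{i=1}^{k} p_i (1 - p_i),
  \qquad 0 \le \mathrm{Gini}(D) \le 1 - \tfrac{1}{k} \\
H(D) &= -\sum_{i=1}^{k} p_i \log_2 p_i \\
\mathrm{Gain}_I &= I(D) - \sum_{j} \frac{n_j}{n}\, I(D_j),
  \qquad I \in \{\mathrm{Gini}, H\} \\
\mathrm{GainRatio} &= \frac{\mathrm{Gain}_H}{-\sum_{j} \frac{n_j}{n} \log_2 \frac{n_j}{n}} \\
p = (0.6, 0.4):\quad \mathrm{Gini} &= 1 - (0.36 + 0.16) = 0.48,
  \qquad H = -(0.6 \log_2 0.6 + 0.4 \log_2 0.4) \approx 0.971
\end{aligned}
```

The middle form of the Gini line, the sum of p_i(1 − p_i), is the misclassification probability: a sample of class i is drawn with probability p_i and then mislabeled with probability 1 − p_i.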

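
A minimal Python sketch of the two node-level impurity measures, assuming the node is given as a plain list of class labels (the function names are illustrative, not from any library). `gini_impurity` needs only squares, while `entropy` calls `math.log2`, which is the computational difference mentioned above.

```python
import math
from collections import Counter
from typing import Hashable, Iterable


def class_proportions(labels: Iterable[Hashable]) -> list[float]:
    """Return the fraction of samples in each class present in `labels`."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("labels must not be empty")
    return [c / total for c in counts.values()]


def gini_impurity(labels: Iterable[Hashable]) -> float:
    """Gini impurity: 1 - sum(p_i^2). No logarithm is needed."""
    return 1.0 - sum(p * p for p in class_proportions(labels))


def entropy(labels: Iterable[Hashable]) -> float:
    """Shannon entropy in bits: -sum(p_i * log2(p_i)) over the classes present."""
    return -sum(p * math.log2(p) for p in class_proportions(labels))


if __name__ == "__main__":
    node = ["yes"] * 6 + ["no"] * 4
    print(round(gini_impurity(node), 3))  # 0.48
    print(round(entropy(node), 3))        # 0.971
    print(gini_impurity(["a"] * 10))      # 0.0 (pure node)
    print(gini_impurity(["a", "b"]))      # 0.5 (two-class maximum)
```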

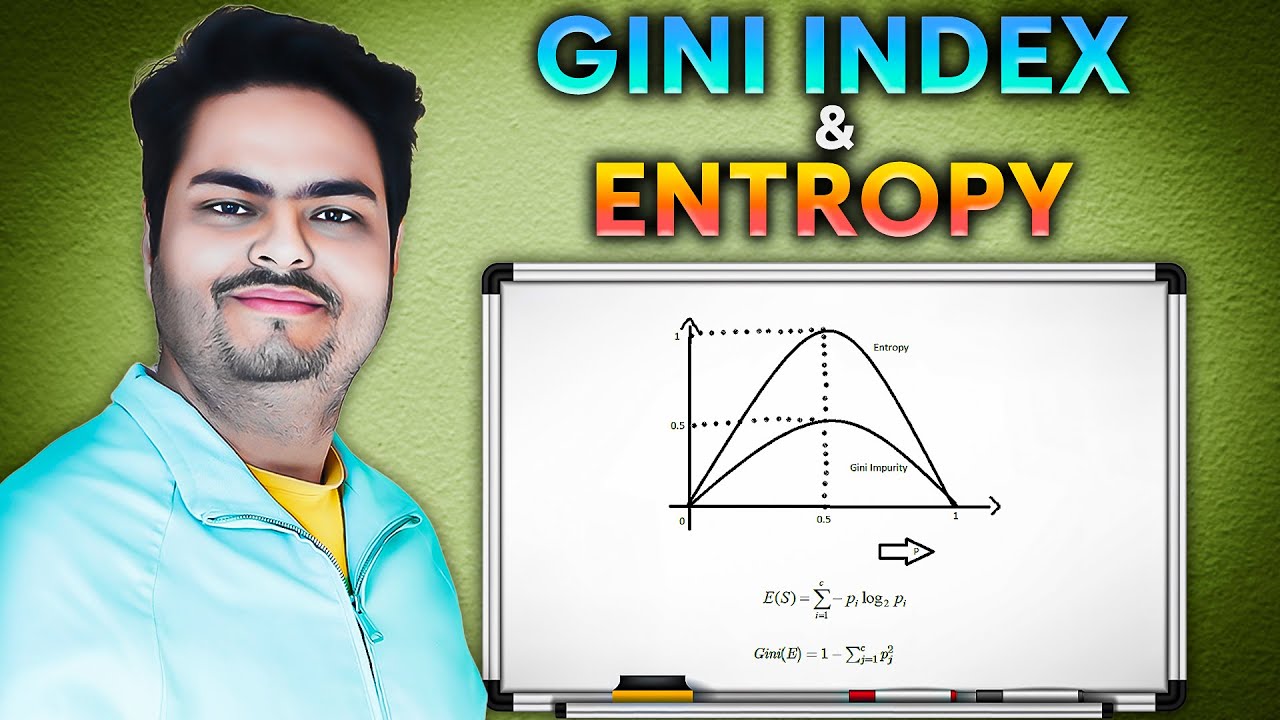

Gini Index and Entropy | Gini Index and Information Gain in Decision Tree (image from www.youtube.com)
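
To score a split rather than a single node, the parent's impurity is compared with the size-weighted impurity of its children. The sketch below is a hypothetical helper, not a library API: it computes information gain when passed `entropy` and Gini gain when passed `gini`. The split shown (a 6/4 parent divided into a 5/1 child and a 1/3 child) is made-up illustrative data.

```python
import math
from collections import Counter
from typing import Callable, Hashable, Sequence

Labels = Sequence[Hashable]


def gini(labels: Labels) -> float:
    """Gini impurity of one node: 1 - sum(p_i^2)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def entropy(labels: Labels) -> float:
    """Entropy of one node in bits."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def impurity_gain(parent: Labels, children: Sequence[Labels],
                  impurity: Callable[[Labels], float]) -> float:
    """Parent impurity minus the size-weighted impurity of the children.

    With impurity=entropy this is information gain; with impurity=gini it is
    the Gini gain (the decrease in Gini impurity).
    """
    n = len(parent)
    if sum(len(child) for child in children) != n:
        raise ValueError("children must partition the parent")
    # Empty children carry zero weight, so they are skipped to avoid dividing by 0.
    weighted = sum(len(child) / n * impurity(child) for child in children if child)
    return impurity(parent) - weighted


if __name__ == "__main__":
    parent = ["yes"] * 6 + ["no"] * 4
    left = ["yes"] * 5 + ["no"]
    right = ["yes"] + ["no"] * 3
    print(round(impurity_gain(parent, [left, right], gini), 3))     # 0.163
    print(round(impurity_gain(parent, [left, right], entropy), 3))  # 0.256
```

Both gains are positive here, so either criterion would accept this split. They are on different scales, though, so only compare gains computed with the same measure.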

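
Information gain's preference for attributes with many distinct values is what gain ratio corrects: it divides the gain by the split information, which is the entropy of the branch sizes. In this sketch (helper names are hypothetical), an attribute that behaves like a unique ID and puts every row in its own branch gets the same information gain as a clean two-way split, but a much lower gain ratio.

```python
import math
from collections import Counter
from typing import Hashable, Sequence

Labels = Sequence[Hashable]


def entropy(labels: Labels) -> float:
    """Entropy of the class labels in bits."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(parent: Labels, children: Sequence[Labels]) -> float:
    """Parent entropy minus the size-weighted entropy of the non-empty children."""
    n = len(parent)
    return entropy(parent) - sum(len(ch) / n * entropy(ch) for ch in children if ch)


def split_info(parent: Labels, children: Sequence[Labels]) -> float:
    """Entropy of the branch sizes themselves; it grows with the number of branches."""
    n = len(parent)
    return -sum(len(ch) / n * math.log2(len(ch) / n) for ch in children if ch)


def gain_ratio(parent: Labels, children: Sequence[Labels]) -> float:
    """Information gain normalised by split information (C4.5-style)."""
    si = split_info(parent, children)
    return information_gain(parent, children) / si if si > 0 else 0.0


if __name__ == "__main__":
    parent = ["yes"] * 6 + ["no"] * 4
    clean_split = [["yes"] * 6, ["no"] * 4]
    id_split = [[label] for label in parent]  # one branch per row, like an ID column
    print(round(information_gain(parent, clean_split), 3), round(gain_ratio(parent, clean_split), 3))  # 0.971 1.0
    print(round(information_gain(parent, id_split), 3), round(gain_ratio(parent, id_split), 3))        # 0.971 0.292
```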

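
For a numeric feature, calculating the Gini impurity for each candidate split usually means sorting the values and trying thresholds between neighbouring distinct values. The sketch below assumes binary splits of the form `value <= threshold` with midpoint thresholds, a common convention but not the only one. It recomputes both sides for every candidate, which is O(n^2) and fine for illustration; a production implementation would update class counts incrementally.

```python
from collections import Counter
from typing import Hashable, Optional, Sequence, Tuple


def gini(labels: Sequence[Hashable]) -> float:
    """Gini impurity of one node: 1 - sum(p_i^2)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_threshold(values: Sequence[float],
                   labels: Sequence[Hashable]) -> Tuple[Optional[float], float]:
    """Find the binary split `value <= threshold` with the lowest weighted Gini.

    Candidate thresholds are midpoints between consecutive distinct sorted values.
    Returns (None, parent Gini) if no candidate lowers the impurity.
    """
    if not values or len(values) != len(labels):
        raise ValueError("values and labels must be non-empty and equally long")
    pairs = sorted(zip(values, labels), key=lambda pair: pair[0])  # sort by value only
    n = len(pairs)
    best_t: Optional[float] = None
    best_score = gini(labels)  # a split must beat the unsplit parent
    for i in range(1, n):
        lo, hi = pairs[i - 1][0], pairs[i][0]
        if lo == hi:
            continue  # identical values cannot be separated by a threshold
        left = [label for _, label in pairs[:i]]
        right = [label for _, label in pairs[i:]]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best_score:
            best_t, best_score = (lo + hi) / 2, score
    return best_t, best_score


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
    y = ["no", "no", "no", "yes", "yes", "yes"]
    print(best_threshold(x, y))  # (3.5, 0.0)
```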

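
The misclassification reading of the Gini index can be checked empirically: draw a sample from the node, guess a label by drawing again from the same node, and count how often the guess is wrong. This seeded Monte Carlo sketch (function names are hypothetical) should land close to the exact value; for the illustrative 5/3/2 node below, 1 − (0.25 + 0.09 + 0.04) = 0.62.

```python
import random
from collections import Counter
from typing import Hashable, Sequence


def gini(labels: Sequence[Hashable]) -> float:
    """Exact Gini impurity: 1 - sum(p_i^2)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def simulated_misclassification(labels: Sequence[Hashable],
                                trials: int = 100_000, seed: int = 0) -> float:
    """Estimate how often a randomly drawn label disagrees with a randomly drawn sample.

    Both draws are uniform over the node's labels (with replacement), so the
    guessed label follows the node's class distribution.
    """
    rng = random.Random(seed)
    wrong = sum(rng.choice(labels) != rng.choice(labels) for _ in range(trials))
    return wrong / trials


if __name__ == "__main__":
    node = ["a"] * 5 + ["b"] * 3 + ["c"] * 2
    print(round(gini(node), 3))                          # 0.62
    print(round(simulated_misclassification(node), 3))  # approximately 0.62
```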

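
For two classes, both measures depend only on the fraction p of samples in one class, which makes their scales easy to compare: both are 0 for a pure node and peak at p = 0.5, where the Gini index is 0.5 and entropy is 1 bit. This is why the Gini index never reaches 1 for a binary problem. The helper names here are illustrative.

```python
import math


def binary_gini(p: float) -> float:
    """Gini impurity of a two-class node with class-1 fraction p, equal to 2p(1 - p)."""
    return 1.0 - p * p - (1.0 - p) * (1.0 - p)


def binary_entropy(p: float) -> float:
    """Entropy in bits of a two-class node; defined as 0 when p is 0 or 1."""
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


if __name__ == "__main__":
    # Print both curves side by side for a few class fractions.
    for p in (0.0, 0.1, 0.25, 0.5, 0.75, 0.9, 1.0):
        print(f"p={p:.2f}  gini={binary_gini(p):.3f}  entropy={binary_entropy(p):.3f}")
```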
