What Is The Measuring Instrument Sensitivity

When referring to sample quality, you want to evaluate the accuracy and precision of your measurement. This page covers the difference between accuracy, precision, resolution, and sensitivity as applied to a measurement system, including data acquisition devices.

Accuracy is how well the measurements match up to a standard. The sensitivity of measurement is a measure of the change in instrument output that occurs when the quantity being measured changes by a given amount. It is defined as the ratio of the change in the output of an instrument to the change in the value of the quantity being measured. Sensitivity can therefore be stated either as a coefficient or as a threshold. As a threshold, it describes the smallest absolute amount of change that can be detected by a measurement, often expressed in terms of millivolts, microhms, or tenths.
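The ratio definition of sensitivity can be illustrated with a short calculation. The sketch below is only an illustration under stated assumptions: the function name `static_sensitivity`, the least-squares fit, and the calibration readings (temperature in, millivolts out) are all hypothetical and do not come from any of the sources listed on this page.

```python
from statistics import mean


def static_sensitivity(inputs, outputs):
    """Estimate sensitivity as the least-squares slope of output vs. input.

    The result is in output units per input unit (here, mV per degC).
    """
    if len(inputs) != len(outputs) or len(inputs) < 2:
        raise ValueError("need at least two paired readings")
    x_bar, y_bar = mean(inputs), mean(outputs)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in zip(inputs, outputs))
    denominator = sum((x - x_bar) ** 2 for x in inputs)
    if denominator == 0:
        raise ValueError("input values must not all be identical")
    return numerator / denominator


# Hypothetical calibration data (assumed values, for illustration only)
temperature_c = [0.0, 25.0, 50.0, 75.0, 100.0]  # measured quantity, degC
output_mv = [0.0, 1.0, 2.0, 3.0, 4.0]           # instrument output, mV

sensitivity = static_sensitivity(temperature_c, output_mv)
print(f"{sensitivity:.3f} mV/degC")
```

For a perfectly linear instrument the slope equals the change in output divided by the change in input between any two points; fitting a line is one reasonable choice when real readings carry noise.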

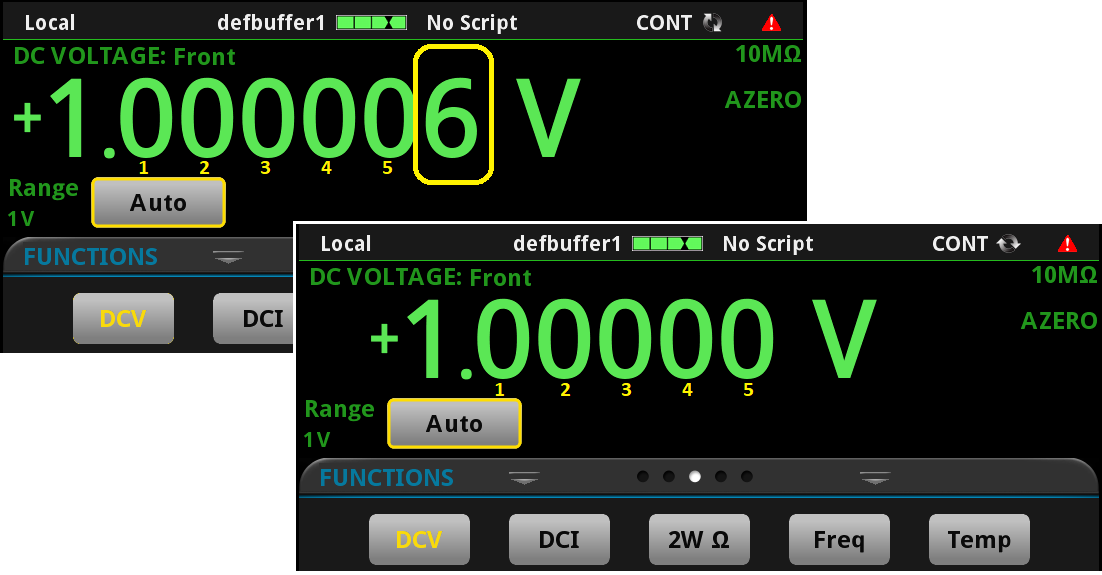

from www.tek.com


What is the Difference Between Accuracy, Resolution, and Sensitivity


From www.slideserve.com

PPT Instrumentation and Measurements PowerPoint Presentation, free

From www.dreamstime.com

Sensitivity Level Meter, Measuring Scale. Sensitivity Level Speedometer

From www.slideserve.com

PPT Instrument QC and Qualification PowerPoint Presentation, free

From www.slideserve.com

PPT Instrumentation and Measurements PowerPoint Presentation, free

From www.researchgate.net

(PDF) A Measuring Instrument for Ethical Sensitivity in the Therapeutic

From www.youtube.com

DERIVATION OF DIFFLECTION SENSITIVITY(ELECTRONIC MEASUREMENTS AND

From www.aaronswansonpt.com

Sensitivity and Specificity

From mech-engg33.blogspot.com

Static Sensitivity of measurements Definition Example Explanation

From www.youtube.com

Strain Gage Sensitivity (with a good instrument) YouTube

From www.youtube.com

System (Sensor) Sensitivity with Practice Problem Bioinstrumentation

From learnmech.com

Some important terminologies used in measurement

From www.dreamstime.com

Sensitivity Measuring Device with Arrow and Scale. Stock Vector

From www.slideserve.com

PPT Instrument Characteristics PowerPoint Presentation, free download

From www.pinterest.com

25 Types of Measuring Instruments and Their Uses [with Pictures & Names

From pandai.me

The Use of Measuring Instruments, Accuracy, Consistency, Sensitivity

From www.dreamstime.com

Sensitivity Measuring Device with Arrow and Scale. Stock Vector

From www.slideserve.com

PPT Instrumentation and Measurements PowerPoint Presentation, free

From gcsephysicsninja.com

15. Sensitivity, range and linearity of measuring instruments

From app.pandai.org

The use of measuring instruments, accuracy, consistency, sensitivity

From slidetodoc.com

RESEARCH METHODS Lecture 18 CRITERIA FOR GOOD MEASUREMENT

From www.slideserve.com

PPT Instrumentation and Measurements PowerPoint Presentation, free

From www.tek.com

What is the Difference Between Accuracy, Resolution, and Sensitivity

From www.dreamstime.com

Sensitivity Measuring Device with Arrow and Scale. 3D Render Stock

From www.researchgate.net

Sensitivity functions for each instrument in (a) the vertical direction

From www.slideserve.com

PPT PHYSICS PowerPoint Presentation, free download ID5747323

From www.researchgate.net

Estimated instrument sensitivity for different concepts, compared to

From www.researchgate.net

Properties and sensitivity of the measuring devices Download Table

From www.researchgate.net

Schematic of instrument sensitivity calibration. Download Scientific

From www.youtube.com

Sensitivity Accuracy Precision and Resolution Value in Instrumentation

From www.quesba.com

What is the sensitivity of an instrument whose output is...get 4

From www.slideserve.com

PPT Electrical Measuring Instrument & Machines PowerPoint

From www.youtube.com

scienceform1 The Use of Measuring Instruments, Accuracy, Consistency

From www.researchgate.net

Sensitivity of the measuring system. Download Scientific Diagram

From www.researchgate.net

Instrument Sensitivity and Specificity Download Table

From www.researchgate.net

The sensitivity measuring device for experiment. Download Scientific