Spark Take Function

This PySpark tutorial discusses how to display the top and bottom rows of a PySpark DataFrame using head(), tail(), and first(), alongside take(). PySpark DataFrame's take(num) method returns the first num rows as a list of Row objects; for example, df.take(2) returns the first 2 rows of the DataFrame. On an RDD, take(num) takes the first num elements of the RDD. It works by first scanning one partition and then using the results from that partition to estimate how many additional partitions it needs to scan to satisfy the limit.

Spark provides two main methods to access the first n rows of a DataFrame or RDD. A common scenario is accessing the first 100 rows of a Spark DataFrame and writing the result back to a CSV file, which raises the question of why take(100) is basically instant whereas df.limit(100).repartition(1) is not.

In Apache Spark, count(), isEmpty(), and take(n) are a few of the action methods, each used for a different purpose. For random sampling rather than the first rows, PySpark provides the pyspark.sql.DataFrame.sample(), pyspark.sql.DataFrame.sampleBy(), RDD.sample(), and RDD.takeSample() methods.
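The "scan one partition first" behaviour of take() can be pictured with a small pure-Python model. This is only a sketch under stated assumptions, not Spark's actual implementation: partitions are modelled as plain lists, and the name `take_first`, the `scale_up` parameter, and the 1.5 headroom factor are illustrative choices.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def take_first(
    partitions: Sequence[Sequence[T]], num: int, scale_up: int = 4
) -> Tuple[List[T], int]:
    """Simplified model of take(num).

    Returns the first `num` elements and how many partitions were scanned.
    `partitions` stands in for a distributed dataset (hypothetical model).
    """
    collected: List[T] = []
    scanned = 0
    total = len(partitions)

    while len(collected) < num and scanned < total:
        if scanned == 0:
            # Start with a single partition.
            to_scan = 1
        elif not collected:
            # Nothing found so far: grow the batch quickly.
            to_scan = scanned * scale_up
        else:
            # Estimate how many more partitions are needed from the rows
            # seen so far, with 50% headroom (assumed factor), capped by
            # the growth limit.
            estimate = int(1.5 * num * scanned / len(collected)) - scanned
            to_scan = max(1, min(estimate, scanned * scale_up))

        # Read the next batch of partitions, keeping only what is still needed.
        for part in partitions[scanned:scanned + to_scan]:
            needed = num - len(collected)
            collected.extend(part[:needed])
        scanned = min(scanned + to_scan, total)

    return collected, scanned


# Eight partitions of ten rows each.
parts = [list(range(i * 10, i * 10 + 10)) for i in range(8)]
rows, scanned = take_first(parts, 3)
print(rows, scanned)  # the first partition alone satisfies the request
```

In this model a small request is answered from the first partition alone, which is consistent with take() returning quickly for small n, while a request larger than the dataset ends up scanning every partition.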

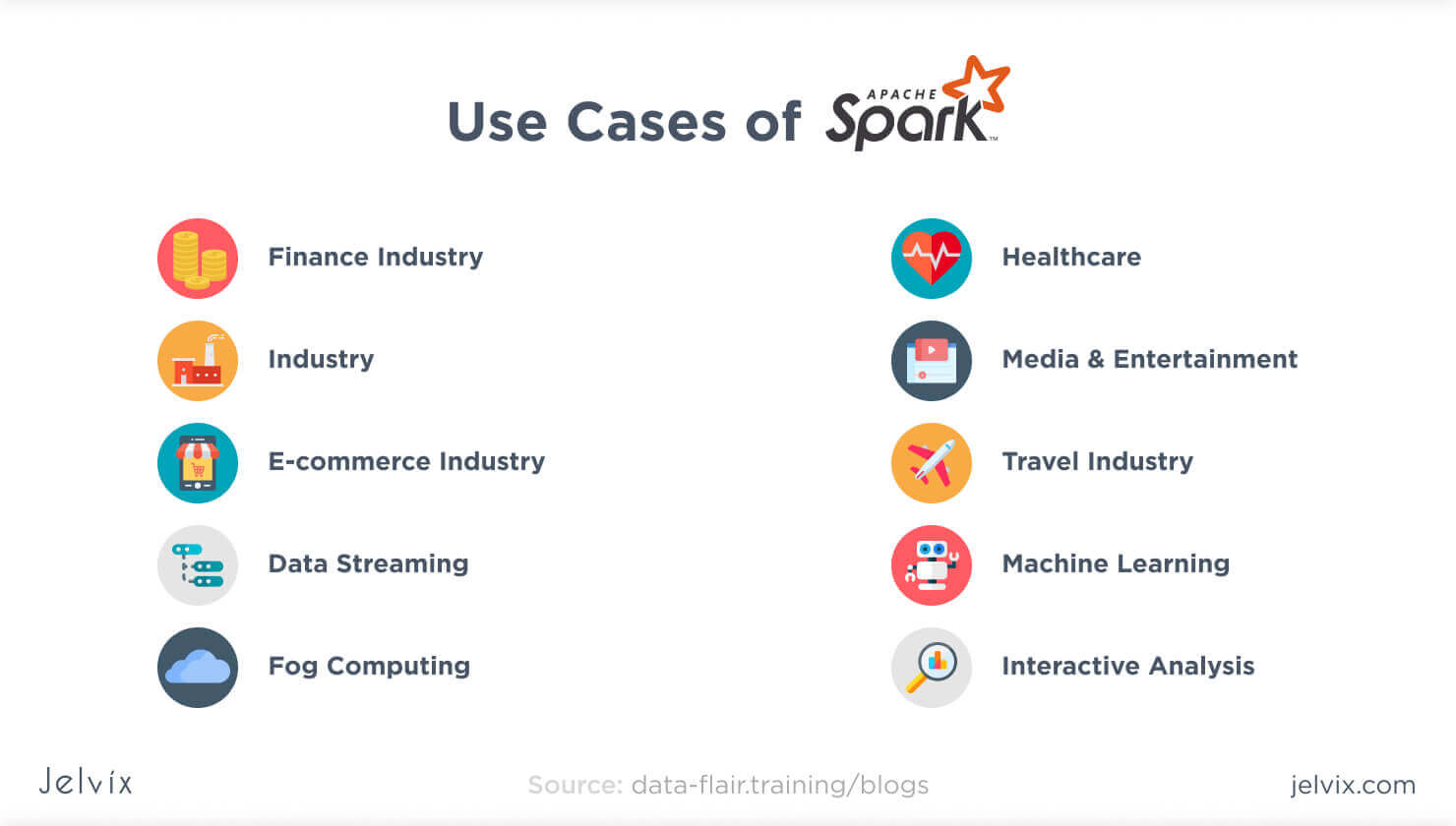

from jelvix.com

Spark vs Hadoop What to Choose to Process Big Data

From www.databricks.com

What's new for Spark SQL in Apache Spark 1.3 Databricks Blog

From subscription.packtpub.com

Apache Spark Quick Start Guide

From www.youtube.com

spark functions [explode and explode_outer] YouTube

From i-sparkplug.com

What is the function of a spark plugs?

From techvidvan.com

SparkContext In Apache Spark Entry Point to Spark Core TechVidvan

From sparkbyexamples.com

Spark Window Functions with Examples Spark By {Examples}

From www.youtube.com

Apache Spark 2 Spark SQL Analytics Functions or Windowing Functions

From www.simplilearn.com

Spark Parallelize The Essential Element of Spark

From slideplayer.com

Spark with R Martijn Tennekes ppt download

From databricks.com

Apache Spark Key Terms, Explained The Databricks Blog

From techvidvan.com

Spark Tutorial Apache Spark Introduction for Beginners TechVidvan

From www.youtube.com

What are Spark builtin functions and how to use them? YouTube

From www.databricks.com

Introducing Window Functions in Spark SQL Databricks Blog

From www.simplilearn.com

Implementation of Spark Applications Tutorial Simplilearn

From data-flair.training

How Apache Spark Works Runtime Spark Architecture DataFlair

From www.youtube.com

Spark Scenario Based Question Spark SQL Functions Coalesce

From typefully.com

Spark's Distributed Execution Prashant

From sparkbyexamples.com

Use length function in substring in Spark Spark By {Examples}

From medium.com

Spark Joins for Dummies. Practical examples of using join in… by

From thecodinginterface.com

Example Driven High Level Overview of Spark with Python The Coding

From sparkbyexamples.com

Spark Most Used JSON Functions with Examples Spark By {Examples}

From mindmajix.com

What is Spark SQL Spark SQL Tutorial

From autosourcepk.blogspot.com

Auto source SPARK PLUG FUNCTIONS, CONSTRUCTION, WORKING PRINCIPLE AND

From izhangzhihao.github.io

Spark The Definitive Guide In Short — MyNotes

From flametree.djnavarro.net

Using spark functions • flametree

From sparkbyexamples.com

Spark SQL Functions Archives Page 5 of 5 Spark By {Examples}

From www.youtube.com

spark functions [split] work with spark YouTube

From techvidvan.com

Apache Spark Transformation Operations TechVidvan

From newbedev.com

Spark functions vs UDF performance?

From sparkbyexamples.com

Apache Spark Spark by {Examples}

From techvidvan.com

Apache Spark SQL Tutorial Quick Guide For Beginners TechVidvan

From www.learntospark.com

How to Find Duplicates in Spark Apache Spark Window Function

From sparkbyexamples.com

Spark split() function to convert string to Array column Spark By