Error Timer-Driven Process Thread-10

I'm using Apache NiFi to ingest and preprocess some CSV files, but when it runs for a long time, it always fails. Some common reasons for running out of heap memory include a high-volume dataflow with lots of FlowFiles active.

Hi, I am loading records from a CSV file into Oracle 12c. Everything worked fine, but after a while I received the following error: … The PutSQL processor comes up with the error message "failed to update".

After almost exactly one week of running NiFi 1.18.0, previously configured GetSmbFile processors are reporting that they cannot …

When using PutKudu, we have the option of automatically updating the Kudu table schema to match the data's schema by setting …

The error you provided says that the AmbariReportingTask you have running cannot connect to the Ambari Metrics Collector.
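
The thread name in the title is not the error itself. As we understand NiFi 1.x, timer-driven processors run on a shared worker pool. Its threads are named "Timer-Driven Process Thread-N", and the pool size comes from the "Maximum Timer Driven Thread Count" controller setting (10 by default). So "Thread-10" only tells you which worker logged the failure, and the message after it carries the actual cause. The sketch below is plain `java.util.concurrent`, not NiFi source code. The class `TimerDrivenPoolSketch` and the method `namedFactory` are hypothetical and only illustrate how names like this arise.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class TimerDrivenPoolSketch {

    // Hypothetical factory mimicking a "<prefix> Thread-<n>" naming pattern.
    static ThreadFactory namedFactory(String prefix) {
        AtomicInteger counter = new AtomicInteger(0);
        return runnable -> {
            Thread t = new Thread(runnable);
            t.setName(prefix + " Thread-" + counter.incrementAndGet());
            t.setDaemon(true); // do not keep the JVM alive after main returns
            return t;
        };
    }

    public static void main(String[] args) throws InterruptedException {
        int maxTimerDrivenThreads = 10; // assumed pool size, for illustration only
        ScheduledExecutorService pool = Executors.newScheduledThreadPool(
                maxTimerDrivenThreads, namedFactory("Timer-Driven Process"));

        // Each scheduled task prints the name of whichever worker ran it.
        for (int i = 0; i < 3; i++) {
            pool.scheduleWithFixedDelay(
                    () -> System.out.println(Thread.currentThread().getName() + " ran a task"),
                    0, 100, TimeUnit.MILLISECONDS);
        }
        Thread.sleep(350);
        pool.shutdownNow();
    }
}
```

Which numbered worker picks up a given task varies from run to run. A log line from "Thread-10" therefore says nothing about the tenth processor or the tenth attempt.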

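For the long-running CSV flow that eventually fails, it helps to watch used heap against the JVM maximum (`-Xmx`). NiFi's own heap limits are set through the `-Xms`/`-Xmx` `java.arg` entries in `conf/bootstrap.conf`, and the UI's System Diagnostics view reports heap usage. The standalone sketch below uses the standard `java.lang.management` API to show what those numbers mean. It measures only the JVM it runs in, not a separate NiFi process. The class `HeapCheck`, the method `isHeapPressure`, and the 85% threshold are hypothetical choices.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;

public class HeapCheck {

    // Used heap as a fraction of the maximum heap; -1.0 when the maximum is undefined.
    static double heapUsedFraction(MemoryUsage usage) {
        long max = usage.getMax();
        if (max <= 0) {
            return -1.0; // getMax() returns -1 when no maximum is defined
        }
        return (double) usage.getUsed() / max;
    }

    // Hypothetical threshold check: true when usage is known and at or above the threshold.
    static boolean isHeapPressure(MemoryUsage usage, double threshold) {
        double fraction = heapUsedFraction(usage);
        return fraction >= 0 && fraction >= threshold;
    }

    public static void main(String[] args) {
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        System.out.printf("heap used=%d MiB, max=%d MiB, fraction=%.2f%n",
                heap.getUsed() / (1024 * 1024),
                heap.getMax() / (1024 * 1024),
                heapUsedFraction(heap));
        if (isHeapPressure(heap, 0.85)) {
            System.out.println("Warning: heap usage is at or above 85% of the maximum");
        }
    }
}
```

A fraction that keeps climbing while many FlowFiles sit in queues matches the "high-volume dataflow with lots of FlowFiles active" cause mentioned above.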
from dzone.com

After almost exactly 1 week of running 1.18.0, previously configured getsmbfile processes are reporting that they cannot. High volume dataflow with lots of flowfiles active. Everything worked fine, but after a while i received the following error: When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. So some common reason for running out of heap memory include: The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari metrics collector. I´m using apache nifi to ingest and preprocess some csv files, but when runing during a long time, it always fails. The putsql processor comes up with error message failed to update. Hi, i am loading records from a csv file into oracle 12c.

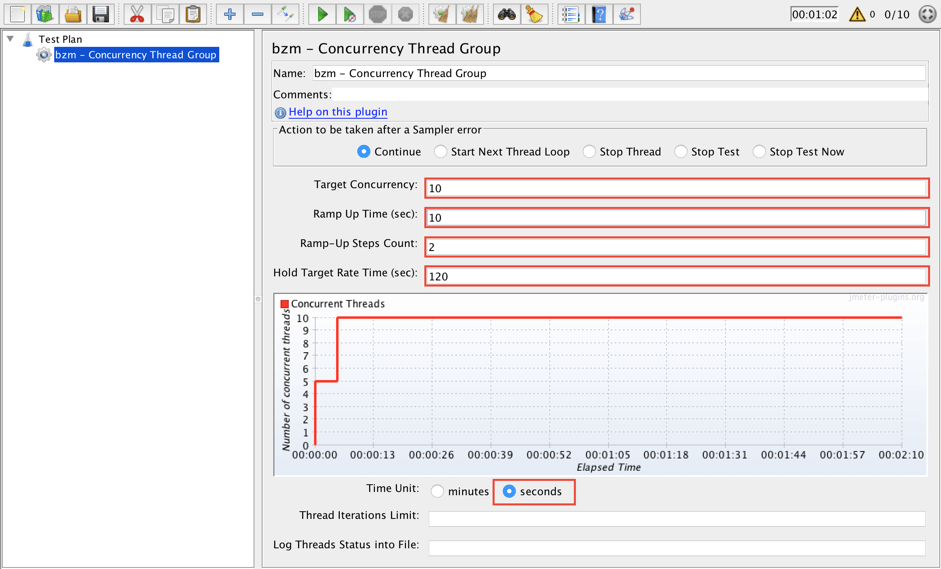

Using JMeter's Throughput Shaping Timer Plugin DZone

Error Timer-Driven Process Thread-10 So some common reason for running out of heap memory include: I´m using apache nifi to ingest and preprocess some csv files, but when runing during a long time, it always fails. When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. After almost exactly 1 week of running 1.18.0, previously configured getsmbfile processes are reporting that they cannot. The putsql processor comes up with error message failed to update. Everything worked fine, but after a while i received the following error: Hi, i am loading records from a csv file into oracle 12c. High volume dataflow with lots of flowfiles active. The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari metrics collector. So some common reason for running out of heap memory include:

From www.researchgate.net

Flow chart of the 3 parallel threads used. a) VDZ timer b) GPS and c Error Timer-Driven Process Thread-10 The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari metrics collector. When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. The putsql processor comes up with error message failed to update. I´m using apache nifi to ingest and preprocess. Error Timer-Driven Process Thread-10.

From openport.net

How to get process threads in Windows 10 C/C++ Source Code Included Error Timer-Driven Process Thread-10 So some common reason for running out of heap memory include: After almost exactly 1 week of running 1.18.0, previously configured getsmbfile processes are reporting that they cannot. When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. High volume dataflow with lots of flowfiles active. The error you. Error Timer-Driven Process Thread-10.

From www.youtube.com

LabVIEW code Timerdriven background process using interrupt request Error Timer-Driven Process Thread-10 Everything worked fine, but after a while i received the following error: The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari metrics collector. After almost exactly 1 week of running 1.18.0, previously configured getsmbfile processes are reporting that they cannot. When using putkudu, we have the option of automatically updating the. Error Timer-Driven Process Thread-10.

From medium.com

Event Loop and Worker Threads Unlocking Asynchronous Processing in Error Timer-Driven Process Thread-10 High volume dataflow with lots of flowfiles active. After almost exactly 1 week of running 1.18.0, previously configured getsmbfile processes are reporting that they cannot. Hi, i am loading records from a csv file into oracle 12c. When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. The putsql. Error Timer-Driven Process Thread-10.

From stackoverflow.com

jmeter Concurrency Thread Group and Throughput shaping timer Stack Error Timer-Driven Process Thread-10 The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari metrics collector. When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. So some common reason for running out of heap memory include: The putsql processor comes up with error message. Error Timer-Driven Process Thread-10.

From stackoverflow.com

put Marklogic using Nifi throws error "NullPointer Exception" Stack Error Timer-Driven Process Thread-10 So some common reason for running out of heap memory include: The putsql processor comes up with error message failed to update. After almost exactly 1 week of running 1.18.0, previously configured getsmbfile processes are reporting that they cannot. Hi, i am loading records from a csv file into oracle 12c. I´m using apache nifi to ingest and preprocess some. Error Timer-Driven Process Thread-10.

From www.slideserve.com

PPT Clock Driven Schedulers PowerPoint Presentation, free download Error Timer-Driven Process Thread-10 After almost exactly 1 week of running 1.18.0, previously configured getsmbfile processes are reporting that they cannot. High volume dataflow with lots of flowfiles active. I´m using apache nifi to ingest and preprocess some csv files, but when runing during a long time, it always fails. Hi, i am loading records from a csv file into oracle 12c. So some. Error Timer-Driven Process Thread-10.

From slideplayer.com

GUIs and Events CSE 3541/5541 Matt Boggus. ppt download Error Timer-Driven Process Thread-10 So some common reason for running out of heap memory include: I´m using apache nifi to ingest and preprocess some csv files, but when runing during a long time, it always fails. The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari metrics collector. After almost exactly 1 week of running 1.18.0,. Error Timer-Driven Process Thread-10.

From www.geeksforgeeks.org

Node Event Loop Error Timer-Driven Process Thread-10 So some common reason for running out of heap memory include: After almost exactly 1 week of running 1.18.0, previously configured getsmbfile processes are reporting that they cannot. High volume dataflow with lots of flowfiles active. I´m using apache nifi to ingest and preprocess some csv files, but when runing during a long time, it always fails. When using putkudu,. Error Timer-Driven Process Thread-10.

From openport.net

How to get process threads in Windows 10 C/C++ Source Code Included Error Timer-Driven Process Thread-10 So some common reason for running out of heap memory include: The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari metrics collector. Hi, i am loading records from a csv file into oracle 12c. I´m using apache nifi to ingest and preprocess some csv files, but when runing during a long. Error Timer-Driven Process Thread-10.

From www.mathworks.com

TimerDriven Task MATLAB & Simulink Error Timer-Driven Process Thread-10 High volume dataflow with lots of flowfiles active. The putsql processor comes up with error message failed to update. I´m using apache nifi to ingest and preprocess some csv files, but when runing during a long time, it always fails. When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by. Error Timer-Driven Process Thread-10.

From www.threads.net

One of the main things I have learned from the trump cult is that a Error Timer-Driven Process Thread-10 The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari metrics collector. I´m using apache nifi to ingest and preprocess some csv files, but when runing during a long time, it always fails. Hi, i am loading records from a csv file into oracle 12c. Everything worked fine, but after a while. Error Timer-Driven Process Thread-10.

From blog.csdn.net

NIFI源码学习(二)处理器执行与调度_maximum timer driven thread countCSDN博客 Error Timer-Driven Process Thread-10 When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. Everything worked fine, but after a while i received the following error: The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari metrics collector. After almost exactly 1 week of running. Error Timer-Driven Process Thread-10.

From www.pinterest.com

PLC Timers Program Ladder logic, Timers, Timer Error Timer-Driven Process Thread-10 After almost exactly 1 week of running 1.18.0, previously configured getsmbfile processes are reporting that they cannot. The putsql processor comes up with error message failed to update. Hi, i am loading records from a csv file into oracle 12c. When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by. Error Timer-Driven Process Thread-10.

From stackoverflow.com

json NiFi Update Record escapeJson Record Path Not Working Stack Error Timer-Driven Process Thread-10 So some common reason for running out of heap memory include: Hi, i am loading records from a csv file into oracle 12c. I´m using apache nifi to ingest and preprocess some csv files, but when runing during a long time, it always fails. After almost exactly 1 week of running 1.18.0, previously configured getsmbfile processes are reporting that they. Error Timer-Driven Process Thread-10.

From stackoverflow.com

groovy How to use 'DBCPConnectionPoolLookup' in 'ExecuteGroovyScript Error Timer-Driven Process Thread-10 So some common reason for running out of heap memory include: After almost exactly 1 week of running 1.18.0, previously configured getsmbfile processes are reporting that they cannot. High volume dataflow with lots of flowfiles active. Hi, i am loading records from a csv file into oracle 12c. The putsql processor comes up with error message failed to update. I´m. Error Timer-Driven Process Thread-10.

From www.artsthread.com

Chaotic timer Timer driven by user’s stress level manifesting chaotic Error Timer-Driven Process Thread-10 The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari metrics collector. Everything worked fine, but after a while i received the following error: When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. So some common reason for running out. Error Timer-Driven Process Thread-10.

From community.cloudera.com

Nifi PutEmail Error 5.7.3 STARTTLS is required to... Cloudera Error Timer-Driven Process Thread-10 When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. So some common reason for running out of heap memory include: The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari metrics collector. I´m using apache nifi to ingest and preprocess. Error Timer-Driven Process Thread-10.

From dzone.com

Using JMeter's Throughput Shaping Timer Plugin DZone Error Timer-Driven Process Thread-10 High volume dataflow with lots of flowfiles active. Hi, i am loading records from a csv file into oracle 12c. I´m using apache nifi to ingest and preprocess some csv files, but when runing during a long time, it always fails. So some common reason for running out of heap memory include: After almost exactly 1 week of running 1.18.0,. Error Timer-Driven Process Thread-10.

From www.electricalengineering.xyz

Timer Instruction in PLCs PLC Ladder Programming Language Error Timer-Driven Process Thread-10 When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari metrics collector. High volume dataflow with lots of flowfiles active. So some common reason for running out of heap memory include:. Error Timer-Driven Process Thread-10.

From exoieuqni.blob.core.windows.net

Thread And Process In C at Brian Schlater blog Error Timer-Driven Process Thread-10 Hi, i am loading records from a csv file into oracle 12c. I´m using apache nifi to ingest and preprocess some csv files, but when runing during a long time, it always fails. The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari metrics collector. So some common reason for running out. Error Timer-Driven Process Thread-10.

From owlcation.com

System Threading Timer in C Explained With Examples Owlcation Error Timer-Driven Process Thread-10 So some common reason for running out of heap memory include: Hi, i am loading records from a csv file into oracle 12c. Everything worked fine, but after a while i received the following error: I´m using apache nifi to ingest and preprocess some csv files, but when runing during a long time, it always fails. High volume dataflow with. Error Timer-Driven Process Thread-10.

From hxeidydvd.blob.core.windows.net

What Is Core And Thread In A Processor at Rhonda Welch blog Error Timer-Driven Process Thread-10 I´m using apache nifi to ingest and preprocess some csv files, but when runing during a long time, it always fails. High volume dataflow with lots of flowfiles active. The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari metrics collector. Hi, i am loading records from a csv file into oracle. Error Timer-Driven Process Thread-10.

From www.mitre10.co.nz

Jackson Timer Timers Mitre 10™ Error Timer-Driven Process Thread-10 The putsql processor comes up with error message failed to update. When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. So some common reason for running out of heap memory include: After almost exactly 1 week of running 1.18.0, previously configured getsmbfile processes are reporting that they cannot.. Error Timer-Driven Process Thread-10.

From blog.csdn.net

NIFI源码学习(二)处理器执行与调度_maximum timer driven thread countCSDN博客 Error Timer-Driven Process Thread-10 Hi, i am loading records from a csv file into oracle 12c. The putsql processor comes up with error message failed to update. So some common reason for running out of heap memory include: Everything worked fine, but after a while i received the following error: I´m using apache nifi to ingest and preprocess some csv files, but when runing. Error Timer-Driven Process Thread-10.

From www.artsthread.com

Chaotic timer Timer driven by user’s stress level manifesting chaotic Error Timer-Driven Process Thread-10 After almost exactly 1 week of running 1.18.0, previously configured getsmbfile processes are reporting that they cannot. The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari metrics collector. When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. Hi, i. Error Timer-Driven Process Thread-10.

From danielmangum.com

RISCV Bytes Timer Interrupts · Daniel Mangum Error Timer-Driven Process Thread-10 Everything worked fine, but after a while i received the following error: When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. High volume dataflow with lots of flowfiles active. I´m using apache nifi to ingest and preprocess some csv files, but when runing during a long time, it. Error Timer-Driven Process Thread-10.

From slideplayer.com

Overview of the Lab 2 Assignment Multicore RealTime Tasks ppt download Error Timer-Driven Process Thread-10 High volume dataflow with lots of flowfiles active. The putsql processor comes up with error message failed to update. Everything worked fine, but after a while i received the following error: When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. So some common reason for running out of. Error Timer-Driven Process Thread-10.

From owlcation.com

System Threading Timer in C Explained With Examples Owlcation Error Timer-Driven Process Thread-10 Everything worked fine, but after a while i received the following error: So some common reason for running out of heap memory include: High volume dataflow with lots of flowfiles active. The putsql processor comes up with error message failed to update. I´m using apache nifi to ingest and preprocess some csv files, but when runing during a long time,. Error Timer-Driven Process Thread-10.

From www.seleniumeasy.com

JMeter Timers Constant timer Example Selenium Easy Error Timer-Driven Process Thread-10 When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. Hi, i am loading records from a csv file into oracle 12c. After almost exactly 1 week of running 1.18.0, previously configured getsmbfile processes are reporting that they cannot. Everything worked fine, but after a while i received the. Error Timer-Driven Process Thread-10.

From www.youtube.com

C Threads(4) Using System Threading Timer in YouTube Error Timer-Driven Process Thread-10 When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. I´m using apache nifi to ingest and preprocess some csv files, but when runing during a long time, it always fails. The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari. Error Timer-Driven Process Thread-10.

From owlcation.com

System Threading Timer in C Explained With Examples Owlcation Error Timer-Driven Process Thread-10 High volume dataflow with lots of flowfiles active. The putsql processor comes up with error message failed to update. After almost exactly 1 week of running 1.18.0, previously configured getsmbfile processes are reporting that they cannot. When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. So some common. Error Timer-Driven Process Thread-10.

From www.software-testing-tutorials-automation.com

JMeter Constant Throughput Timer Example Error Timer-Driven Process Thread-10 High volume dataflow with lots of flowfiles active. I´m using apache nifi to ingest and preprocess some csv files, but when runing during a long time, it always fails. Hi, i am loading records from a csv file into oracle 12c. The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari metrics. Error Timer-Driven Process Thread-10.

From programmerah.com

The showdialog() method in thread/threading. Timer/task reported an Error Timer-Driven Process Thread-10 Hi, i am loading records from a csv file into oracle 12c. So some common reason for running out of heap memory include: High volume dataflow with lots of flowfiles active. After almost exactly 1 week of running 1.18.0, previously configured getsmbfile processes are reporting that they cannot. The error you provided is saying that the ambarireportingtask you have running. Error Timer-Driven Process Thread-10.

From www.reddit.com

The timer does not seem to be working. It runs once if I call the Error Timer-Driven Process Thread-10 The error you provided is saying that the ambarireportingtask you have running can not connect to the ambari metrics collector. Everything worked fine, but after a while i received the following error: When using putkudu, we have the option of automatically updating the kudu table schema to match the data's schema by setting. After almost exactly 1 week of running. Error Timer-Driven Process Thread-10.
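When AmbariReportingTask cannot connect to the Ambari Metrics Collector, a first step is to confirm that the NiFi host can open a TCP connection to the collector URL configured on the task. The sketch below does only that. A successful connect does not prove the HTTP endpoint accepts metrics, and a failure can come from DNS, firewall, or a stopped collector. The class `CollectorReachability` is hypothetical. The URL `http://localhost:6188/ws/v1/timeline/metrics` is an assumed default and should be replaced with the value actually configured.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.net.URI;

public class CollectorReachability {

    // Tries a plain TCP connect to the URL's host and port within timeoutMillis.
    static boolean canConnect(String url, int timeoutMillis) {
        URI uri = URI.create(url);
        String host = uri.getHost();
        if (host == null) {
            return false; // not a URL with a usable host component
        }
        int port = uri.getPort() != -1
                ? uri.getPort()
                : ("https".equalsIgnoreCase(uri.getScheme()) ? 443 : 80);
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Covers refused connections, timeouts, and unresolvable hosts.
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed default collector URL; pass the configured URL as the first argument.
        String url = args.length > 0 ? args[0] : "http://localhost:6188/ws/v1/timeline/metrics";
        System.out.println(url + " reachable: " + canConnect(url, 2000));
    }
}
```

Run it on the NiFi node itself, because the reporting task connects from there.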