Torch Nn Embedding Initialization. nn.Embedding is a PyTorch layer that maps indices from a fixed vocabulary to dense vectors of fixed size, known as embeddings. Word embeddings represent words as dense vectors of real numbers that capture their semantics. By default, nn.Embedding weights are initialized randomly from a standard normal distribution, N(0, 1); nn.init.uniform_() from the torch.nn.init module can be used to reinitialize them from a uniform distribution instead. The torch.nn.init module provides functions for initializing neural network parameters with various distributions and methods, and to initialize the weights of a single layer you call one of these functions on that layer's weight. In PyTorch 1.0 there seem to be two ways of initializing an embedding layer from a uniform distribution: calling nn.init.uniform_() on the weight, or calling the in-place uniform_() method on the weight tensor itself.
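A minimal sketch of the lookup and of the two uniform-initialization routes, assuming a recent PyTorch release. The sizes, the seed, and the range [-0.1, 0.1] are hypothetical values chosen only for illustration, not recommendations from the source.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical sizes, chosen only for illustration.
vocab_size, embed_dim = 10, 4

# The weight matrix has one row per vocabulary index.
emb = nn.Embedding(vocab_size, embed_dim)

# Lookup: each index selects the corresponding row of emb.weight.
indices = torch.tensor([1, 3, 3])
vectors = emb(indices)  # shape (3, 4); rows 1 and 2 are identical

# Way 1: the functional initializer from torch.nn.init (works in place).
nn.init.uniform_(emb.weight, a=-0.1, b=0.1)

# Way 2: the in-place tensor method, run without autograd tracking.
with torch.no_grad():
    emb.weight.uniform_(-0.1, 0.1)
```

Both routes overwrite the same parameter in place, so either one alone is enough; the nn.init form is usually the easier one to apply uniformly across several layers.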

From www.tutorialexample.com

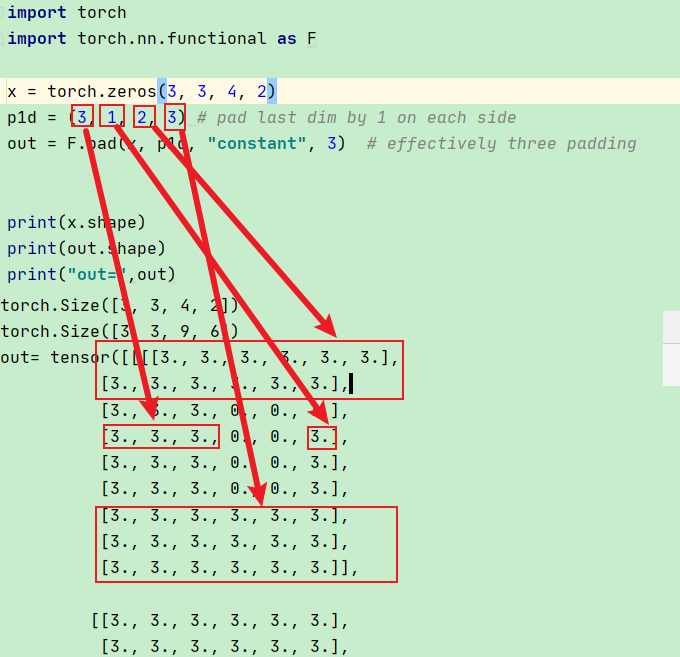

Understand torch.nn.functional.pad() with Examples PyTorch Tutorial

From blog.csdn.net

Understanding nn.Embedding and why nn.Embedding cannot embed a single scalar value_nn.embedding(be CSDN Blog

From www.ppmy.cn

Detailed explanation of the nn.embedding function (pytorch)

From www.researchgate.net

Architecture of NN model used for entity embedding Download

From blog.csdn.net

What is an embedding (encoding an object as a low-dimensional dense vector): how nn.Embedding works in pytorch and how to use it_embedding_dim

From blog.csdn.net

Freezing torch.nn.Embedding()_embedding fixed initialization CSDN Blog

From blog.csdn.net

pytorch notes: torch.nn.Embedding_pytorch embedding weights CSDN Blog

From www.tutorialexample.com

Understand torch.nn.functional.pad() with Examples PyTorch Tutorial

From www.researchgate.net

Brief illustration of the absolute embedding neural network (NN) model

From www.youtube.com

torch.nn.Embedding How embedding weights are updated in

From www.youtube.com

torch.nn.Embedding explained (+ Characterlevel language model) YouTube

From www.developerload.com

[SOLVED] Faster way to do multiple embeddings in PyTorch? DeveloperLoad

From www.researchgate.net

Looplevel representation for torch.nn.Linear(32, 32) through

From www.ppmy.cn

Detailed explanation of the nn.embedding function (pytorch)

From zhuanlan.zhihu.com

torch.nn: Normalization Layers, Zhihu

From blog.51cto.com

[Pytorch Basics Tutorial 28] A brief look at torch.nn.embedding_51CTO Blog_Pytorch tutorial

From www.youtube.com

torch.nn.TransformerEncoderLayer Part 1 Transformer Embedding and

From blog.csdn.net

torch.nn.Embedding parameters explained CSDN Blog

From blog.csdn.net

Understanding nn.embedding_how nn.embedding handles float CSDN Blog

From blog.csdn.net

[Pytorch Learning] nn.Embedding explained and how to use it CSDN Blog

From discuss.pytorch.org

Initialization of the hidden states of torch.nn.lstm vision PyTorch

From zhuanlan.zhihu.com

How to use Torch.nn.Embedding, Zhihu

From blog.csdn.net

torch.nn.Embedding parameters in detail: num_embeddings, embedding_dim_torchembedding CSDN Blog

From www.youtube.com

torch.nn.TransformerDecoderLayer Part 2 Embedding, First MultiHead

From ericpengshuai.github.io

[PyTorch] RNN, 像我这样的人 (People Like Me)

From discuss.pytorch.org

How does nn.Embedding work? PyTorch Forums

From blog.csdn.net

[Pytorch Learning] nn.Embedding explained and how to use it CSDN Blog

From blog.csdn.net

[Detailed explanation] The torch.nn.Fold and torch.nn.Unfold operations_torch.unfold CSDN Blog

From discuss.pytorch.org

Initialization of the hidden states of torch.nn.lstm vision PyTorch

From blog.csdn.net

How torch.nn.embedding works_nn.embedding principle CSDN Blog

From t.zoukankan.com

How the embedding layer torch.nn.embedding is computed in pytorch, 走看看

From www.tutorialexample.com

Understand torch.nn.functional.pad() with Examples PyTorch Tutorial

From blog.csdn.net

[Pytorch Basics Tutorial 28] A brief look at torch.nn.embedding_torch embedding CSDN Blog

From blog.csdn.net

[python functions] Illustrated usage of the torch.nn.Embedding function CSDN Blog

From gotutiyan.hatenablog.com

[Pytorch] A careful guide to using nn.Embedding, gotutiyan’s blog

From blog.csdn.net

[python functions] Illustrated usage of the torch.nn.Embedding function CSDN Blog