Databricks List S3 Files

This article explains how to connect to AWS S3 from Databricks. Connecting an AWS S3 bucket to Databricks can make data processing and analytics easier, faster, and cheaper by building on S3's durable, scalable storage. Access S3 buckets using instance profiles. In this approach, you can directly query the files in the S3 landing bucket using SQL or Spark commands. For this example, we are using data files stored.

A common task is generating a list of all S3 files in a bucket or folder, where the folder often holds on the order of millions of files.

Databricks provides several APIs for listing files in cloud object storage. Most examples in this article focus on using volumes. You can list files on a distributed file system (DBFS, S3, or HDFS) using %fs commands. You can also use the Hadoop API for accessing files on S3 (Spark uses it as well). You can use the utilities to work with files and object storage efficiently.
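As a minimal sketch of listing every file under a bucket or folder, the function below walks a directory tree through any `ls`-style callable. It assumes that each listing entry exposes `path`, `name`, and `size`, and that directory entries have a `name` ending in `/`, which is how `dbutils.fs.ls` reports them. The names `FileEntry`, `walk_files`, `fake_ls`, and the `demo-bucket` paths are hypothetical and exist only for this illustration; the fake listing stands in for real storage so the example runs anywhere.

```python
from collections import namedtuple

# Hypothetical stand-in for the entries an ls-style call returns.
FileEntry = namedtuple("FileEntry", ["path", "name", "size"])


def walk_files(ls, root):
    """Yield every file entry under `root`, descending into subfolders.

    `ls` is any callable that takes a path and returns its direct children.
    Assumption: directory entries have a name ending in "/".
    """
    stack = [root]  # explicit stack avoids recursion limits on deep trees
    while stack:
        current = stack.pop()
        for entry in ls(current):
            if entry.name.endswith("/"):
                stack.append(entry.path)  # folder: visit it later
            else:
                yield entry  # plain file


# In-memory fake of a small bucket layout (illustration only).
_TREE = {
    "s3://demo-bucket/landing/": [
        FileEntry("s3://demo-bucket/landing/a.csv", "a.csv", 10),
        FileEntry("s3://demo-bucket/landing/2024/", "2024/", 0),
    ],
    "s3://demo-bucket/landing/2024/": [
        FileEntry("s3://demo-bucket/landing/2024/b.csv", "b.csv", 20),
    ],
}


def fake_ls(path):
    return _TREE[path]


found = list(walk_files(fake_ls, "s3://demo-bucket/landing/"))
total_bytes = sum(entry.size for entry in found)
```

In a Databricks notebook, the same function could in principle be called as `walk_files(dbutils.fs.ls, "<your S3 path>")`. Because it yields entries lazily, the caller can stream results instead of holding the whole listing in memory, but for folders with millions of files a single-threaded walk like this is likely to be slow.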

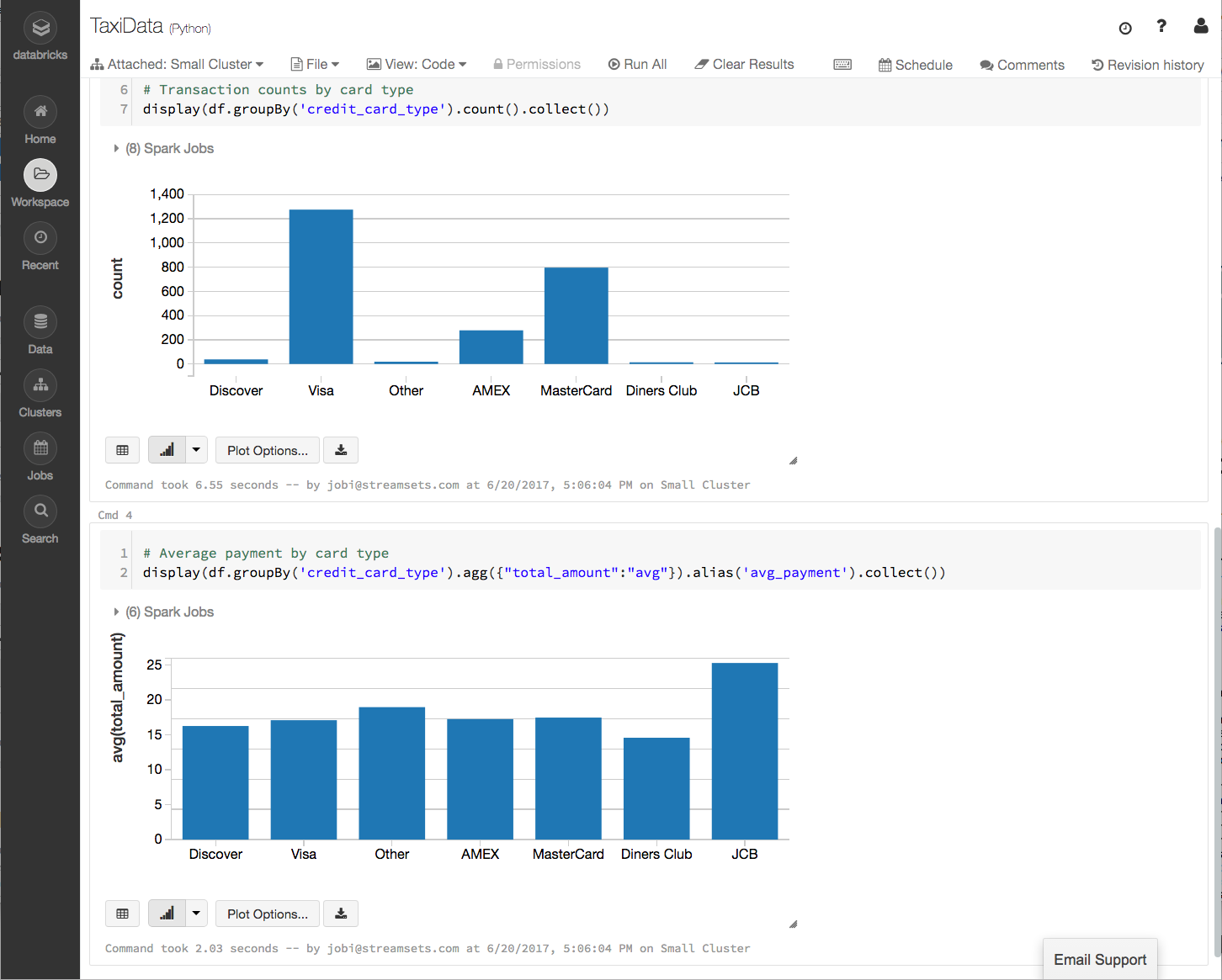

from streamsets.com

Triggering Databricks Notebook Jobs from StreamSets Data Collector
