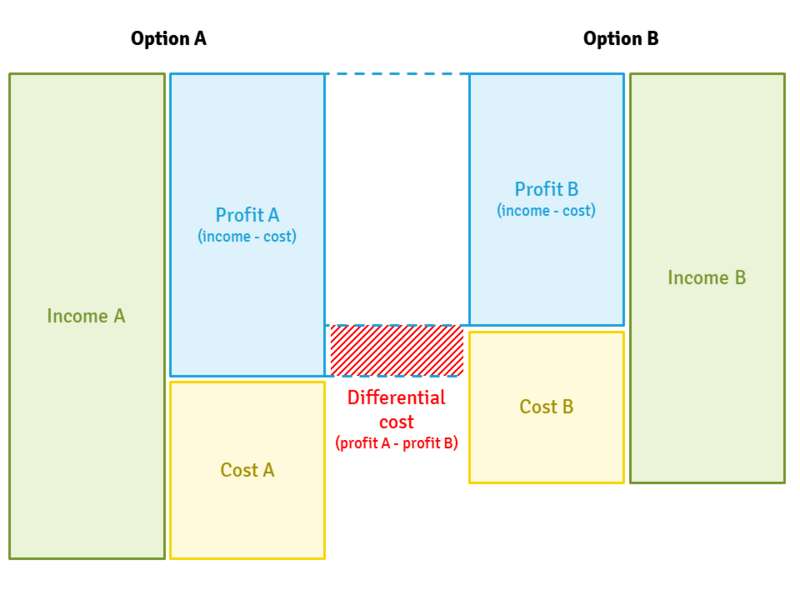

Differential Cost Function

Differential cost refers to the difference between the costs of two alternative decisions. It arises when a business faces several similar options and must choose one of them.

Let f be a monotonic production function. The associated cost function c(w, y) is continuous, concave in w, and monotone nondecreasing in y.

A cost function measures how well a neural network performs on a given training sample relative to the expected output. In logistic regression the hypothesis function is the sigmoid function, so the first important step in differentiating the cost function is finding the gradient of the sigmoid. The partial derivatives of the cost function with respect to the weights and bias are then computed (in $\theta$ notation, the partial derivative with respect to each $\theta_j$), and the weights and bias are updated using these gradients.
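The logistic-regression gradient steps described above can be sketched in plain Python. This is a minimal illustration, not the method of any particular source. It assumes the cost is the mean binary cross-entropy (log loss), which the text does not name explicitly. It also assumes the weights $w_j$ play the role of $\theta_j$, with a separate bias $b$. The function names `sigmoid_grad`, `gradients`, and `gradient_descent_step` are hypothetical. With a sigmoid hypothesis, $\sigma'(z) = \sigma(z)(1 - \sigma(z))$, and the log-loss gradients simplify to $\frac{1}{m}\sum (h - y)\,x_j$ for each weight and $\frac{1}{m}\sum (h - y)$ for the bias.

```python
import math
from typing import List, Tuple


def sigmoid(z: float) -> float:
    """Logistic sigmoid: 1 / (1 + e^(-z)); this is the hypothesis function."""
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z: float) -> float:
    """Derivative of the sigmoid: sigma(z) * (1 - sigma(z))."""
    s = sigmoid(z)
    return s * (1.0 - s)


def predict(w: List[float], b: float, x: List[float]) -> float:
    """Hypothesis h(x) = sigmoid(w . x + b)."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def cost(w: List[float], b: float, X: List[List[float]], y: List[int]) -> float:
    """Assumed cost: mean binary cross-entropy (log loss) over m samples."""
    total = 0.0
    for xi, yi in zip(X, y):
        h = predict(w, b, xi)
        total += -(yi * math.log(h) + (1 - yi) * math.log(1.0 - h))
    return total / len(X)


def gradients(w: List[float], b: float, X: List[List[float]],
              y: List[int]) -> Tuple[List[float], float]:
    """Partial derivatives of the log-loss cost w.r.t. each weight and the bias.

    Because the sigmoid derivative cancels against the log terms, these reduce to
    dJ/dw_j = (1/m) * sum((h - y) * x_j) and dJ/db = (1/m) * sum(h - y).
    """
    m = len(X)
    dw = [0.0] * len(w)
    db = 0.0
    for xi, yi in zip(X, y):
        err = predict(w, b, xi) - yi  # h(x) - y for this sample
        for j, xj in enumerate(xi):
            dw[j] += err * xj
        db += err
    return [g / m for g in dw], db / m


def gradient_descent_step(w: List[float], b: float, X: List[List[float]],
                          y: List[int], lr: float = 0.1) -> Tuple[List[float], float]:
    """Update the weights and bias by moving against their gradients."""
    dw, db = gradients(w, b, X, y)
    return [wj - lr * gj for wj, gj in zip(w, dw)], b - lr * db


if __name__ == "__main__":
    # Toy one-feature dataset (illustrative only).
    X = [[0.0], [1.0], [2.0], [3.0]]
    y = [0, 0, 1, 1]
    w, b = [0.0], 0.0
    for _ in range(1000):
        w, b = gradient_descent_step(w, b, X, y)
    print("w =", w, "b =", b, "cost =", cost(w, b, X, y))
```

Computing the gradients analytically, rather than by numerical differentiation, is what makes finding the sigmoid's derivative the key first step. A quick sanity check is to compare `gradients` against a central finite difference of `cost`.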
