Spark Find Number Of Partitions

How does Spark partitioning work? Spark distributes data across the nodes of a cluster using one of several partitioning methods. It generally splits an RDD into as many partitions as the cluster can process in parallel, so that each executor gets a fair share of the tasks. For RDDs created with operations such as `parallelize`, the default (`spark.default.parallelism`) on most cluster managers is the total number of cores on all executor nodes or 2, whichever is larger.

How to calculate the Spark partition size: the average size of a partition is the total size of the data divided by the number of partitions, so knowing the partition count is the first step.

There are four ways to get the current number of partitions of a Spark DataFrame. The most direct is the `getNumPartitions` method of the DataFrame's underlying RDD: in Scala, call `df.rdd.getNumPartitions`; in PySpark, call `df.rdd.getNumPartitions()`.

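On how to calculate the Spark partition size when reading files: the sketch below is a simplified, pure-Python model of how Spark 3.x plans file-source partitions. It assumes every file is splittable and uses the documented defaults of `spark.sql.files.maxPartitionBytes` (128 MB) and `spark.sql.files.openCostInBytes` (4 MB); `min_partition_num` stands in for `spark.sql.files.minPartitionNum`, which falls back to the default parallelism when unset. The helpers `max_split_bytes` and `estimate_num_partitions` are hypothetical, and real reads can differ (non-splittable or compressed formats, bucketing, AQE).

```python
MB = 1024 * 1024

# Defaults of spark.sql.files.maxPartitionBytes and spark.sql.files.openCostInBytes.
DEFAULT_MAX_PARTITION_BYTES = 128 * MB
DEFAULT_OPEN_COST_IN_BYTES = 4 * MB


def max_split_bytes(file_sizes, min_partition_num,
                    max_partition_bytes=DEFAULT_MAX_PARTITION_BYTES,
                    open_cost_in_bytes=DEFAULT_OPEN_COST_IN_BYTES):
    """Hypothetical helper: target split size in bytes (simplified model)."""
    # Each file is charged its length plus a fixed "open cost".
    total_bytes = sum(size + open_cost_in_bytes for size in file_sizes)
    bytes_per_core = total_bytes // min_partition_num
    # Never exceed maxPartitionBytes, never go below the open cost.
    return min(max_partition_bytes, max(open_cost_in_bytes, bytes_per_core))


def estimate_num_partitions(file_sizes, min_partition_num,
                            max_partition_bytes=DEFAULT_MAX_PARTITION_BYTES,
                            open_cost_in_bytes=DEFAULT_OPEN_COST_IN_BYTES):
    """Hypothetical helper: estimated partition count for a file-based read."""
    split = max_split_bytes(file_sizes, min_partition_num,
                            max_partition_bytes, open_cost_in_bytes)

    # Assume every file is splittable: cut it into chunks of at most `split` bytes.
    chunks = [min(split, size - offset)
              for size in file_sizes
              for offset in range(0, size, split)]

    # Pack the largest chunks first; start a new partition when the next
    # chunk would push the current one past the split size.
    chunks.sort(reverse=True)
    partitions, current_size, current_count = 0, 0, 0
    for chunk in chunks:
        if current_count > 0 and current_size + chunk > split:
            partitions += 1
            current_size, current_count = 0, 0
        current_size += chunk + open_cost_in_bytes
        current_count += 1
    if current_count > 0:
        partitions += 1
    return partitions


if __name__ == "__main__":
    print(estimate_num_partitions([1024 * MB], min_partition_num=8))
    print(estimate_num_partitions([1 * MB] * 100, min_partition_num=8))
```

In this model, a single 1 GiB file read with 8 cores keeps the 128 MB cap and yields 8 partitions; 100 files of 1 MB each are dominated by the open cost, the split size drops to 62.5 MB, and 13 files share each partition, again giving 8 partitions.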
From blog.csdn.net

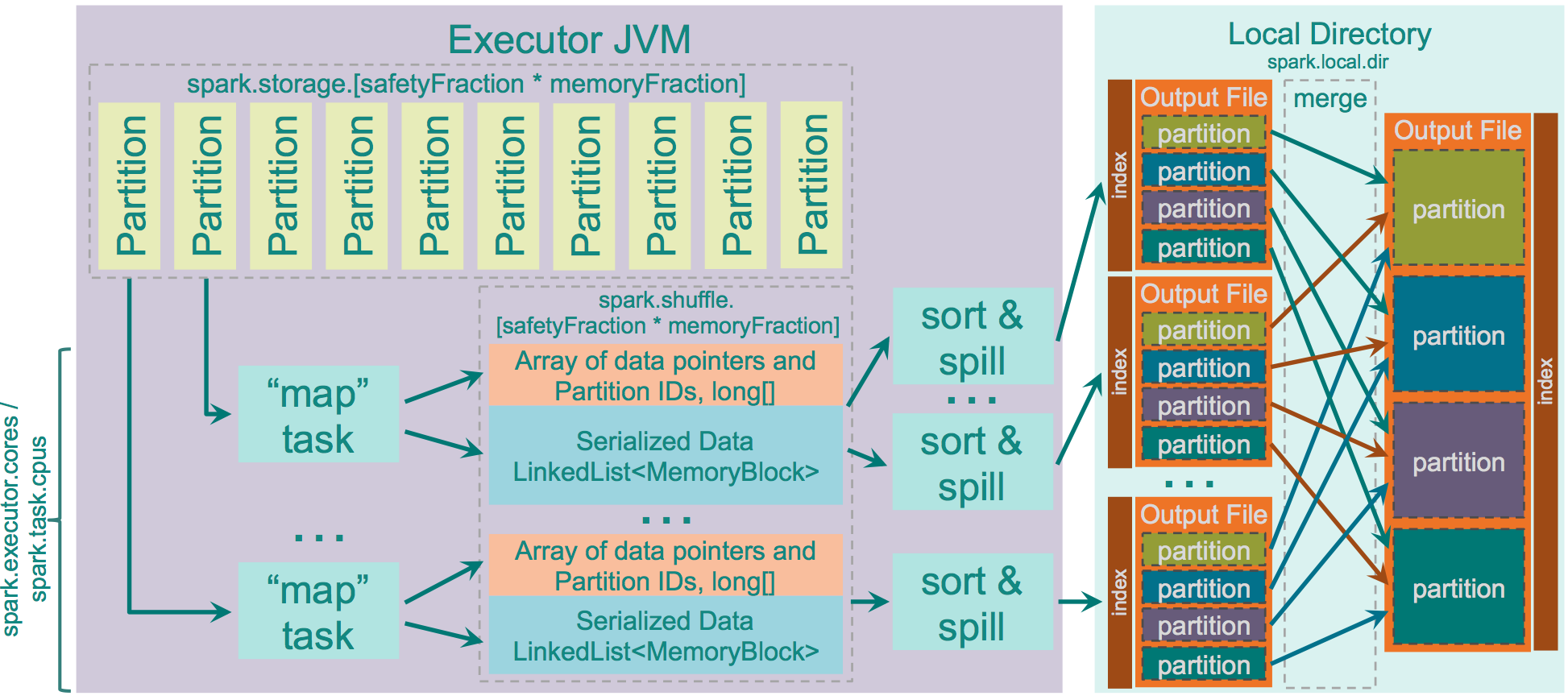

Spark Basics: Shuffle CSDN Blog

From www.gangofcoders.net

How does Spark partition(ing) work on files in HDFS? Gang of Coders

From exokeufcv.blob.core.windows.net

Max Number Of Partitions In Spark at Manda Salazar blog

From blog.csdn.net

Spark Partitions Explained in Detail CSDN Blog

From stackoverflow.com

How does Spark SQL decide the number of partitions it will use when

From www.programmersought.com

[Spark2] [Source code learning] [Number of partitions] How does spark

From towardsdata.dev

Partitions and Bucketing in Spark towards data

From stackoverflow.com

Spark AQE drastically reduces number of partitions

From www.qubole.com

Improving Recover Partitions Performance with Spark on Qubole

From spaziocodice.com

Spark SQL Partitions and Sizes SpazioCodice

From stackoverflow.com

Spark number of tasks vs number of partitions Stack Overflow

From medium.com

Managing Partitions with Spark. If you ever wonder why everyone moved

From toien.github.io

Spark Number of Partitions Kwritin

From sparkbyexamples.com

Spark Get Current Number of Partitions of DataFrame Spark By {Examples}

From laptrinhx.com

Managing Partitions Using Spark Dataframe Methods LaptrinhX

From exocpydfk.blob.core.windows.net

What Is Shuffle Partitions In Spark at Joe Warren blog

From stackoverflow.com

Apache spark Number of tasks less than the number of

From stackoverflow.com

Why is the number of spark streaming tasks different from the Kafka

From www.projectpro.io

How Data Partitioning in Spark helps achieve more parallelism?

From laptrinhx.com

Determining Number of Partitions in Apache Spark: Part I LaptrinhX

From cloud-fundis.co.za

Dynamically Calculating Spark Partitions at Runtime Cloud Fundis

From exoxseaze.blob.core.windows.net

Number Of Partitions Formula at Melinda Gustafson blog

From www.youtube.com

Number of Partitions in Dataframe Spark Tutorial Interview Question

From best-practice-and-impact.github.io

Managing Partitions: Spark at the ONS

From medium.com

Managing Spark Partitions. How data is partitioned and when do you

From blogs.perficient.com

Spark Partition: An Overview Perficient

From www.projectpro.io

DataFrames number of partitions in spark scala in Databricks

From www.youtube.com

How to find Data skewness in spark / How to get count of rows from each

From www.youtube.com

Why should we partition the data in spark? YouTube

From stackoverflow.com

Partition a Spark DataFrame based on values in an existing column into

From medium.com

Guide to Selection of Number of Partitions while reading Data Files in

From naifmehanna.com

Efficiently working with Spark partitions · Naif Mehanna