AWS S3 Bucket Object Zip File

To store your data in Amazon S3, you work with resources known as buckets and objects. A bucket is a container for objects.

To combine all the files in an S3 bucket into a single zip file, you can use AWS Lambda (Python) with the AWS SDK for Python (boto3). In a nutshell, first create an in-memory buffer with io.BytesIO, then use zipfile.ZipFile to write into that buffer while iterating over all the objects. Another approach is to create the archival stream first and then set up a passthrough stream into an S3 upload.

Extraction works in the other direction. End users upload zip files to the root of an Amazon S3 bucket, and S3 sends an event notification to AWS Lambda whose payload references one or more of those zip files. For large zip files, extraction can instead run on AWS EC2 with Python: stream the zip file from the source bucket, then read and write its contents on the fly back to another S3 bucket.
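The BytesIO and zipfile steps described above can be sketched roughly as follows. This is a minimal illustration, not a definitive implementation: the helper names (build_zip, extract_zip, zip_bucket), the destination bucket name, and the key layout are all assumptions made for the example. It holds whole archives in memory, so it suits small buckets only; large archives need the streaming approach.

```python
import io
import zipfile
from urllib.parse import unquote_plus


def build_zip(files):
    """Pack a {name: bytes} mapping into a zip archive held in memory."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for name, data in files.items():
            archive.writestr(name, data)
    return buffer.getvalue()


def extract_zip(zip_bytes):
    """Yield (member_name, member_bytes) for every file in the archive."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for info in archive.infolist():
            if not info.is_dir():
                yield info.filename, archive.read(info)


def zip_bucket(source_bucket, dest_bucket, dest_key):
    """Zip every object in source_bucket into one object in dest_bucket."""
    import boto3  # imported lazily so the helpers above work without it

    s3 = boto3.client("s3")
    files = {}
    paginator = s3.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=source_bucket):
        for obj in page.get("Contents", []):
            response = s3.get_object(Bucket=source_bucket, Key=obj["Key"])
            files[obj["Key"]] = response["Body"].read()
    s3.put_object(Bucket=dest_bucket, Key=dest_key, Body=build_zip(files))


def lambda_handler(event, context):
    """Unpack each uploaded zip named in an S3 event notification."""
    import boto3

    s3 = boto3.client("s3")
    dest_bucket = "example-extracted-bucket"  # hypothetical bucket name
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in the event payload.
        key = unquote_plus(record["s3"]["object"]["key"])
        zip_bytes = s3.get_object(Bucket=bucket, Key=key)["Body"].read()
        for name, data in extract_zip(zip_bytes):
            # Assumed layout: extracted files go under a prefix named after the zip.
            s3.put_object(Bucket=dest_bucket, Key=f"{key}/{name}", Body=data)
```

The two pure helpers can be tried locally without AWS credentials, for example `dict(extract_zip(build_zip({"a.txt": b"hello"})))`.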

From trailhead.salesforce.com

Get Object Storage with Amazon S3 Salesforce Trailhead

From exotjiiiv.blob.core.windows.net

Aws_S3_Bucket_Object Folder at Jennifer Rowe blog

From www.pulumi.com

Serverless App to Copy and Zip Objects Between Amazon S3 Buckets

From medium.com

How to copy S3 bucket objects from one AWS account to another AWS

From baeldung-cn.com

Listing All AWS S3 Objects in a Bucket Using Java Baeldung中文网

From docs.aws.amazon.com

Naming S3 buckets in your data layers AWS Prescriptive Guidance

From linuxbeast.com

How to Copy S3 Bucket Objects Across AWS Accounts Linuxbeast

From raaviblog.com

How to Get AWS S3 bucket object data using Postman A Turning Point

From s3browser.com

Working with Amazon S3 Object Lock how to prevent accidental or

From deliciousbrains.com

Amazon S3 Bucket Object Ownership

From www.youtube.com

14 AWS S3 Bucket Object Basics Introduction of AWS S3 [2024

From mulesy.com

Create Object In S3 bucket MuleSoft Amazon S3 Connector

From aws.amazon.com

Synchronizing Amazon S3 Buckets Using AWS Step Functions AWS Compute Blog

From www.armorcode.com

AWS S3 Bucket Security The Top CSPM Practices ArmorCode

From vpnoverview.com

A Complete Guide to Securing and Protecting AWS S3 Buckets

From www.skillshats.com

How to upload an object on AWS S3 Bucket? SkillsHats

From www.vrogue.co

Aws S3 Bucket A Complete Guide To Create And Access D vrogue.co

From finleysmart.z13.web.core.windows.net

List Objects In S3 Bucket Aws Cli

From www.hava.io

Amazon S3 Fundamentals

From www.youtube.com

AWSS3 Bucket Tutorial How to Upload an Object to S3 Bucket in AWS

From buddymantra.com

Amazon S3 Bucket Everything You Need to Know About Cloud Storage

From cto.ai

How To Copy AWS S3 Files Between Buckets

From aws.amazon.com

Store and Retrieve a File with Amazon S3

From towardsaws.com

Setup a Custom Domain to Access Your AWS S3 Bucket Objects by

From www.youtube.com

Creating a Custom Domain for AWS S3 Bucket Objects using PHP YouTube

From medium.com

Access AWS S3 buckets objects using lambda function and API Gateway

From medium.com

Serve static assets on S3 Bucket - A complete flask guide. by

From serverless-stack.com

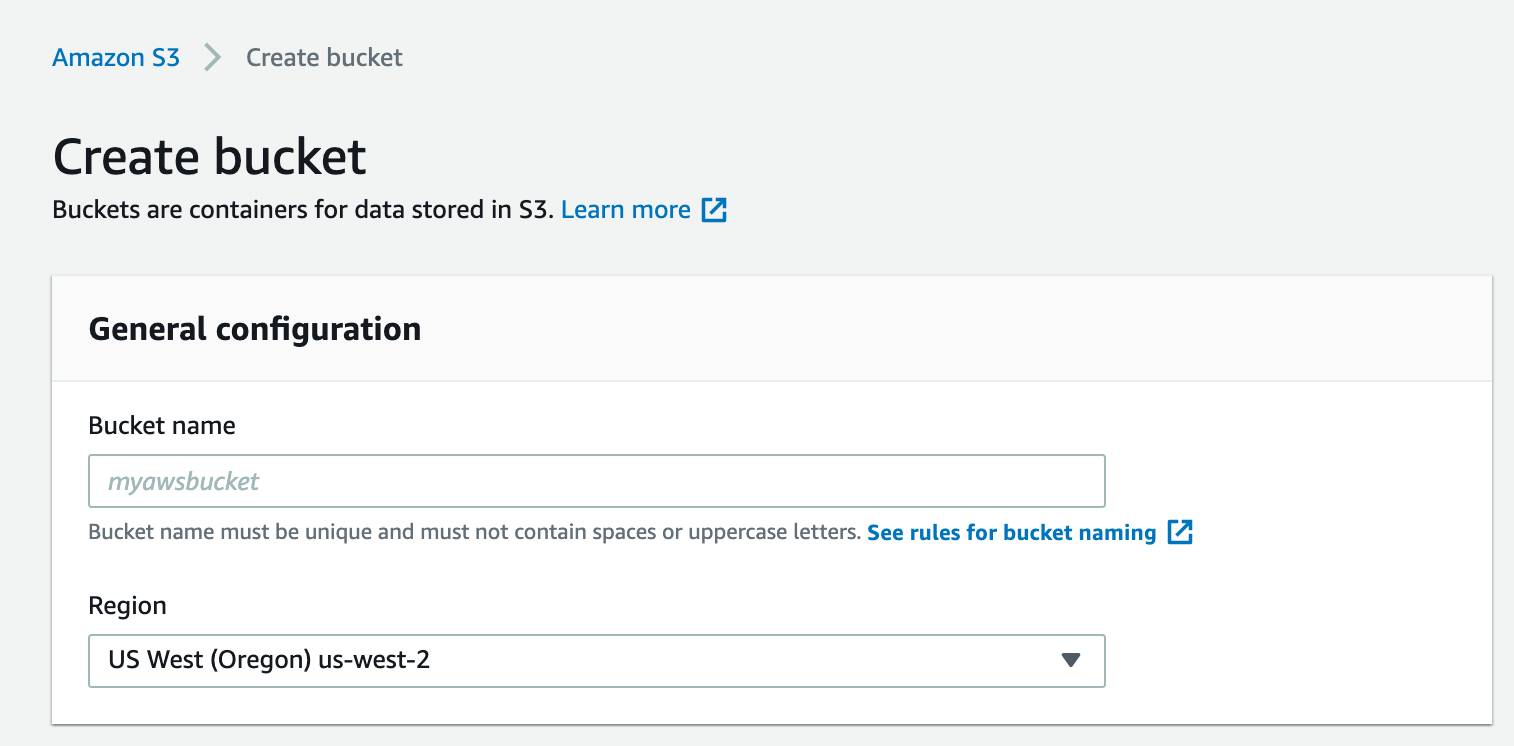

Create an S3 Bucket for File Uploads

From www.vrogue.co

How To Create An S3 Bucket On Aws Complete Guide vrogue.co

From binaryguy.tech

Quickest Ways to List Files in S3 Bucket

From www.geeksforgeeks.org

How To Aceses AWS S3 Bucket Using AWS CLI ?

From cloudkatha.com

How to Create S3 Bucket in AWS Step by Step CloudKatha
