airflow.providers.amazon.aws.operators.s3 delete_objects

Apache Airflow's S3 hook allows for easy interaction with AWS S3 buckets and provides methods for various S3 operations. On the operator side, `S3DeleteObjectsOperator(*, bucket, keys=None, prefix=None, aws_conn_id='aws_default', ...)` deletes objects from a bucket, selected either by explicit keys or by a key prefix. To remove a bucket together with its contents, use `S3DeleteBucketOperator` with `force_delete=True`, which forcibly deletes all objects in the bucket. To list all Amazon S3 prefixes within a bucket, use `S3ListPrefixesOperator`. `S3GetBucketTaggingOperator(bucket_name, aws_conn_id='aws_default', **kwargs)` retrieves a bucket's tags. The source states that the `airflow.providers.amazon.aws.operators.s3` module is available from Airflow 2.6.0 onwards; because the module ships in the separately versioned apache-airflow-providers-amazon package, confirm this against the provider version you have installed. A related note on consolidating provider modules is cut off in the source: "if you are deleting those five files and combining them into a single operators/dms.py, then the deleted files need to ...".
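As a rough illustration of the `S3DeleteObjectsOperator` signature quoted above, the sketch below builds the operator's keyword arguments and then creates the task. The helper names `build_delete_kwargs` and `make_delete_task` are hypothetical and not part of the provider. The check that exactly one of `keys` or `prefix` is set reflects my reading of the provider documentation and should be treated as an assumption. The Airflow import is deferred so the argument check can be tried without Airflow installed.

```python
from typing import Any, Dict, Optional, Sequence, Union


def build_delete_kwargs(
    bucket: str,
    keys: Optional[Union[str, Sequence[str]]] = None,
    prefix: Optional[str] = None,
    aws_conn_id: str = "aws_default",
) -> Dict[str, Any]:
    """Collect keyword arguments for S3DeleteObjectsOperator (hypothetical helper)."""
    # Assumption: keys and prefix are alternatives, so exactly one must be given.
    if (keys is None) == (prefix is None):
        raise ValueError("Set exactly one of 'keys' or 'prefix'.")
    kwargs: Dict[str, Any] = {"bucket": bucket, "aws_conn_id": aws_conn_id}
    if keys is not None:
        kwargs["keys"] = keys
    else:
        kwargs["prefix"] = prefix
    return kwargs


def make_delete_task(task_id: str, **kwargs: Any):
    """Create the delete task (hypothetical helper); requires the Amazon provider."""
    # Deferred import: only needed when a task is actually created.
    from airflow.providers.amazon.aws.operators.s3 import S3DeleteObjectsOperator

    return S3DeleteObjectsOperator(task_id=task_id, **build_delete_kwargs(**kwargs))
```

Inside a DAG definition, a call such as `make_delete_task("clean_tmp", bucket="my-bucket", prefix="tmp/")` would delete every object under `tmp/`; the bucket name and prefix here are placeholders.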

from airflow.apache.org


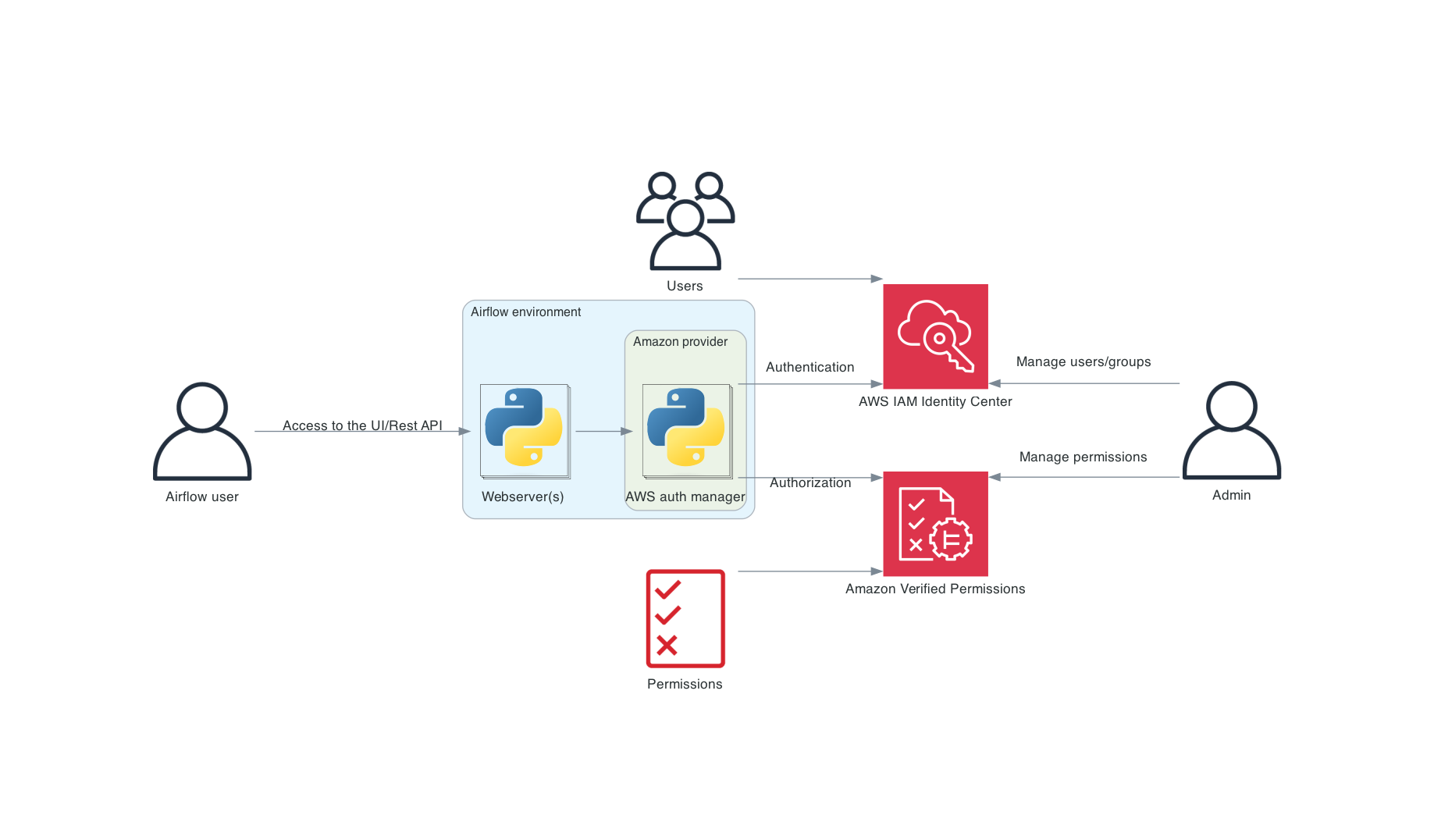

AWS auth manager — apache-airflow-providers-amazon Documentation


From www.youtube.com

AWS CLI S3 List, Create, Sync, Delete, Move Buckets and Objects in

From mulesy.com

Delete Object From S3 MuleSoft Amazon S3 Connector

From aws.amazon.com

Amazon Simple Storage Services (S3) AWS Architecture Blog

From aws.amazon.com

S3 Object Lock Amazon S3 Amazon Services

From aws.amazon.com

Using AWS DevOps Tools to model and provision AWS Glue workflows AWS

From fyokpfjsm.blob.core.windows.net

Aws Cli Delete Contents Of S3 Bucket at Wendy Humes blog

From hevodata.com

All About Airflow Redshift Operator Made Easy 101

From s3browser.com

Amazon S3 MultiObject Delete. The super fast way to delete large

From stackoverflow.com

amazon web services AWS S3 Lifecycle policy delete objects in a

From docs.aws.amazon.com

Amazon S3 Object Replication Across AWS Regions File Gateway for

From aws.amazon.com

A solution for deploying a highly available Apache Airflow cluster using AWS cloud-native services (Amazon AWS Official Blog)

From aws.amazon.com

How USAA built an Amazon S3 malware scanning solution AWS

From aws.amazon.com

Adding and removing object tags with Amazon S3 Batch Operations AWS

From aws.amazon.com

Migrating from self-managed Apache Airflow to Amazon Managed Workflows

From www.youtube.com

7 ways to delete objects from AWS S3 How and when to use them Hands

From tianzhui.cloud

AWS re:Invent 2020 Data pipelines with Amazon Managed Workflows for

From hevodata.com

Working with Amazon S3 Keys 3 Critical Aspects Learn Hevo

From aws.amazon.com

Amazon S3 Cloud Object Storage AWS

From aws.amazon.com

Automating Amazon CloudWatch dashboards and alarms for Amazon Managed

From www.youtube.com

Airflow AWS S3 Sensor Operator Airflow Tutorial P12 YouTube

From techdirectarchive.com

How to delete AWS S3 Bucket and Objects via AWS CLI from Linux

From www.youtube.com

How to create S3 connection for AWS and MinIO in latest airflow version

From highskyit.com

AWS Deny user to Delete Object & Put Object from S3 Bucket Highsky

From towardsdatascience.com

Apache Airflow for Data Science — How to Download Files from Amazon S3

From blog.awsfundamentals.com

AWS S3 Sync An Extensive Guide

From aws.amazon.com

Workflow Management Amazon Managed Workflows for Apache Airflow (MWAA

From airflow.apache.org

AWS Secrets Manager Backend — apache-airflow-providers-amazon Documentation

From airflow.apache.org

Writing logs to Amazon S3 — apache-airflow-providers-amazon Documentation

From airflow.apache.org

AWS auth manager — apache-airflow-providers-amazon Documentation

From airflow.apache.org

AWS Secrets Manager Backend — apache-airflow-providers-amazon Documentation

From docs.aws.amazon.com

What is Amazon Managed Workflows for Apache Airflow? Amazon Managed

From stackoverflow.com

amazon web services AWS S3 delete all the objects or within in a

From www.youtube.com

AWS S3 versioning S3 versioning Restore S3 file S3 Delete Marker

From www.skillshats.com

How to delete AWS S3 bucket? SkillsHats

From aws.amazon.com

Amazon S3 AWS Big Data Blog