Multiprocessing PySpark

Spark is a distributed parallel computation framework, but there are still some functions that can be parallelized with plain Python. In PySpark, parallel processing is done using RDDs (resilient distributed datasets), which are the fundamental data structure in Spark. `pyspark.SparkContext.parallelize` is a `SparkContext` method that creates an RDD from a local Python collection. In a Python context, think of PySpark as a way to handle parallel processing without needing the `threading` or `multiprocessing` modules. Spark has also added features, such as pandas UDFs and the pandas API on Spark, that enable parallelized processing of pandas DataFrames within a Spark environment.

The `multiprocessing` library runs concurrent Python processes, not threads. Its `ThreadPool` class runs threads instead. Threads are the practical way to run several operations on Spark DataFrames at once, because the SparkSession lives in the driver process and cannot be pickled or safely shared with child processes.

I have set up a Spark-on-YARN cluster on my laptop and am having trouble running multiple concurrent jobs in Spark using Python. In the PySpark version, user-defined aggregation functions are still not fully supported, so I decided to leave them out for now.
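The difference between processes and threads matters in practice. The following is a minimal sketch that uses only the standard library. `Pool` spreads plain-Python, CPU-bound work over separate processes. `ThreadPool` runs Spark actions from driver threads, so each thread submits its own job. All helper names here are hypothetical. `count_tables` assumes an existing SparkSession passed in as `spark` and uses placeholder table names.

```python
from multiprocessing import Pool
from multiprocessing.pool import ThreadPool


def cpu_bound(n):
    """Plain-Python work that benefits from separate processes (each has its own GIL)."""
    return sum(i * i for i in range(n))


def run_in_processes(inputs, workers=4):
    # Pool starts worker processes; only picklable, module-level functions and data can cross over.
    with Pool(processes=workers) as pool:
        return pool.map(cpu_bound, inputs)


def run_spark_jobs_concurrently(jobs, workers=4):
    """Run zero-argument callables (e.g. Spark actions) from driver threads.

    Threads share the driver's SparkSession, so each callable can trigger its own
    Spark job, and Spark's scheduler can run those jobs at the same time.
    Results come back in the same order as `jobs`.
    """
    with ThreadPool(processes=workers) as pool:
        return pool.map(lambda job: job(), jobs)


def count_tables(spark, table_names, workers=4):
    # Hypothetical helper: `spark` is an existing SparkSession; table names are placeholders.
    # `name=name` binds each table name at lambda creation time.
    jobs = [lambda name=name: spark.table(name).count() for name in table_names]
    return run_spark_jobs_concurrently(jobs, workers)
```

On platforms that start processes with `spawn` (Windows, macOS), call `run_in_processes` from under an `if __name__ == "__main__":` guard. Concurrent jobs from one application compete for that application's executors. Setting `spark.scheduler.mode` to `FAIR` lets them share resources more evenly than the default FIFO order.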

from sparkbyexamples.com

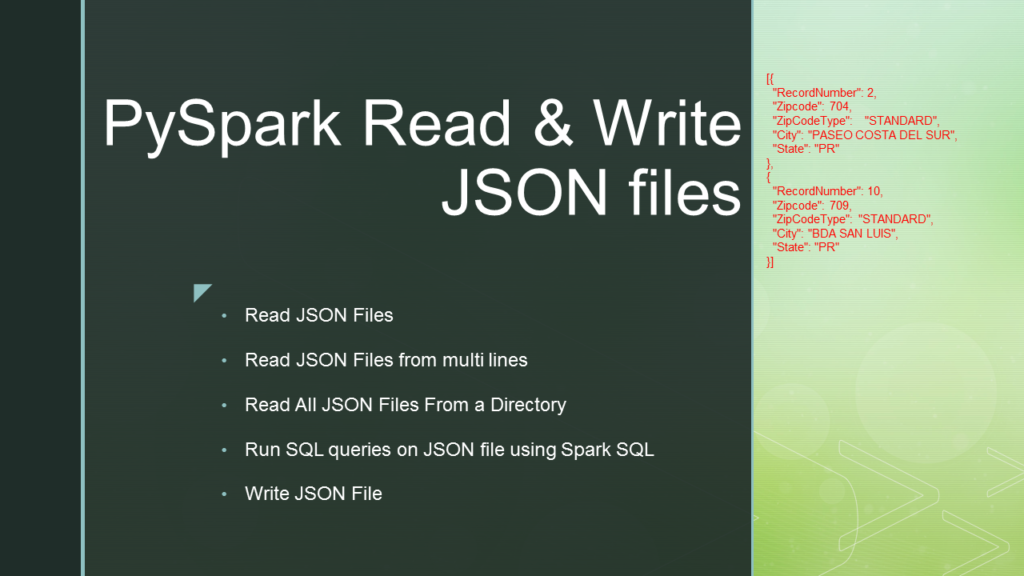

PySpark Read JSON file into DataFrame Spark By {Examples}
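The `parallelize` method described earlier can be made concrete with a minimal sketch. It assumes PySpark is installed and that `sc` is an existing SparkContext, for example `spark.sparkContext`. The helper name `parallel_squares` is hypothetical.

```python
# Minimal sketch; assumes PySpark is installed and an existing SparkContext is passed in.


def parallel_squares(sc, values, num_slices=4):
    """Turn a local Python list into an RDD and square each element in parallel."""
    # parallelize distributes the local collection as an RDD with `num_slices` partitions
    rdd = sc.parallelize(values, numSlices=num_slices)
    # map is a lazy transformation; it runs on the executors, one task per partition
    squared = rdd.map(lambda x: x * x)
    # collect is an action: it triggers the job and returns the results to the driver as a list
    return squared.collect()
```

With a local session, `parallel_squares(spark.sparkContext, list(range(10)))` should return `[0, 1, 4, 9, 16, 25, 36, 49, 64, 81]`, because `collect` preserves the original element order.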
