Muesli: Combining Improvements in Policy Optimization

By Matteo Hessel, Ivo Danihelka, Fabio Viola, Arthur Guez, Simon Schmitt, Laurent Sifre, Theophane Weber, David Silver, and Hado van Hasselt.

We propose a novel policy update that combines regularized policy optimization with model learning as an auxiliary loss, and does so without using deep search. More specifically, we use a model inspired … The regularization relies on a Maximum a posteriori Policy Optimisation (MPO) (Abdolmaleki et al., 2018) style mechanism, based on clipped normalized advantages, that is robust to scaling issues without requiring …
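The clipped-normalized-advantage idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the regularization target is the prior policy reweighted by exp(clip(Â, -c, c)), where Â is the advantage Q - V divided by a scale estimate (here a caller-supplied `adv_std`), and that the regularizer is a KL divergence from that target to the current policy. The function names, the clip bound `clip=1.0`, and the loss weights are all hypothetical.

```python
import math

# Hypothetical helper names; a sketch, not the Muesli reference code.


def clipped_normalized_advantages(q_values, value, adv_std, clip=1.0, eps=1e-8):
    """Return clip((Q(a) - V) / scale, -clip, clip) for each action.

    `adv_std` is an assumed, caller-supplied scale estimate (for example a
    running standard deviation of advantages); `eps` avoids division by zero.
    """
    return [max(-clip, min(clip, (q - value) / (adv_std + eps))) for q in q_values]


def regularization_target(prior_probs, q_values, value, adv_std, clip=1.0):
    """Assumed target: prior(a) * exp(clipped normalized advantage), renormalized."""
    adv = clipped_normalized_advantages(q_values, value, adv_std, clip)
    weights = [p * math.exp(a) for p, a in zip(prior_probs, adv)]
    total = sum(weights)
    return [w / total for w in weights]


def kl_divergence(target_probs, policy_probs, eps=1e-12):
    """KL(target || policy); actions with zero target mass contribute nothing."""
    return sum(
        t * (math.log(t) - math.log(p + eps))
        for t, p in zip(target_probs, policy_probs)
        if t > 0
    )


def total_loss(policy_loss, reg_loss, model_loss, reg_weight=1.0, model_weight=1.0):
    """Policy objective plus weighted regularizer plus auxiliary model-learning loss.

    The weights are illustrative assumptions; `model_loss` stands for whatever
    prediction loss the learned model is trained with.
    """
    return policy_loss + reg_weight * reg_loss + model_weight * model_loss


# Usage: two actions, uniform prior, advantages of +2 and -2 before clipping.
target = regularization_target([0.5, 0.5], [1.0, 0.0], value=0.5, adv_std=0.25)
print(target)  # first entry is e^2 / (e^2 + 1), roughly 0.88
```

In this sketch, clipping bounds how far the target can move from the prior in one update, and normalization is what makes it insensitive to reward scale: multiplying all rewards by a constant scales both Q - V and its scale estimate by the same factor, so the target is unchanged.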

From zhuanlan.zhihu.com

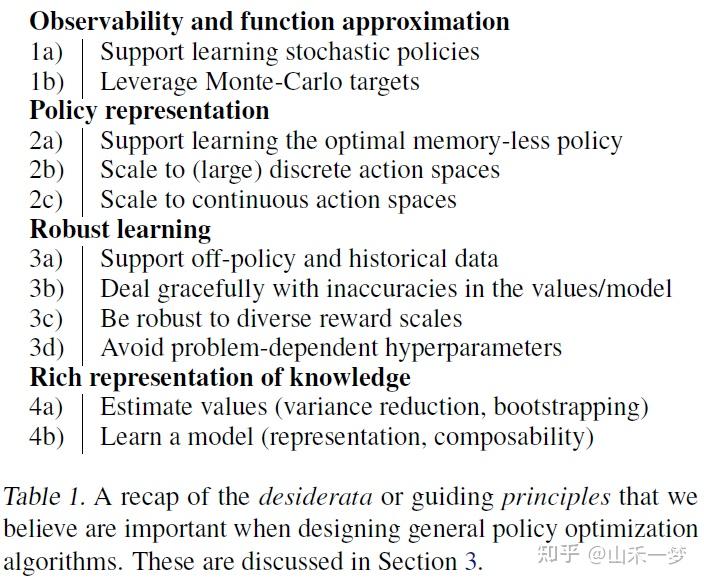

Model-based 8 Muesli Combining Improvements in Policy Optimization

From www.researchgate.net

(PDF) Multiscale Topology Optimization Combining Density-Based

From www.researchgate.net

Combining simulation and optimization to derive operating policies for

From www.nibav.com

On Page Optimization Services for your site Nibav

From www.innovit.com

A Guide to Business Process Optimization With Workflow Management Innovit

From www.energy.gov

Institutional Change Process Step 4 Implement an Action Plan

From www.researchgate.net

(PDF) Combining Logics for Modelling Security Policies.

From www.youtube.com

Introduction to Optimization Techniques YouTube

From studylib.net

NEW ADVANCES COMBINING OPTIMIZATION AND SIMULATION

From deepai.org

Guided Uncertainty-Aware Policy Optimization Combining Learning and

From www.youtube.com

Combining Optimization with Machine Learning for Better Decisions

From docslib.org

Virtual Reinforcement Learning for Balancing an Inverted Pendulum in

From www.rangelandsdata.org

Combining improvements in the livestock production system with

From www.researchgate.net

(PDF) New Advances and Applications of Combining Simulation and

From www.planplusonline.com

7 key lessons in optimization Get Organized Online Calendar

From deepai.org

Lane-Merging Using Policy-based Reinforcement Learning and Post

From www.frevvo.com

Top 12 Process Improvement Tools to Enhance Workflows frevvo Blog

From www.researchgate.net

Examples of functions where LBO provides huge improvements over BO for

From deepai.org

Rainbow: Combining Improvements in Deep Reinforcement Learning DeepAI

From www.researchgate.net

1 A typical scheme of Evolutionary Algorithm for Autonomous Robot

From paperswithcode.com

Muesli: Combining Improvements in Policy Optimization Papers With Code

From docslib.org

Improving Model-Based Reinforcement Learning with Internal State

From www.slideserve.com

PPT Math443/543 Mathematical Modeling and Optimization PowerPoint

From zhuanlan.zhihu.com

Awesome Paper Collection | How to keep up with the latest MCTS research? Check out LightZero's Monte Carlo Tree Search paper collection! (1) Zhihu

From www.semanticscholar.org

[PDF] Rainbow: Combining Improvements in Deep Reinforcement Learning

From www.seldon.io

Algorithm Optimisation for Machine Learning Seldon

From greenbayhotelstoday.com

Cost Reduction 101 Comprehensive Guide to Procurement Cost Reduction

From turkishweekly.net

The Advantages of Using Aluminum Seamless Tubes in Industrial

From www.researchgate.net

(PDF) Deterministic Policy Optimization by Combining Pathwise and Score

From www.pinterest.com

Pin by Basic Vegan

From betterbuildingssolutioncenter.energy.gov

The Multiple Benefits of Energy Efficiency Better Buildings Initiative

From deepai.org

Muesli: Combining Improvements in Policy Optimization DeepAI

From www.researchgate.net

(PDF) Multi-Objective Optimization of Dynamic Systems combining

From docslib.org

Multi-Agent Reinforcement Learning a Review of Challenges and

From www.painthause.com

10 IT Cost Optimization Techniques for Private and Public Sector