Machine Translation Evaluation Versus Quality Estimation

Most evaluation metrics for machine translation (MT) require a reference translation for each sentence in order to produce a score reflecting its quality. Unlike quality evaluation, machine translation quality estimation (MTQE) does not rely on human reference translations. Assigning overall scores was the very first method of manual MT evaluation (ALPAC, 1966; White et al., 1994). Recent shared evaluation tasks have shown progress on the average quality of MT systems. In this paper we compare and contrast two approaches to MT: … We show that this approach yields better correlation with human evaluation than commonly used metrics.
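The difference between the two settings is mostly one of inputs: a reference-based metric scores an MT output against a human reference, while a QE system sees only the source and the MT output. The minimal Python sketch below illustrates that contrast under stated assumptions. `chrf_like` is a simplified chrF-style character n-gram F-score (not the sacreBLEU implementation), `toy_qe` is a hypothetical, deliberately crude stand-in for a trained QE model, and the sentences and human scores are invented for illustration. Both kinds of score are then checked the same way, by Pearson correlation with human judgments.

```python
from collections import Counter
from math import sqrt


def char_ngrams(text: str, n: int) -> Counter:
    """Count character n-grams after removing whitespace (as chrF does by default)."""
    s = "".join(text.split())
    return Counter(s[i:i + n] for i in range(len(s) - n + 1))


def chrf_like(hypothesis: str, reference: str, max_n: int = 6, beta: float = 2.0) -> float:
    """Simplified chrF-style score. Reference-based: it needs a human reference."""
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        hyp, ref = char_ngrams(hypothesis, n), char_ngrams(reference, n)
        overlap = sum((hyp & ref).values())  # clipped n-gram matches
        if hyp:
            precisions.append(overlap / sum(hyp.values()))
        if ref:
            recalls.append(overlap / sum(ref.values()))
    if not precisions or not recalls:
        return 0.0
    p = sum(precisions) / len(precisions)
    r = sum(recalls) / len(recalls)
    if p == 0 and r == 0:
        return 0.0
    # F-beta with beta > 1 weights recall more heavily than precision.
    return (1 + beta**2) * p * r / (beta**2 * p + r)


def toy_qe(source: str, hypothesis: str) -> float:
    """Hypothetical reference-free stand-in, NOT a real QE model.

    It sees only the source and the MT output and returns their token-length ratio.
    """
    s, h = len(source.split()), len(hypothesis.split())
    if s == 0 or h == 0:
        return 0.0
    return min(s, h) / max(s, h)


def pearson(xs, ys) -> float:
    """Pearson correlation, e.g. between automatic scores and human scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)


if __name__ == "__main__":
    # Invented toy data: (source, MT output, reference, human score in [0, 1]).
    data = [
        ("le chat dort", "the cat sleeps", "the cat is sleeping", 0.9),
        ("il pleut", "it rains", "it is raining", 0.8),
        ("je suis fatigué", "I am", "I am tired", 0.3),
    ]
    human = [score for _, _, _, score in data]
    metric = [chrf_like(hyp, ref) for _, hyp, ref, _ in data]  # uses references
    qe = [toy_qe(src, hyp) for src, hyp, _, _ in data]         # no references
    print("metric vs human r =", round(pearson(metric, human), 3))
    print("QE vs human r     =", round(pearson(qe, human), 3))
```

Swapping `toy_qe` for a trained QE model leaves the evaluation loop unchanged; only the inputs differ. With three invented segments the printed correlations say nothing about real systems. Meta-evaluations of this kind are usually run over many human-scored segments, and Kendall's tau is often reported alongside or instead of Pearson's r.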

From www.slideshare.net

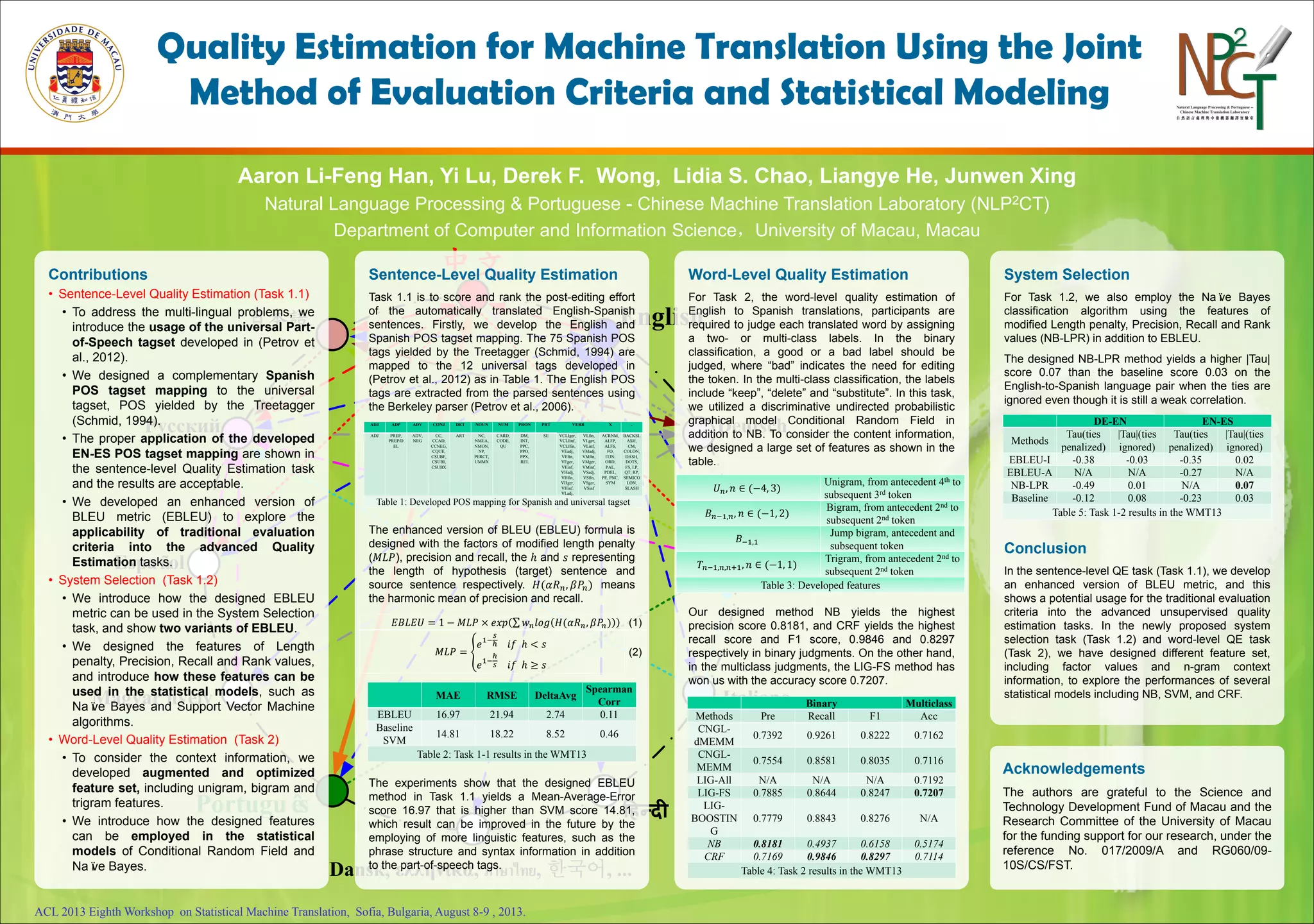

ACL-WMT13 poster. Quality Estimation for Machine Translation Using the …

From www.taus.net

DeMT™ Estimate API: Machine Translation Quality Estimation (TAUS)

From www.researchgate.net

(PDF) Improving Evaluation of Document-level Machine Translation

From dipteshkanojia.github.io

Quality Estimation for Machine Translation (Kanojia, Diptesh)

From www.slideserve.com

PPT: Statistical Machine Translation, Part I: Introduction (PowerPoint)

From www.aclweb.org

Improving Evaluation of Machine Translation Quality Estimation (ACL)

From www.aclweb.org

Improving Evaluation of Document-level Machine Translation Quality

From www.slideserve.com

PPT: Rationale for a multilingual corpus for machine translation

From www.researchgate.net

(PDF) Exploring Consensus in Machine Translation for Quality Estimation

From www.researchgate.net

(PDF) Enhancing Machine Translation Quality Estimation via Fine-Grained …

From www.researchgate.net

(PDF) Multimodal Quality Estimation for Machine Translation

From blog.modernmt.com

Understanding Machine Translation Quality

From www.researchgate.net

(PDF) Fine-grained evaluation of Quality Estimation for Machine …

From www.researchgate.net

(PDF) Quality Estimation Of Machine Translation Outputs Through Stemming

From aclanthology.org

Metrics for Evaluation of Word-level Machine Translation Quality

From deepai.org

MDQE: A More Accurate Direct Pretraining for Machine Translation

From www.mdpi.com

Applied Sciences, Free Full-Text: Comparative Analysis of Current …

From www.researchgate.net

(PDF) A Quality Estimation and Quality Evaluation Tool for the …

From www.mdpi.com

Informatics, Free Full-Text: The Role of Machine Translation Quality

From blog.pangeanic.com

Techniques for Measuring Machine Translation Quality

From www.researchgate.net

(PDF) An Overview on Machine Translation Evaluation

From www.perlego.com

[PDF] Quality Estimation for Machine Translation by Lucia Specia (book)

From aclanthology.org

Quality Estimation for Machine Translation Using the Joint Method of …

From www.researchgate.net

(PDF) Integrating Fuzzy Matches into Sentence-level Quality Estimation

From www.researchgate.net

Who needs machine translation evaluation? (Download Scientific Diagram)

From www.researchgate.net

(PDF) Comparative Quality Estimation for Machine Translation

From aclanthology.org

PreQuEL: Quality Estimation of Machine Translation Outputs in Advance

From www.researchgate.net

(PDF) Enhancing machine translation with quality estimation and …

From www.researchgate.net

(PDF) Quality Estimation for Machine Translation Using the Joint Method

From www.taus.net

Quality Estimation for Machine Translation

From crosslang.com

Machine Translation Evaluation Tool (CrossLang)

From www.researchgate.net

(PDF) Machine Translation Evaluation: Manual Versus Automatic, A …

From www.taus.net

Three Facts about Machine Translation Quality Estimation

From www.researchgate.net

(PDF) Tailoring Domain Adaptation for Machine Translation Quality

From deepai.org

PreQuEL: Quality Estimation of Machine Translation Outputs in Advance