PyTorch Categorical Embedding

The most common approach to create continuous values from categorical data is nn.Embedding. nn.Embedding is a PyTorch layer that maps indices from a fixed vocabulary to dense vectors of fixed size, known as embeddings. This mapping is done through an embedding matrix, which is a learnable lookup table with one row per index. To fully understand how embedding layers work in PyTorch, we'll build a simple example.

Since we only need to embed categorical columns, we split our input into two parts: the categorical columns, which pass through the embedding layers, and the remaining numerical columns. Deep learning is generally done in batches, so we then choose our batch size and feed it along with the dataset to the DataLoader.

PyTorch Tabular has implemented a few SOTA models for tabular data. Internally in PyTorch Tabular, a model has three … For the models that support it (CategoryEmbeddingModel and …), the learned embedding can be extracted.
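The ideas above (an embedding as a row lookup, splitting the input into categorical and numerical parts, and batching through a DataLoader) can be sketched roughly as follows. This is a minimal illustration in plain PyTorch, not PyTorch Tabular's implementation: the toy data, the column cardinalities, and the `TabularNet` class are all hypothetical names made up for this example.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical toy table: 2 integer-encoded categorical columns
# and 3 numerical columns, 8 rows in total.
cardinalities = [4, 6]  # assumed number of distinct categories per column
n_rows, n_num = 8, 3
x_cat = torch.stack(
    [torch.randint(0, c, (n_rows,)) for c in cardinalities], dim=1
)
x_num = torch.randn(n_rows, n_num)
y = torch.randn(n_rows, 1)

# An embedding layer is a lookup table: index i returns row i of `weight`.
emb = nn.Embedding(num_embeddings=4, embedding_dim=2)
idx = torch.tensor([1, 3])
lookup_matches_rows = torch.equal(emb(idx), emb.weight[idx])


class TabularNet(nn.Module):
    """Embeds each categorical column, then concatenates the numerical columns."""

    def __init__(self, cardinalities, emb_dim, n_num):
        super().__init__()
        # One embedding table per categorical column.
        self.embeddings = nn.ModuleList(
            [nn.Embedding(c, emb_dim) for c in cardinalities]
        )
        self.head = nn.Linear(len(cardinalities) * emb_dim + n_num, 1)

    def forward(self, x_cat, x_num):
        # x_cat: (batch, n_cat) int64 indices; x_num: (batch, n_num) floats.
        embedded = [e(x_cat[:, i]) for i, e in enumerate(self.embeddings)]
        return self.head(torch.cat(embedded + [x_num], dim=1))


model = TabularNet(cardinalities, emb_dim=2, n_num=n_num)

# Batching: wrap the tensors in a dataset and pass it, with a batch size,
# to the DataLoader.
loader = DataLoader(TensorDataset(x_cat, x_num, y), batch_size=4, shuffle=True)
for cat_batch, num_batch, target in loader:
    pred = model(cat_batch, num_batch)
    print(pred.shape)  # torch.Size([4, 1])
```

Keeping one embedding table per categorical column lets each column have its own vocabulary size; the embedding dimension is fixed at 2 here only to keep the sketch small.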

From discuss.pytorch.org

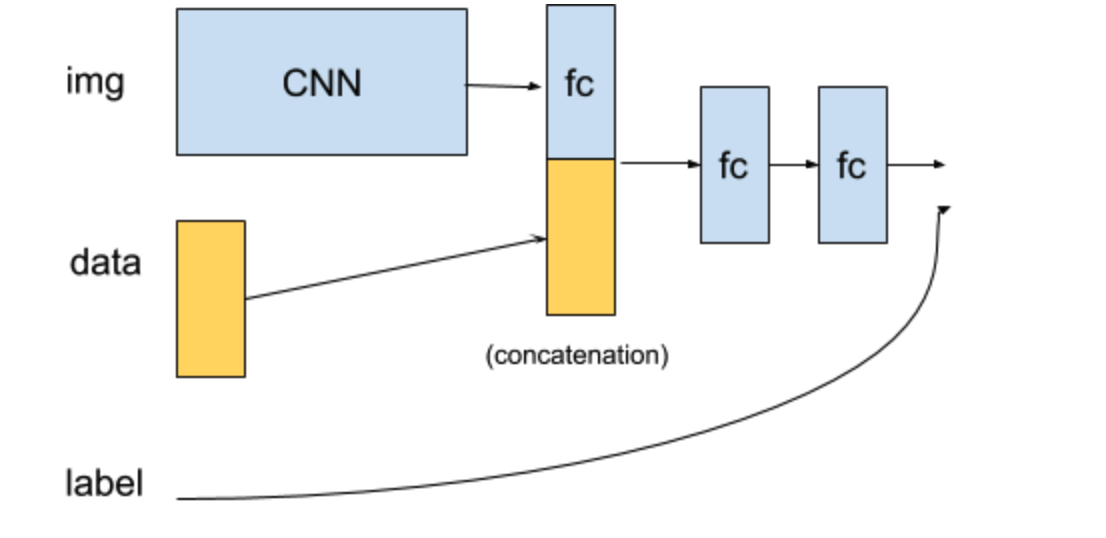

Pass categorical data along with images vision PyTorch Forums

From www.researchgate.net

(PDF) Categorical Embeddings for Tabular Data using PyTorch

From www.educba.com

PyTorch Embedding Complete Guide on PyTorch Embedding

From medium.com

PyTorch Embedding Layer for Categorical Data by Amit Yadav Biased

From www.youtube.com

Encoding Categorical Values in Pandas for PyTorch (2.2) YouTube

From wandb.ai

Interpret any PyTorch Model Using W&B Embedding Projector embedding

From blog.csdn.net

[Literature notes] Entity Embeddings of Categorical Variables_pytorch for entity

From github.com

GitHub saamaresearch/CategoricalEmbeddingforHousePricesin

From stackoverflow.com

python Slow performance of PyTorch Categorical Stack Overflow

From debuggercafe.com

Text Classification using PyTorch

From discuss.pytorch.org

Predict a categorical variable and then embed it (onehot?) autograd

From jamesmccaffrey.wordpress.com

Anomaly Detection for Tabular Data Using a PyTorch Transformer with

From www.scaler.com

PyTorch Linear and PyTorch Embedding Layers Scaler Topics

From github.com

Embedding layer tensor shape · Issue 99268 · pytorch/pytorch · GitHub

From teddylee777.github.io

[pytorch] Text classification prediction using Tokenizing, Embedding + LSTM models, TeddyNote

From github.com

GitHub shaabhishek/gumbelsoftmaxpytorch categorical variational

From blog.csdn.net

PyTorch notes: torch.nn.Embedding_pytorch embedding weights, CSDN Blog

From hacksforai.blogspot.com

How to adopt Embeddings for Categorical features in Tabular Data using

From jamesmccaffrey.wordpress.com

pytorch_trans_num_embed_anom_demo James D. McCaffrey

From blog.acolyer.org

PyTorchBigGraph a largescale graph embedding system the morning paper

From towardsdatascience.com

pytorchwidedeep deep learning for tabular data by Javier Rodriguez

From www.developerload.com

[SOLVED] Faster way to do multiple embeddings in PyTorch? DeveloperLoad

From www.vedereai.com

Optimizing Production PyTorch Models’ Performance with Graph

From www.youtube.com

What are PyTorch Embeddings Layers (6.4) YouTube

From awesomeopensource.com

Zstgan Pytorch

From blog.csdn.net

Deep copy in pytorch_Embedding in deep CTR algorithms, with implementation examples in PyTorch and TF, CSDN Blog

From blog.csdn.net

"PyTorch Deep Learning Practice" Lesson 12 (Recurrent Neural Network, RNN), with Embedding_rnn pytorch code embedding, CSDN Blog

From clay-atlas.com

[PyTorch] Use "Embedding" Layer To Process Text ClayTechnology World

From datapro.blog

Pytorch Installation Guide A Comprehensive Guide with StepbyStep

From towardsdatascience.com

PyTorch Geometric Graph Embedding by Anuradha Wickramarachchi

From www.aritrasen.com

Deep Learning with Pytorch Text Generation LSTMs 3.3

From www.researchgate.net

Overview of the deep neural net with categorical embedding. Download

From www.youtube.com

[pytorch] Understanding Embedding and LSTM input/output tensor shapes and modeling, YouTube

From discuss.pytorch.org

How does nn.Embedding work? PyTorch Forums