Rectifier Neural Definition

What is a rectified linear unit? A rectified linear unit (ReLU) is a form of activation function commonly used in deep learning models; it is also known as the rectifier activation function. In essence, the function returns 0 if it receives a negative input and returns the input unchanged otherwise. The rectifier is among the most popular activation functions for deep neural networks, and it has transformed the landscape of neural network design through its functional simplicity and operational efficiency. Rectified linear units find applications in computer vision and speech recognition using deep neural nets, and in computational neuroscience. In this tutorial, you will discover the rectified linear activation function for deep learning neural networks.
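The rectifier's rule (0 for a negative input, the input itself otherwise) can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a library implementation: the names `relu` and `relu_all` are hypothetical and chosen only for this example.

```python
from typing import List


def relu(x: float) -> float:
    """Rectified linear unit: 0 for negative inputs, x otherwise."""
    # max(0, x) is the usual closed form of the rectifier.
    return max(0.0, x)


def relu_all(values: List[float]) -> List[float]:
    """Apply the rectifier element-wise to a list of pre-activations."""
    return [relu(v) for v in values]


if __name__ == "__main__":
    # Negative entries are clamped to 0; non-negative entries pass through.
    print(relu_all([-2.0, -0.5, 0.0, 1.5, 3.0]))
```

Deep learning frameworks typically provide an equivalent vectorised operation; the pure-Python version here is only meant to make the definition concrete.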

From www.scienceabc.com

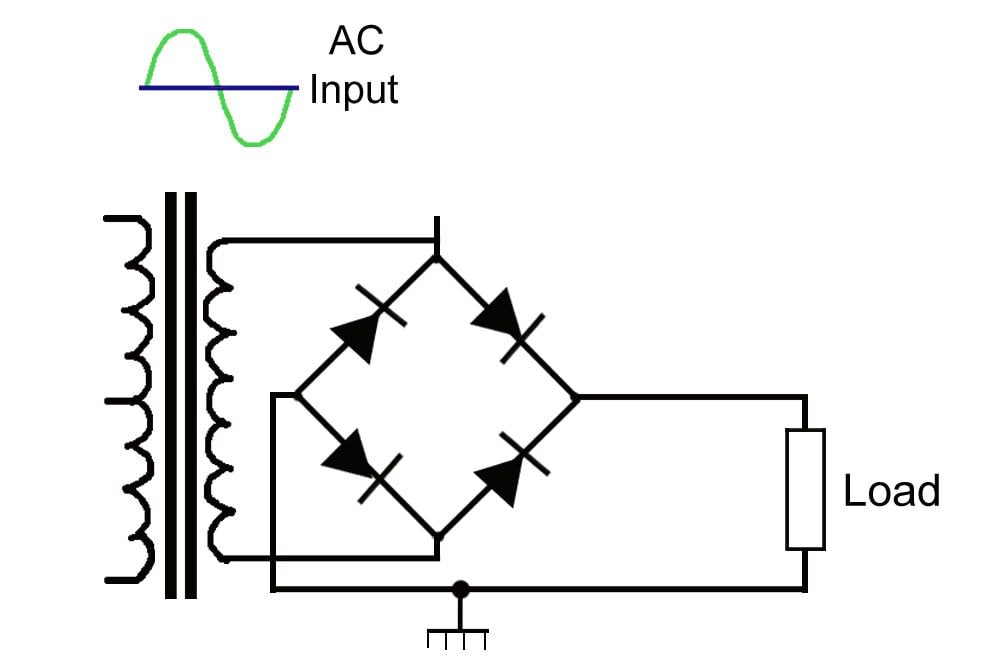

Rectifier What It Is? How Does It Work?

From www.researchgate.net

A neural network representation (the rectifier linear function in the

From www.etechnog.com

What is a Rectifier? Block Diagram, Working Principle ETechnoG

From how2electronics.com

Half Wave Rectifier Basics, Circuit, Working & Applications

From www.youtube.com

Rectifier definition of RECTIFIER YouTube

From www.collegesearch.in

Rectifier Definition, Types, Application, Uses and Working Principle

From electricalworkbook.com

Controlled Rectifier Definition, Classification, Applications

From www.wikiwand.com

Rectifier (neural networks) Wikiwand

From www.slideserve.com

PPT RECTIFICATION PowerPoint Presentation, free download ID4459485

From schematicutricles.z21.web.core.windows.net

Full Wave Rectifier Circuit Diagram Class 12

From www.semanticscholar.org

Figure 3 from Artificial Neural Networks for Control of a Grid

From www.semanticscholar.org

Rectifier (neural networks) Semantic Scholar

From www.electricaltechnology.org

What is a Rectifier? Types of Rectifiers and their Operation

From survival8.blogspot.com

survival8 Activation Functions in Neural Networks

From electronicslesson.com

Rectifier Definition, Types, Applications

From www.studocu.com

Rectifier Circuits [ Notes ] RECTIFIER CIRCUIT I. Definition A

From www.youtube.com

Critical initialisation for deep signal propagation in noisy rectifier

From www.slideserve.com

PPT Silicon Controlled Rectifiers PowerPoint Presentation, free

From circuitevaporicex4.z21.web.core.windows.net

Circuit Diagram Of A Full Wave Rectifier

From testbook.com

Rectifiers Definition, Working Principle, Types, Applications

From deepai.com

Rectifier Neural Network with a DualPathway Architecture for Image

From www.pinterest.com

Rectifier (neural networks) Wikipedia Networking, Wikipedia, Line chart

From www.mdpi.com

Electronics Free FullText A Novel SelfAdaptive Rectifier with

From www.slideteam.net

Rectifier Function In A Neural Network Training Ppt

From www.electricity-magnetism.org

What is a halfwave rectifier?

From www.electronics-lab.com

The Signal Diode

From jayabarumandiri.blogspot.com

What is Rectifier?

From www.geeksforgeeks.org

Rectifiers Definition, Working, Types, Circuits & Applications
