Multithreading Spark Jobs

If you're using Spark DataFrames and libraries (e.g. MLlib), your code will be parallelized and distributed natively by Spark. When a workload consists of many small, independent jobs, though, running them one after another can leave much of the cluster idle. The best approach to speeding up small jobs is to run multiple operations in parallel. This can be achieved either statically or … This is where we can use multiple threads to run each of these jobs. Delta Live Tables does this for you automatically.

This post covers a Spark job optimization technique that improves the performance of independently running queries. The concept involves using one or several job clusters to execute numerous jobs concurrently through multithreading. Inside a given Spark application (SparkContext instance), multiple parallel jobs can run simultaneously if they were submitted from separate threads. I create multiple threads using Python's threading module and submit multiple Spark jobs simultaneously. Python's multiprocessing library can also be used to run concurrent threads (through `multiprocessing.pool.ThreadPool`) and even perform operations with Spark DataFrames.

The scheduling mode should be set to FAIR. By default, Spark schedules jobs in FIFO order, so a long job at the head of the queue can delay the jobs behind it. This can be set using a Spark config property: `spark.scheduler.mode=FAIR`.
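A minimal sketch of the threading approach follows. It assumes PySpark and a driver that owns a single SparkSession. Each callable is started in its own `threading.Thread`, so each action it triggers is submitted as a separate job to the shared SparkContext. The session is created with `spark.scheduler.mode` set to `FAIR`. The helper name `run_in_threads`, the app name, and the two example queries are hypothetical illustrations, not part of any library API.

```python
import threading


def run_in_threads(jobs):
    """Run each zero-argument callable in its own thread; return results in order.

    In a Spark driver, each callable would trigger an action (count, collect,
    write, ...), so every thread submits its own job to the shared SparkContext.
    """
    results = [None] * len(jobs)
    errors = [None] * len(jobs)

    def worker(index, job):
        try:
            results[index] = job()
        except Exception as exc:  # record the failure so it is not silently lost
            errors[index] = exc

    threads = [
        threading.Thread(target=worker, args=(i, job)) for i, job in enumerate(jobs)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

    # Re-raise the first recorded failure (if any) in the calling thread.
    for exc in errors:
        if exc is not None:
            raise exc
    return results


def main():
    # Imported here so run_in_threads can be used without PySpark installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .appName("multithreaded-jobs")  # hypothetical application name
        # FAIR mode lets concurrently submitted jobs share executors instead of
        # waiting behind each other in FIFO order.
        .config("spark.scheduler.mode", "FAIR")
        .getOrCreate()
    )

    # Two hypothetical, independent queries; each action is a separate Spark job.
    jobs = [
        lambda: spark.range(10_000_000).filter("id % 2 = 0").count(),
        lambda: spark.range(5_000_000).selectExpr("sum(id) AS total").first()["total"],
    ]
    print(run_in_threads(jobs))
    spark.stop()
```

Call `main()` from a driver script to try it. `spark.scheduler.mode` is a core scheduler setting that is read when the SparkContext starts. If `getOrCreate()` returns a session that is already running, setting it on the builder will generally have no effect, so put it in the application's launch configuration instead.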

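The multiprocessing route can be sketched with `multiprocessing.pool.ThreadPool`. Despite living in the multiprocessing package, this class runs its workers as threads inside the driver process. That is why it can share one SparkSession, whereas separate worker processes could not share the driver's SparkContext. The helper `run_with_thread_pool` and the table names are hypothetical placeholders assumed for illustration.

```python
from multiprocessing.pool import ThreadPool


def run_with_thread_pool(items, func, max_workers=4):
    """Apply func to every item on a pool of threads; results keep input order.

    ThreadPool (from the multiprocessing package) uses threads, not processes,
    so every call runs inside the driver and can use the same SparkSession.
    """
    with ThreadPool(processes=max_workers) as pool:
        return pool.map(func, items)


def main():
    # Imported here so run_with_thread_pool can be used without PySpark installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .config("spark.scheduler.mode", "FAIR")
        .getOrCreate()
    )

    def row_count(table_name):
        # Each count() is submitted as its own Spark job from a pool thread.
        return table_name, spark.table(table_name).count()

    # Hypothetical table names; replace with tables that exist in your catalog.
    print(run_with_thread_pool(["sales", "customers", "orders"], row_count, max_workers=3))
    spark.stop()
```

Capping the pool with `max_workers` bounds how many jobs compete for the cluster at once. This is usually easier to tune than starting one unbounded thread per job.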
from study.sf.163.com


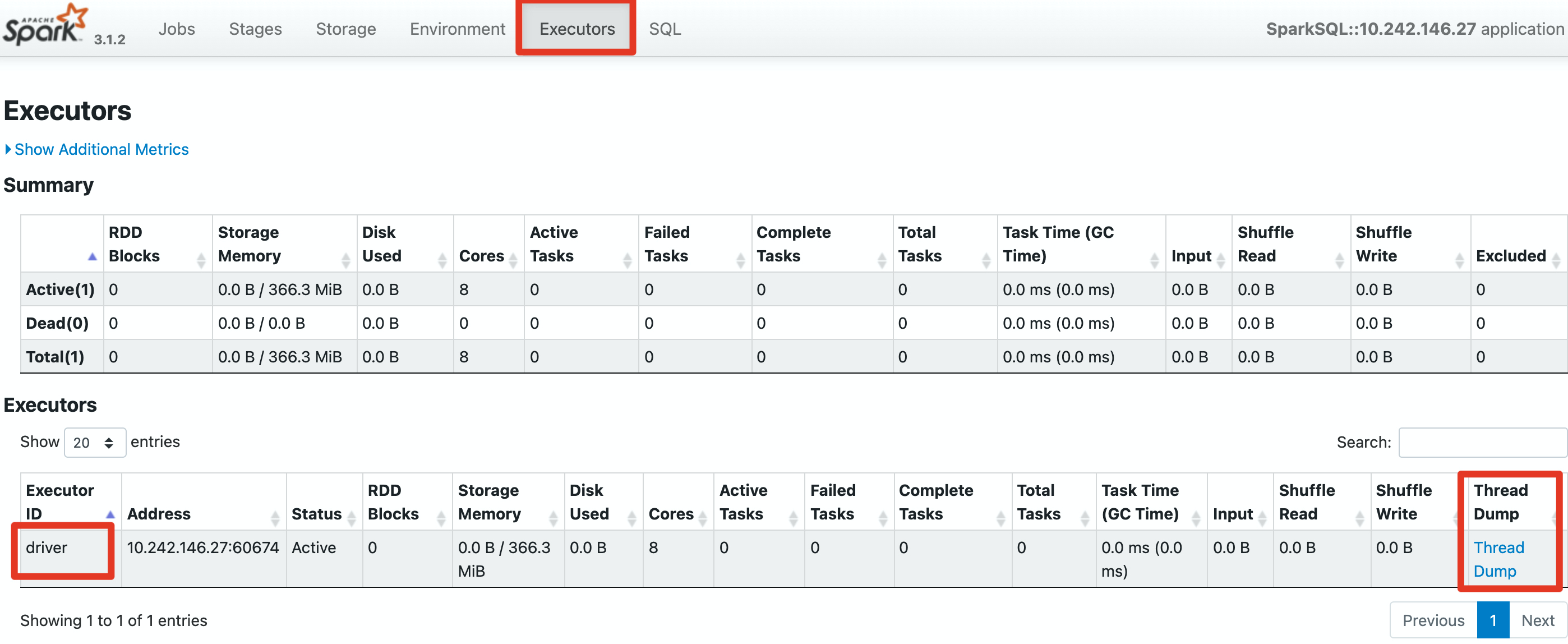

Spark FAQ: How to view the driver thread dump of a stuck Spark job (《有数中台FAQ》)
