What Is The Meaning Of RDD? In Apache Spark, RDD stands for Resilient Distributed Dataset, a core data structure for distributed computing. In PySpark, an RDD is a collection of elements; unlike a normal list, it is split into partitions that are distributed across a cluster. The resources collected here explain RDD features such as immutability, fault tolerance, lazy evaluation, and partitioning. They show how to create, transform, and cache RDDs, how to operate on them using transformations and actions, and how RDDs differ from distributed shared memory (DSM). They also cover the advantages and disadvantages of RDDs in distributed computing and data mining, when to use RDDs, and the similarities and differences of Spark RDD, DataFrame, and Dataset, three important abstractions for working with structured data in Spark Scala, including a comparison of their features and performance.
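
The features named above (immutability, lazy evaluation, partitioning, and recovery by recomputation) can be illustrated with a toy model. What follows is a minimal single-process sketch in plain Python, not PySpark: `ToyRDD` and its internals are hypothetical names chosen for illustration, and only the method names (`parallelize`, `map`, `filter`, `collect`, `count`) are borrowed from the real API. It assumes that "fault tolerance" can be approximated by recomputing one partition from its recorded chain of transformations.

```python
class ToyRDD:
    """A toy, single-process stand-in for an RDD (hypothetical, NOT the PySpark API)."""

    def __init__(self, partitions, lineage=()):
        # Immutability: source data is frozen into tuples and never modified.
        self._partitions = tuple(tuple(p) for p in partitions)
        # Lineage: the ordered per-partition steps that have been requested so far.
        self._lineage = tuple(lineage)

    @classmethod
    def parallelize(cls, data, num_partitions=2):
        # Partitioning: split the input into contiguous slices.
        data = list(data)
        size = -(-len(data) // num_partitions)  # ceiling division
        return cls([data[i * size:(i + 1) * size] for i in range(num_partitions)])

    # Transformations return a NEW ToyRDD and do no work yet (lazy evaluation).
    def map(self, f):
        step = lambda part: [f(x) for x in part]
        return ToyRDD(self._partitions, self._lineage + (step,))

    def filter(self, pred):
        step = lambda part: [x for x in part if pred(x)]
        return ToyRDD(self._partitions, self._lineage + (step,))

    def _compute(self, index):
        # Rebuild one partition from its source slice by replaying the lineage.
        # In this toy model, a "lost" partition is recovered by calling this again.
        part = list(self._partitions[index])
        for step in self._lineage:
            part = step(part)
        return part

    # Actions trigger the actual computation.
    def collect(self):
        out = []
        for i in range(len(self._partitions)):
            out.extend(self._compute(i))
        return out

    def count(self):
        return sum(len(self._compute(i)) for i in range(len(self._partitions)))


calls = []  # records when the mapped function actually runs


def square(x):
    calls.append(x)
    return x * x


numbers = ToyRDD.parallelize(range(1, 7), num_partitions=2)
even_squares = numbers.map(square).filter(lambda v: v % 2 == 0)

print(calls)                   # → [] (transformations alone ran nothing)
print(even_squares.collect())  # → [4, 16, 36]
print(numbers.collect())       # → [1, 2, 3, 4, 5, 6] (the original is unchanged)
```

The design choice worth noting is that each transformation stores a function rather than a result, so nothing is computed until an action such as `collect` is called. A real Spark RDD additionally distributes partitions across executors and can cache computed results, which this sketch does not attempt.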

From erikerlandson.github.io


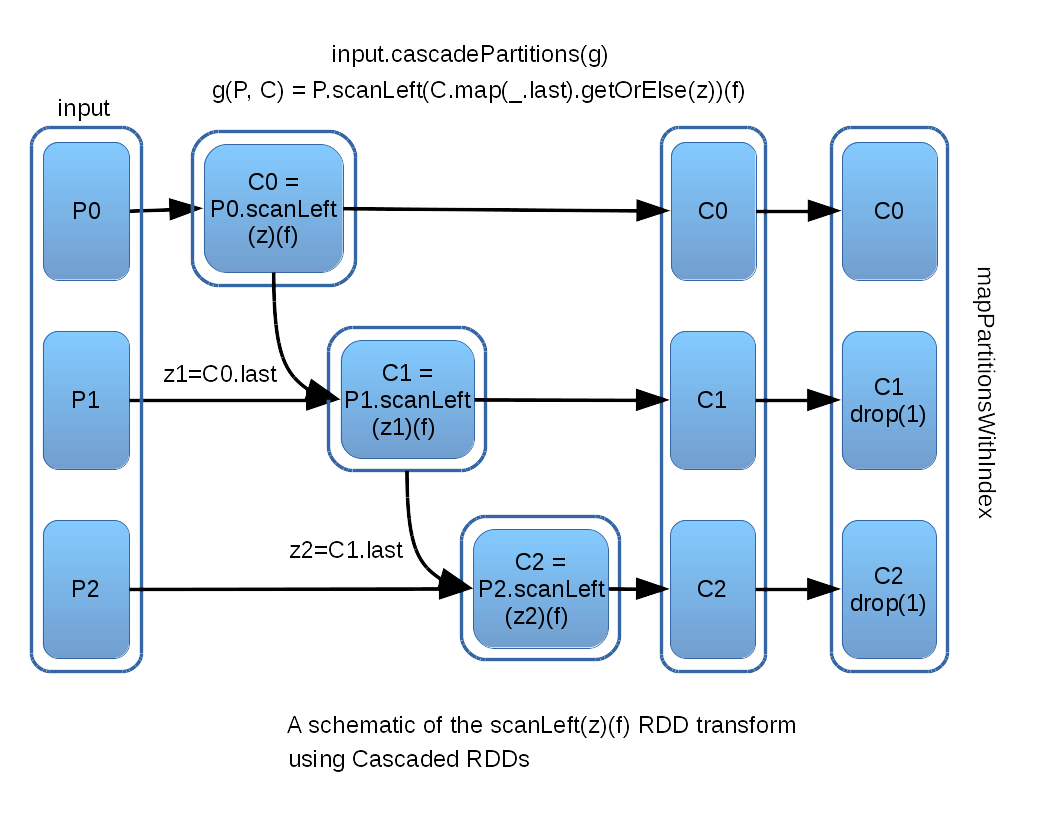

Implementing an RDD scanLeft Transform With Cascade RDDs tool monkey


From www.slideserve.com

PPT Regression Discontinuity Design PowerPoint Presentation, free

From www.slideserve.com

PPT Radiological Dispersion Devices and Nuclear Weapons An Overview

From data-flair.training

Spark RDD Introduction, Features & Operations of RDD DataFlair

From sparkbyexamples.com

PySpark RDD Tutorial Learn with Examples Spark By {Examples}

From www.turing.com

Resilient Distribution Dataset Immutability in Apache Spark

From data-flair.training

How to the Limitations of RDD in Apache Spark? DataFlair

From abs-tudelft.github.io

Resilient Distributed Datasets for Big Data Lab Manual

From oakwood.cuhkemba.net

11 Shining Features of Spark RDD You Must Know DataFlair

From sample.solutions

RDD Sample Generation Sample Solutions

From www.cloudduggu.com

Apache Spark RDD Introduction Tutorial CloudDuggu

From www.hadoopinrealworld.com

What is RDD? Hadoop In Real World

From medium.zenika.com

A comparison between RDD, DataFrame and Dataset in Spark from a

From stackoverflow.com

apache spark What does the number meaning after the rdd Stack Overflow

From leverageedu.com

What is the Full Form of RDD? Leverage Edu

From www.educba.com

What is RDD? Comprehensive Guide to RDD with Advantages

From slideplayer.com

The Regression Discontinuity Design ppt download

From www.analyticsvidhya.com

Create RDD in Apache Spark using Pyspark Analytics Vidhya

From www.prathapkudupublog.com

Snippets Lineage of RDD

From japaneseclass.jp

RDD RDD JapaneseClass.jp

From stackoverflow.com

apache spark What does the number meaning after the rdd Stack Overflow

From japaneseclass.jp

Images of RDD JapaneseClass.jp

From www.pdfprof.com

ada spark tutorial

From intellipaat.com

What is RDD in Spark Learn about spark RDD Intellipaat

From erikerlandson.github.io

Deferring Spark Actions to Lazy Transforms With the Promise RDD tool

From andr-robot.github.io

Differences Between RDD, DataFrame, and DataSet Alpha Carpe diem

From www.simplilearn.com

RDDs in Spark Tutorial Simplilearn

From www.educba.com

What is RDD? How It Works Skill & Scope Features & Operations

From www.javatpoint.com

PySpark RDD javatpoint

From www.reddit.com

What’s a ‘Generated’ type transaction? I received a little amount of

From www.researchgate.net

RDD results (visual), Note Dots represent means of grouped data into

From www.hadoopinrealworld.com

What is RDD? Hadoop In Real World

From erikerlandson.github.io

Implementing an RDD scanLeft Transform With Cascade RDDs tool monkey

From www.interviewbit.com

Top PySpark Interview Questions and Answers (2024) InterviewBit

From www.slideserve.com

PPT Regression Discontinuity Design PowerPoint Presentation, free

From intellipaat.com

What is RDD in Spark Learn about spark RDD Intellipaat