Airflow Aws_Access_Key_Id

We will cover topics such as setting up an S3 bucket, configuring an Airflow connection to S3, creating a Python task to access the API, and creating an Airflow DAG to upload data to S3. Then, we will dive into how to use Airflow to download data from an API and upload it to S3.

When configuring the AWS connection, specify the AWS access key ID and, in the Password field (optional), the AWS secret access key. The Extra field (optional) takes extra parameters, as a JSON dictionary, that can be used in the AWS connection. Alternatively, in the Extra field, input JSON with AWS credentials and region, e.g., {aws_access_key_id: … To use an IAM instance profile, create an “empty” connection, i.e. one with no AWS access key ID or AWS secret access key specified, or aws://.

Learn how to use the secret key for the Apache Airflow connection (myconn) on this page using the sample code at using a secret key in AWS. To set up the GitHub Actions to automatically sync our dags folder containing the actual DAG code to S3, we can use the … To achieve this I am using the GCP Composer (Airflow) service, where I am scheduling this rsync operation to sync files.
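The connection fields described above can be sketched in Python as follows. This is a minimal illustration, not a definitive setup: it assumes an Airflow version that accepts JSON-formatted connections in `AIRFLOW_CONN_<CONN_ID>` environment variables, and the helper `build_aws_connection`, the connection id `aws_instance_profile`, the region, and the credential values are all hypothetical placeholders. The access key ID goes in the login field, the secret access key in the password field, and the region in the extra dictionary; calling the helper with no arguments gives the “empty” connection used with an IAM instance profile.

```python
import json
import os


def build_aws_connection(access_key_id=None, secret_access_key=None, region_name=None):
    """Return a JSON string describing an Airflow connection of type 'aws'.

    Hypothetical helper for illustration: fields left as None are omitted,
    so calling it with no arguments yields the "empty" connection.
    """
    conn = {"conn_type": "aws"}
    if access_key_id:
        conn["login"] = access_key_id          # AWS access key ID
    if secret_access_key:
        conn["password"] = secret_access_key   # AWS secret access key
    if region_name:
        conn["extra"] = {"region_name": region_name}  # extra parameters as a JSON dictionary
    return json.dumps(conn)


# Connection with explicit credentials (placeholder values, never commit real keys).
os.environ["AIRFLOW_CONN_AWS_DEFAULT"] = build_aws_connection(
    access_key_id="EXAMPLE_ACCESS_KEY_ID",
    secret_access_key="EXAMPLE_SECRET_ACCESS_KEY",
    region_name="us-east-1",
)

# "Empty" connection: no key ID or secret, so credentials are expected to come
# from the environment, e.g. an IAM instance profile.
os.environ["AIRFLOW_CONN_AWS_INSTANCE_PROFILE"] = build_aws_connection()
```

Keeping the secret out of the Extra JSON and in the password field means it is treated like any other connection password; in practice the values would come from a secrets backend rather than being written in code.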

From www.filestash.app

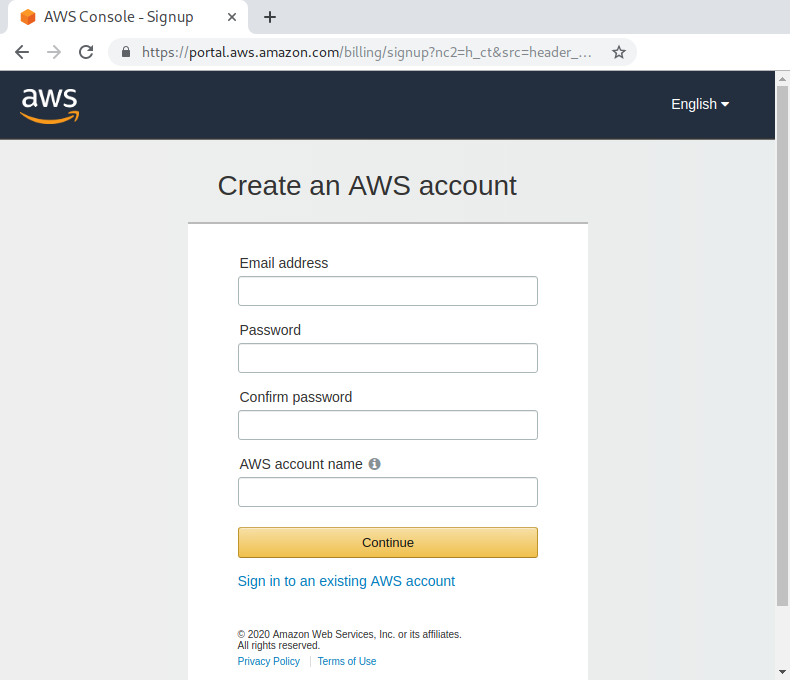

How to create your AWS access Key ID and AWS Secret Access key

From devocean.sk.com

Apache Airflow for Data Science — How to Upload Files to Amazon S3

From speakerdeck.com

An attacker’s guide to AWS Access Keys Speaker Deck

From laptrinhx.com

How to create an Amazon S3 Bucket and AWS Access Key ID and Secret

From stackoverflow.com

access keys How do I get AWS_ACCESS_KEY_ID for Amazon? Stack Overflow

From nerdyelectronics.com

Create AWS Access key ID and secret access key NerdyElectronics

From k21academy.com

How To Create Access Keys And Secret Keys In AWS

From www.twingate.com

Simple & Secure Access to Airflow on AWS Twingate

From www.youtube.com

How to get AWS access key and secret key id for s3 YouTube

From code2care.org

Configure AWS Access ID and Secret Keys using CLI on Mac

From mattsosna.com

AWS Essentials for Data Science 2. Storage Matt Sosna

From www.linkedin.com

AWS IAM Access keys rotation using Lambda function

From s3browser.com

Access Key Id and Secret Access Key. How to retrieve your AWS Access

From www.msp360.com

How to Get AWS Access Key ID and Secret Access Key

From www.decodingdevops.com

How To Create AWS Access Key and Secret Key DecodingDevops

From paladincloud.io

How to rotate keys with AWS KMS Paladin Cloud

From mail.codejava.net

How to Generate AWS Access Key ID and Secret Access Key

From support.kochava.com

AWS Access and Secret Keys

From www.scaler.com

AWS Access Key Scaler Topics

From blog.gurjot.dev

AWS Access Keys

From www.youtube.com

How to get AWS access key and secret key id YouTube

From www.youtube.com

How to Create AWS Access Key ID and Secret Access Key AWS Access Keys

From www.youtube.com

Authenticating to AWS Services using Access Keys YouTube

From blog.csdn.net

How to get the AWS Access Key ID and Secret Access Key (Unable to find credentials

From awsteele.com

AWS Access Key ID formats Aidan Steele’s blog (usually about AWS)

From orca.security

AWS Access Keys Cloud Risk Encyclopedia Orca Security

From www.youtube.com

How to Rotate Access Keys by AWS CLI How to Create AWS Access Key ID

From www.cloudockit.com

Cloudockit AWS Authentication Using Access Keys