PySpark Reduce Example

Spark's RDD reduce() is an aggregate action used to calculate the min, max, and total of the elements in a dataset. In this tutorial, I will explain RDD reduce() with Python examples; the same action is also available in the Java API. In PySpark its signature is `reduce(f: Callable[[T, T], T]) -> T`. It reduces the elements of the RDD using the specified commutative and associative binary operator. In the simplest case, given `rdd = sc.parallelize([1, 2, 3, 4, 5])`, you pass a function that adds two elements: `rdd.reduce(lambda a, b: a + b)` returns `15`.

To summarize, reduce(), excluding driver-side processing, uses exactly the same mechanisms as ordinary transformations. Each partition is reduced in parallel on the executors, and only the merge of the per-partition results runs on the driver. If there are many partitions or the reducing function is expensive, treeReduce() can distribute that final merge across the executors in a multi-level tree. See "Understanding treeReduce() in Spark".

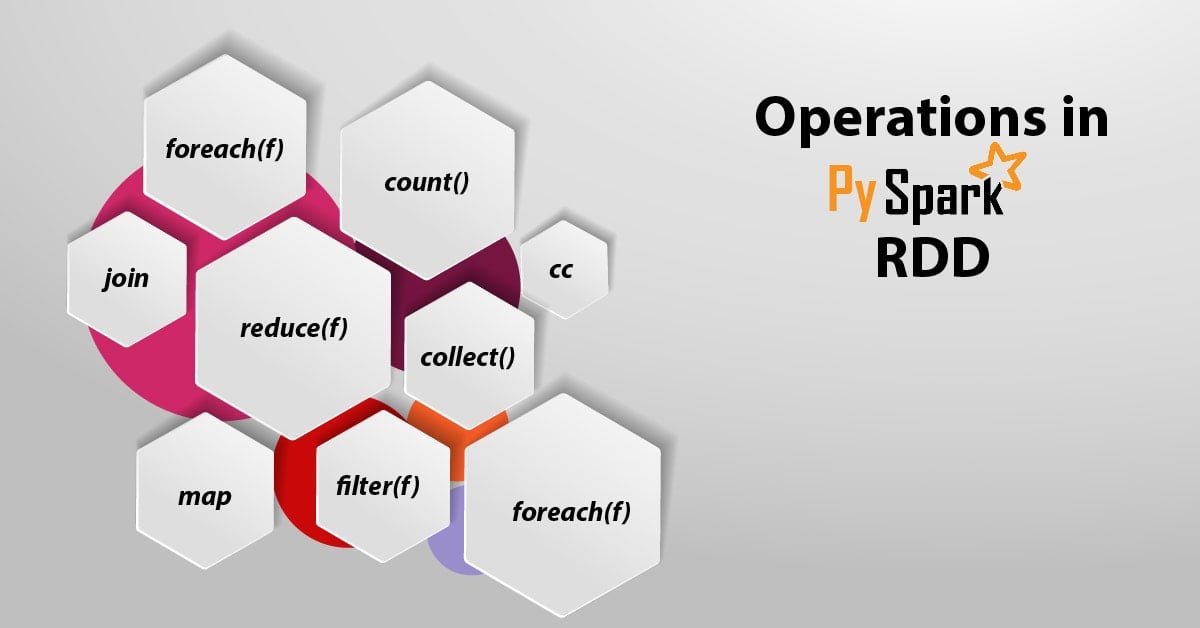

PySpark RDD With Operations and Commands (DataFlair, data-flair.training)

PySpark reduceByKey() is a transformation that merges the values of each key using an associative reduce function on a pair RDD. It is a wide transformation, because it shuffles data across partitions so that all values for a key end up together. Consider the pair RDD `x = sc.parallelize([("a", 1), ("b", 1), ("a", 4), ("c", 7)])`. Here `x.reduceByKey(lambda a, b: a + b)` produces `("a", 5)`, `("b", 1)`, and `("c", 7)`, in no guaranteed order.

Python's reduce() from the functools library can also be used to repeatedly apply operations to Spark DataFrames. Unlike RDD.reduce(), it runs in the driver program and only builds up a query plan; Spark executes the combined plan when an action is called. One such trick is to stack any number of DataFrames into a single DataFrame.
