Time Distributed Layer Explained

keras.layers.TimeDistributed(layer, **kwargs) is a wrapper layer in Keras that applies the wrapped layer to every temporal slice (time step) of an input. The input should be at least 3D, and the dimension at index one is treated as the temporal dimension. Because the wrapped layer is applied to each time step separately, a Dense layer inside TimeDistributed sees a single time step at a time rather than the whole sequence. That means that instead of building a separate input branch for each time step, you use one layer for every input. A common pattern is to place it after an LSTM that returns sequences: TimeDistributed applies the same Dense layer (same weights) to the LSTM's outputs, one time step at a time. This makes the layer very useful for time series data and video frames.

💡 The power of the TimeDistributed layer is that, wherever it is placed, before or after an LSTM, every time step of the data undergoes the same treatment.
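The weight sharing described above can be checked with a small sketch. It assumes Keras is installed and importable as keras; the shapes, the LSTM size, and every variable name are hypothetical choices for illustration, not taken from any of the sources listed on this page. The example wraps a Dense layer in TimeDistributed after an LSTM, then recomputes the result by hand with the Dense layer's single kernel and bias.

```python
import numpy as np
import keras
from keras import layers

# Hypothetical sizes, chosen only for illustration.
batch, timesteps, features, units = 2, 5, 8, 3

inputs = keras.Input(shape=(timesteps, features))
# return_sequences=True keeps one output vector per time step: (batch, 5, 16).
hidden = layers.LSTM(16, return_sequences=True)(inputs)
# One Dense layer, wrapped so that it is applied to every time step.
dense = layers.Dense(units)
outputs = layers.TimeDistributed(dense)(hidden)
# Expose the LSTM output as well, so the manual check below can reuse it.
model = keras.Model(inputs, [hidden, outputs])

x = np.random.rand(batch, timesteps, features).astype("float32")
h, y = model.predict(x, verbose=0)
print(y.shape)  # (2, 5, 3)

# Manual check: the same kernel and bias are used at each time step.
kernel, bias = dense.get_weights()
manual = np.stack(
    [h[:, t, :] @ kernel + bias for t in range(timesteps)], axis=1
)
print(np.allclose(y, manual, atol=1e-4))
```

The Dense layer holds a single kernel of shape (16, 3) however many time steps the input has, which is what "same weights" means here.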

From fyosjxcyr.blob.core.windows.net

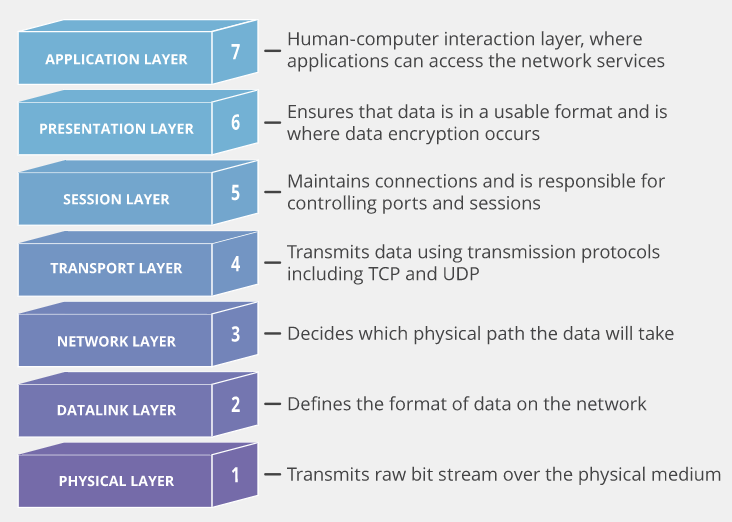

Network Layers Computer Science at Trinidad Bautista blog

From www.researchgate.net

Detailed architecture with visualization of timedistributed layer

From www.researchgate.net

Chemical reaction time distributions for a system with N = 2 and M = 1

From www.researchgate.net

Time Distributed Stacked LSTM Model Download Scientific Diagram

From www.researchgate.net

The latitude‐time distribution by tensor and averaging

From www.researchgate.net

The architecture of the utilized neural network. The input sequence

From www.pythonfixing.com

[FIXED] How to implement timedistributed dense (TDD) layer in PyTorch

From www.researchgate.net

DeepFake Video Detection A TimeDistributed Approach Request PDF

From www.slideshare.net

What Distribution Does the Time

From exophnret.blob.core.windows.net

Time Distributed Lstm at John Carroll blog

From www.researchgate.net

Realtime Distributed architecture Download Scientific Diagram

From www.researchgate.net

A basic framework of 2D convolution layer for handling time series

From link.springer.com

Correction to Speech emotion recognition using time distributed 2D

From stackoverflow.com

keras Confused about how to implement timedistributed LSTM + LSTM

From github.com

GitHub riyaj8888/TimeDistributedLayerwithMultiHeadAttentionfor

From www.researchgate.net

Structure of Time Distributed CNN model Download Scientific Diagram

From www.researchgate.net

The structure of time distributed convolutional gated recurrent unit

From www.nokia.com

Providing accurate time synchronization for financial trading Nokia

From towardsdatascience.com

Difference between Local Response Normalization and Batch Normalization

From www.researchgate.net

Block diagram of the line based time distributed architecture

From www.ionos.ca

Distributed computing functions, advantages, types, and applications

From medium.com

How to work with Time Distributed data in a neural network by Patrice

From zhuanlan.zhihu.com

[Mini tutorial] How to correctly use the TimeDistributed layer with LSTM networks (Zhihu)

From www.slideserve.com

PPT UTCN PowerPoint Presentation, free download ID1332107

From www.researchgate.net

Plots for Single Time distributed layer Model Download Scientific Diagram

From orangematter.solarwinds.com

What Is a Distributed System? Orange Matter

From www.youtube.com

PYTHON What is the role of TimeDistributed layer in Keras? YouTube

From builtin.com

Fully Connected Layer vs Convolutional Layer Explained Built In

From www.altoros.com

Distributed TensorFlow and Classification of Time Series Data Using
