Neural Network Stopping Criteria

The general set of strategies against overfitting is called regularization, and early stopping is one of them. Early stopping means halting training once the validation loss starts to increase (in other words, once validation accuracy starts to decrease). A validation set can be used to detect when overfitting begins during supervised training of a neural network. As a regularization technique for deep neural networks, early stopping ends training when parameter updates no longer yield improvements on the validation data. Keras provides an API for adding early stopping to deep learning models that would otherwise overfit, and research papers continue to propose new stopping criteria for the learning process of neural networks.
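The stopping rule described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than any particular library's implementation: the names `EarlyStopper`, `patience`, `min_delta`, and `run_with_early_stopping` are hypothetical, and the validation losses are supplied as a ready-made list instead of being computed by a real training loop.

```python
from typing import Sequence, Tuple


class EarlyStopper:
    """Patience-based early stopping on a validation loss (illustrative helper)."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0) -> None:
        self.patience = patience        # epochs to wait without improvement
        self.min_delta = min_delta      # smallest decrease that counts as improvement
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def should_stop(self, epoch: int, val_loss: float) -> bool:
        """Record this epoch's validation loss and report whether to stop."""
        if val_loss < self.best_loss - self.min_delta:
            # Validation loss improved: remember it and reset the counter.
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            # No improvement: one more epoch of possible overfitting.
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def run_with_early_stopping(val_losses: Sequence[float], patience: int = 3) -> Tuple[int, int]:
    """Return (epoch at which training stopped, epoch with the best loss)."""
    stopper = EarlyStopper(patience=patience)
    for epoch, loss in enumerate(val_losses):
        if stopper.should_stop(epoch, loss):
            return epoch, stopper.best_epoch
    return len(val_losses) - 1, stopper.best_epoch


if __name__ == "__main__":
    # Hypothetical per-epoch validation losses: improving, then rising.
    losses = [1.0, 0.8, 0.6, 0.65, 0.7, 0.75, 0.5]
    stopped_at, best = run_with_early_stopping(losses, patience=3)
    print(f"stopped at epoch {stopped_at}, best epoch {best}")
```

In a real training loop one would typically also save the model weights at the best epoch and restore them after stopping. The `patience` value trades off robustness to noisy validation curves against wasted epochs.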

From www.slideserve.com

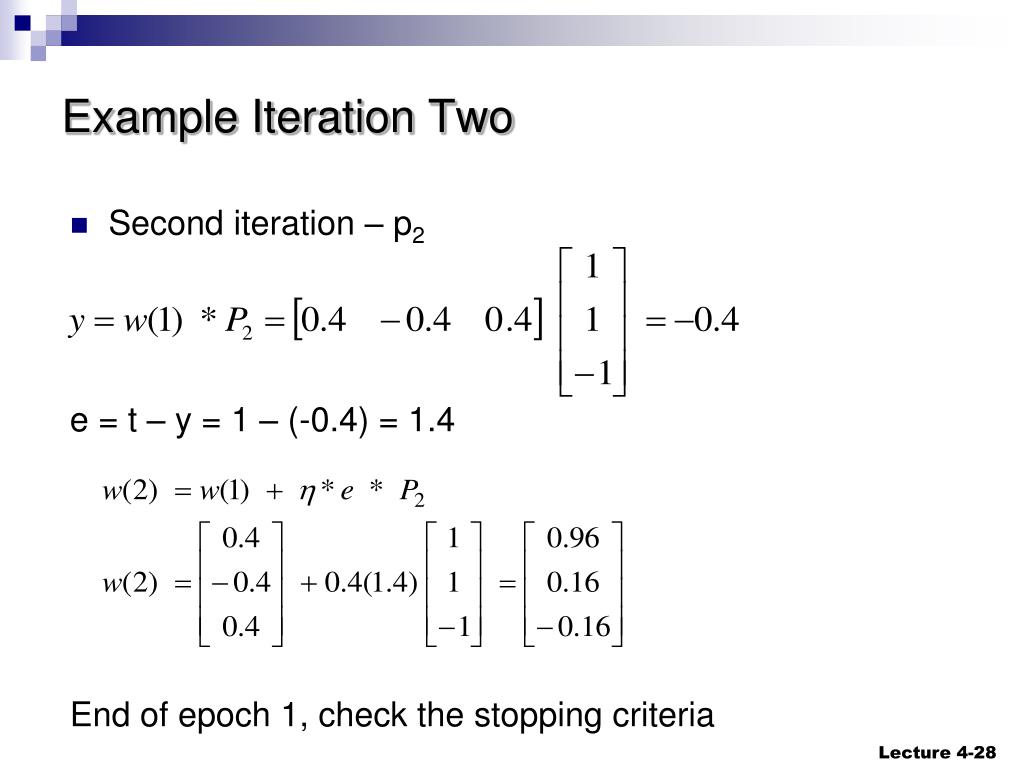

PPT Neural Networks Lecture 4 Least Mean Square algorithm for Single

From www.slideserve.com

PPT Neural Networks Lecture 4 Least Mean Square algorithm for Single

From www.semanticscholar.org

Figure 4 from Early Stopping Criteria for Levenberg-Marquardt Based

From jameskle.com

Neural Networks 101 — James Le

From www.semanticscholar.org

[PDF] An Adaptive Stopping Criterion for Backpropagation Learning in

From www.researchgate.net

(PDF) Iterative Decoding of K/N Convolutional Codes based on Recurrent

From www.semanticscholar.org

Figure 1 from Comparison of Early Stopping Criteria for

From www.semanticscholar.org

Figure 2 from A General Rate K/N Convolutional Decoder Based on Neural

From www.ai2news.com

Improved Precision and Recall Metric for Assessing Generative Models AI牛丝

From www.researchgate.net

Performance of neural network.

From www.researchgate.net

Stopping () criteria met by algorithm for different test cases

From www.semanticscholar.org

Figure 4 from Early Stopping Criteria for Levenberg-Marquardt Based

From www.researchgate.net

How to determine HMM inference stopping criteria.

From www.slideserve.com

PPT Neural networks PowerPoint Presentation, free download ID3337378

From www.mlfactor.com

Chapter 7 Neural networks Machine Learning for Factor Investing

From www.slideserve.com

PPT Neural Networks Lecture 4 Least Mean Square algorithm for Single

From www.slideserve.com

PPT Optimizing Neural Network Learning Speed PowerPoint Presentation

From www.researchgate.net

Stopping criteria. In most cases, the FPE criterion can provide the

From www.slideserve.com

PPT Artificial Neural Network PowerPoint Presentation, free download

From www.slideserve.com

PPT Neural networks PowerPoint Presentation, free download ID3337378

From www.researchgate.net

Stopping criteria for a neural network using a test set not used to

From www.youtube.com

Early Stopping Criteria in Neural Network (CSE425) YouTube

From www.researchgate.net

(PDF) Early Stopping Criteria for Levenberg-Marquardt Based Neural

From www.semanticscholar.org

Figure 1 from An Adaptive Stopping Criterion for Backpropagation

From www.researchgate.net

Algorithm 1 NRVQA‐ELM with stop criteria (NRVQA‐ELMsc)

From www.researchgate.net

Schematic Representation of Neural Circuitry Mediating ‘‘Waiting’’ and

From www.researchgate.net

Process for stopping the training of a Neural Network of the study

From www.semanticscholar.org

Figure 1 from Iterative Decoding of K/N Convolutional Codes based on

From www.semanticscholar.org

Figure 4 from A General Rate K/N Convolutional Decoder Based on Neural

From www.semanticscholar.org

Figure 7 from A General Rate K/N Convolutional Decoder Based on Neural

From www.researchgate.net

Algorithm for Stopping Criteria

From www.aiproblog.com

How to Stop Training Deep Neural Networks At the Right Time Using Early

From www.semanticscholar.org

Figure 1 from A General Rate K/N Convolutional Decoder Based on Neural

From stackoverflow.com

deep learning Stopping a neural network/LSTM at optimum result/epoch

From www.researchgate.net

Process for stopping the training of a Neural Network of the study

From www.slideserve.com

PPT Stopping Criteria PowerPoint Presentation, free download ID3302535