Torch.einsum Explained

torch.einsum() is a versatile and powerful tool for expressing complex tensor operations in PyTorch. Its signature is torch.einsum(equation, *operands) → Tensor: it sums the product of the elements of the input operands along the dimensions named in the equation string, which is written in a notation based on the Einstein summation convention. With Einstein notation and the einsum function, we can calculate with vectors and matrices using only a single function. Since the description of einsum is skimpy in the torch documentation, I decided to write this post to document, compare and contrast. In this article, we provide code using einsum and visualizations for several tensor operations, thinking of these operations as tensor compressions. I will use PyTorch's einsum function in the upcoming code, but you may use NumPy's or the one from TensorFlow; the equation-string notation is shared, so the examples carry over with little change.
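As a minimal sketch of the "single function" idea above, the snippet below expresses a few common vector and matrix operations with torch.einsum. The tensor names (`a`, `b`, `A`, `B`) and their values are made up for illustration and do not come from the original post.

```python
import torch

# Illustrative inputs (hypothetical values, chosen only for this sketch).
a = torch.tensor([1.0, 2.0, 3.0])
b = torch.tensor([4.0, 5.0, 6.0])
A = torch.arange(6.0).reshape(2, 3)   # shape (2, 3)
B = torch.arange(12.0).reshape(3, 4)  # shape (3, 4)

# Dot product: index i appears in both inputs but not in the output,
# so it is summed over.
dot = torch.einsum("i,i->", a, b)

# Outer product: no index is dropped, so nothing is summed.
outer = torch.einsum("i,j->ij", a, b)

# Matrix-vector product: j is summed, i is kept.
matvec = torch.einsum("ij,j->i", A, a)

# Matrix multiplication: the shared index j is summed.
matmul = torch.einsum("ij,jk->ik", A, B)

# Transpose: the same indices, listed in a different output order.
transposed = torch.einsum("ij->ji", A)

print(dot.item(), outer.shape, matvec.shape, matmul.shape, transposed.shape)
```

In each equation, an index that appears on the left of `->` but not on the right is summed away, and the order of the indices on the right fixes the layout of the result.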

From blog.csdn.net

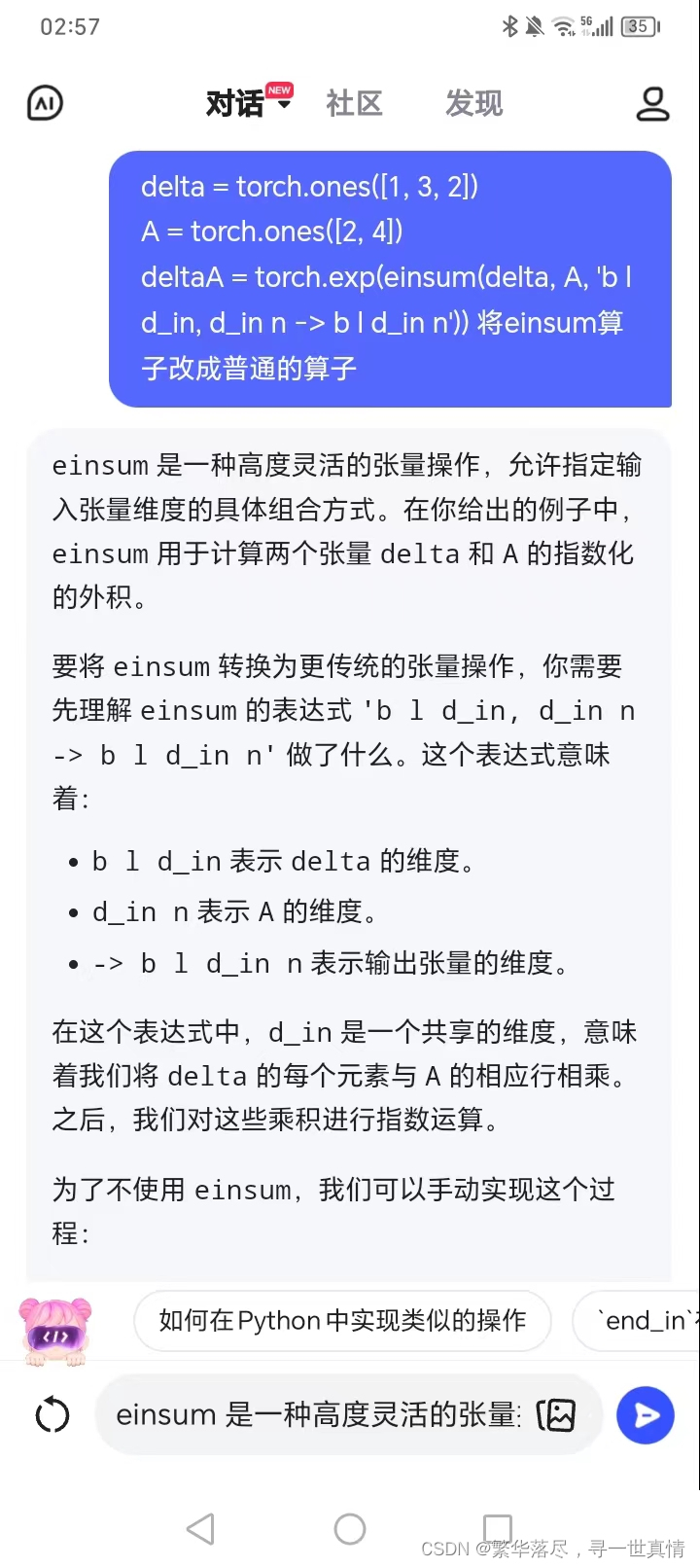

[Deep learning model porting] Replacing torch.einsum with a combination of ordinary torch operators (CSDN blog)

From blog.csdn.net

torch.einsum(): kvs = torch.einsum("lhm,lhd->hmd", ks, vs) (CSDN blog)

From github.com

GitHub hhaoyan/opteinsumtorch: Memory-efficient optimum einsum

From github.com

Could torch.einsum gain speed boost? · Issue 394 · NVIDIA/apex · GitHub

From www.zhihu.com

PyTorch: comparing torch.einsum and torch.matmul, which is the better choice? (Zhihu)

From zanote.net

[PyTorch] A thorough guide to the arguments and usage of torch.einsum: using the Einstein summation convention to write complex tensor operations as short strings

From hxetkiwaz.blob.core.windows.net

Torch Einsum Speed at Cornelius Dixon blog

From github.com

torch.einsum 400x slower than numpy.einsum on a simple contraction

From morioh.com

Einsum Is All You Need: NumPy, PyTorch and TensorFlow

From gitcode.csdn.net

[Analysis] How to learn torch.einsum() elegantly (ViatorSun, GitCode open-source community)

From blog.csdn.net

The einops library: usage of rearrange, repeat, einsum, and reduce (from einops import rearrange)

From baekyeongmin.github.io

Using Einsum (Yeongmin's Blog)

From github.com

When I use opt_einsum optimizes torch.einum, the running time after

From discuss.pytorch.org

Speed difference in torch.einsum and torch.bmm when adding an axis

From github.com

[pytorch] torch.einsum processes ellipsis differently from NumPy

From github.com

Link to `torch.einsum` in `torch.tensordot` · Issue 50802 · pytorch

From github.com

Support sublist arguments for torch.einsum · Issue 21412 · pytorch

From www.cnblogs.com

Notes: Einsum Is All You Need: Einstein Summation in Deep Learning (Rogn)

From github.com

The speed of `torch.einsum` and `torch.matmul` when using `fp16` is

From github.com

Support broadcasting in einsum · Issue 30194 · pytorch/pytorch · GitHub

From www.ppmy.cn

torch.einsum() usage notes

From blog.csdn.net

torch.einsum explained in detail (CSDN blog)

From github.com

Optimize torch.einsum · Issue 60295 · pytorch/pytorch · GitHub
