Spark.yarn.access.hadoop Filesystem. Hadoop YARN is a component of Hadoop. One of the modules of Hadoop is HDFS (the Hadoop Distributed File System), which handles data redundancy and scalability across nodes [1]. The Hadoop FileSystem API provides a way to interact with HDFS for such operations. We can take a look at the documentation of org.apache.hadoop.fs.FileSystem: it is an abstract class in Java which … Basically, what you want is to make sure that you have followed the DistCp between HA clusters setup, that both … In spark.properties you probably want some settings that look like this: …
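The settings referred to above are not shown. As a minimal sketch, assuming two HA HDFS nameservices with hypothetical IDs (`nsA`, `nsB`) and hypothetical NameNode hosts, the Python helper below assembles properties of the kind usually involved: the standard HDFS HA client keys (`dfs.nameservices`, `dfs.ha.namenodes.*`, `dfs.namenode.rpc-address.*`, `dfs.client.failover.proxy.provider.*`) passed through Spark's `spark.hadoop.` prefix, plus `spark.yarn.access.hadoopFileSystems` (renamed `spark.kerberos.access.hadoopFileSystems` in Spark 3.0), which on a Kerberos-secured cluster lists the additional filesystems Spark should obtain delegation tokens for. The function names are illustrative, and the exact keys a given cluster needs may differ.

```python
# Sketch only: nameservice IDs, NameNode IDs and host names are hypothetical.
FAILOVER_PROVIDER = (
    "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"
)


def cross_cluster_spark_properties(nameservices, spark_major_version=2):
    """Build Spark properties for reaching several HA HDFS nameservices.

    nameservices maps a nameservice ID to a dict of
    NameNode ID -> "host:port" RPC address.
    """
    # Spark 3.0 renamed the delegation-token property.
    if spark_major_version >= 3:
        access_key = "spark.kerberos.access.hadoopFileSystems"
    else:
        access_key = "spark.yarn.access.hadoopFileSystems"

    props = {
        access_key: ",".join(f"hdfs://{ns}" for ns in nameservices),
        # The spark.hadoop. prefix copies a key into the Hadoop Configuration.
        "spark.hadoop.dfs.nameservices": ",".join(nameservices),
    }
    for ns, namenodes in nameservices.items():
        props[f"spark.hadoop.dfs.ha.namenodes.{ns}"] = ",".join(namenodes)
        for nn, address in namenodes.items():
            props[f"spark.hadoop.dfs.namenode.rpc-address.{ns}.{nn}"] = address
        props[f"spark.hadoop.dfs.client.failover.proxy.provider.{ns}"] = (
            FAILOVER_PROVIDER
        )
    return props


def render_properties(props):
    """Render the properties as key=value lines for a properties file."""
    return "\n".join(f"{key}={value}" for key, value in props.items())


if __name__ == "__main__":
    clusters = {
        "nsA": {"nn1": "a1.example.com:8020", "nn2": "a2.example.com:8020"},
        "nsB": {"nn1": "b1.example.com:8020", "nn2": "b2.example.com:8020"},
    }
    print(render_properties(cross_cluster_spark_properties(clusters)))
```

The rendered lines can be placed in a properties file passed to `spark-submit` with `--properties-file`, or supplied one at a time with `--conf`. Keeping the nameservice definitions in one dictionary avoids the per-nameservice keys drifting out of sync with `dfs.nameservices`.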

From data-flair.training

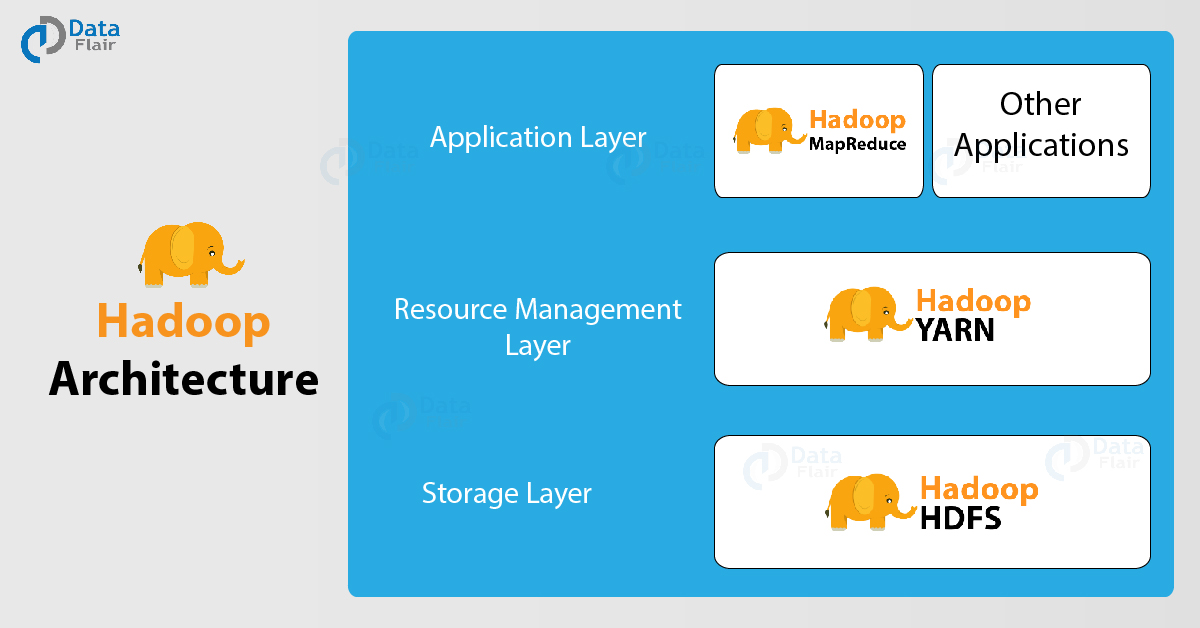

Hadoop Architecture in Detail HDFS, Yarn & MapReduce DataFlair

From aamargajbhiye.medium.com

Apache Spark and Inmemory Hadoop File System (IGFS) by Amar Gajbhiye

From hangmortimer.medium.com

62 Big data technology (part 2) Hadoop architecture, HDFS, YARN, Map

From docs.oracle.com

Oracle Table Access for Hadoop and Spark (OTA4H)

From www.nitendratech.com

Hadoop Yarn and Its Commands Technology and Trends

From www.researchgate.net

The Cluster Running Hadoop YARN And Spark With 10 Machine Workers

From blog.csdn.net

Spark Runtime Environment: YARN Mode (configuring Spark in YARN mode), CSDN Blog

From blog.csdn.net

Introduction to Big Data Frameworks Such as Hadoop and Spark, CSDN Blog

From www.researchgate.net

Sparksubmit command launching a Spark Application with YARN Download

From techvidvan.com

Hadoop Spark Compatibility Hadoop+Spark better together TechVidvan

From blog.csdn.net

The Relationship Between Hadoop, HDFS, MapReduce, Spark, Hive, and YARN, CSDN Blog

From www.waitingforcode.com

YARN or for Apache Spark? on articles

From medium.com

Apache Hadoop (HDFS YARN MapReduce) and Apache Hive Echosystem by M

From www.edureka.co

Apache Hadoop YARN Introduction to YARN Architecture Edureka

From www.researchgate.net

Operation architecture of spark in yarn cluster mode. Download

From blog.csdn.net

Hadoop 3.x Big Data Cluster Setup Series, Part 4: Installing Spark, CSDN Blog

From www.altexsoft.com

Apache Hadoop vs Spark Main Big Data Tools Explained

From www.cnblogs.com

An In-Depth Analysis of the Two Spark on YARN Modes, yarn-cluster and yarn-client, ^_TONY_^, 博客园 (cnblogs)

From www.cnblogs.com

Hadoop + Spark Big Data Analytics and Machine Learning Integrated Development in Practice, Elaphurus, 博客园 (cnblogs)

From sparkdatabox.com

Hadoop YARN Spark Databox

From github.com

GitHub CaptainIRS/hadoopyarnk8s A sandbox for running a Hadoop

From jelvix.com

Spark vs Hadoop What to Choose to Process Big Data

From blog.csdn.net

Spark Installation 2: hadoop changed on src filesystem (expected 1698823, CSDN Blog

From sparkbyexamples.com

Spark StepbyStep Setup on Hadoop Yarn Cluster Spark By {Examples}

From www.fblinux.com

Execution Flow of the Two Spark on YARN Modes, 西门飞冰's Blog

From blog.csdn.net

Integrating Spark with Hadoop 3.x (spark3.0.2binhadoop3.2 version), CSDN Blog

From www.linode.com

How to Run Spark on Top of a Hadoop YARN Cluster Linode Docs

From towardsdatascience.com

Big Data Analysis Spark and Hadoop by Pier Paolo Ippolito Towards

From stackoverflow.com

scala Spark Yarn Architecture Stack Overflow

From www.interviewbit.com

Apache Spark Architecture Detailed Explanation InterviewBit

From blog.csdn.net

[Original: Hadoop & Spark Hands-On Practice 4] Hadoop 2.7.3 YARN Principles and Hands-On Practice, CSDN Blog

From www.youtube.com

FREE Spark and Hadoop VM Access Apache Hadoop Part 4 DM

From github.com

GitHub lupodda/Sparkhadoopyarnmultinodedockercluster A docker