Torch Transformer Src_Key_Padding_Mask . So far i focused on. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. The architecture is based on the paper “attention is all you. User is able to modify the attributes as needed. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. For purely educational purposes, my goal is to implement basic transformer architecture from scratch.

from www.ichenhua.cn

The architecture is based on the paper “attention is all you. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. User is able to modify the attributes as needed. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. So far i focused on.

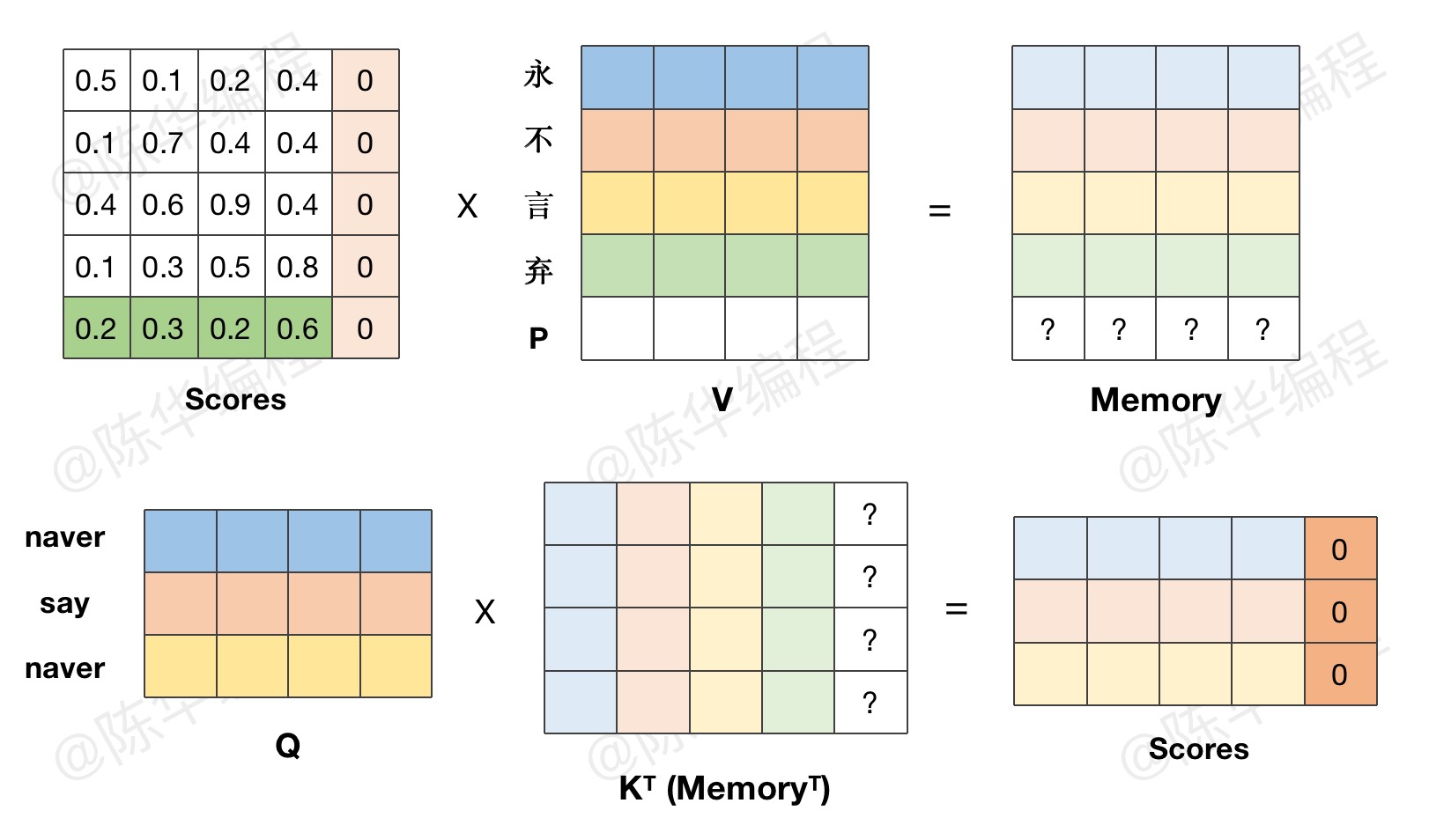

Transformer P8 Attention处理Key_Padding_Mask 陈华编程

Torch Transformer Src_Key_Padding_Mask The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. So far i focused on. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. User is able to modify the attributes as needed. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. The architecture is based on the paper “attention is all you. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,.

From www.zhihu.com

pytorch的key_padding_mask和参数attn_mask有什么区别? 知乎 Torch Transformer Src_Key_Padding_Mask For purely educational purposes, my goal is to implement basic transformer architecture from scratch. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. The architecture is based on the paper “attention is all you. User is able to modify the attributes as needed. The main difference is that ‘src_key_padding_mask’ looks at masks. Torch Transformer Src_Key_Padding_Mask.

From data-science-blog.com

Positional encoding, residual connections, padding masks covering the Torch Transformer Src_Key_Padding_Mask So far i focused on. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. User. Torch Transformer Src_Key_Padding_Mask.

From github.com

Transformer Encoder Layer with src_key_padding makes NaN · Issue 24816 Torch Transformer Src_Key_Padding_Mask The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. So far i focused on. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. The. Torch Transformer Src_Key_Padding_Mask.

From zhuanlan.zhihu.com

【Pytorch】Transformer中的mask 知乎 Torch Transformer Src_Key_Padding_Mask The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. User is able to modify the. Torch Transformer Src_Key_Padding_Mask.

From zhuanlan.zhihu.com

【Pytorch】Transformer中的mask 知乎 Torch Transformer Src_Key_Padding_Mask So for example, when you set a value in the mask tensor to ‘true’, you are essentially. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. User is able to modify the attributes as needed. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. I think, when using src_mask, we need. Torch Transformer Src_Key_Padding_Mask.

From github.com

Transformer Encoder Layer with src_key_padding makes NaN · Issue 24816 Torch Transformer Src_Key_Padding_Mask I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. The architecture is based on the paper “attention is all you. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. So far i focused on. User is able. Torch Transformer Src_Key_Padding_Mask.

From blog.csdn.net

torch.nn.Transformer解读与应用_nn.transformerencoderlayerCSDN博客 Torch Transformer Src_Key_Padding_Mask The architecture is based on the paper “attention is all you. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. So far i focused on. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. For purely educational purposes, my goal is. Torch Transformer Src_Key_Padding_Mask.

From paddlepedia.readthedocs.io

Transformer — PaddleEdu documentation Torch Transformer Src_Key_Padding_Mask I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. So for example, when you set a value in. Torch Transformer Src_Key_Padding_Mask.

From zhuanlan.zhihu.com

【Pytorch】Transformer中的mask 知乎 Torch Transformer Src_Key_Padding_Mask So far i focused on. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. User is able to modify the attributes as needed. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. So for example, when you set a value. Torch Transformer Src_Key_Padding_Mask.

From zhuanlan.zhihu.com

【Pytorch】Transformer中的mask 知乎 Torch Transformer Src_Key_Padding_Mask I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. The architecture is based on the paper “attention is. Torch Transformer Src_Key_Padding_Mask.

From www.ichenhua.cn

Transformer P8 Attention处理Key_Padding_Mask 陈华编程 Torch Transformer Src_Key_Padding_Mask So for example, when you set a value in the mask tensor to ‘true’, you are essentially. User is able to modify the attributes as needed. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. The architecture is based on the paper “attention is all you. I think, when using src_mask, we need to provide. Torch Transformer Src_Key_Padding_Mask.

From github.com

TransformerEncoder src_key_padding_mask does not work in eval() · Issue Torch Transformer Src_Key_Padding_Mask I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. User is able to modify the attributes as needed. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. The architecture is based on the paper “attention is all you. So far. Torch Transformer Src_Key_Padding_Mask.

From www.zhihu.com

nn.Transformer怎么使用? 知乎 Torch Transformer Src_Key_Padding_Mask The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. User is able to modify the attributes as needed. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. So far i focused on. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. I think,. Torch Transformer Src_Key_Padding_Mask.

From zhuanlan.zhihu.com

【Pytorch】Transformer中的mask 知乎 Torch Transformer Src_Key_Padding_Mask The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. So for example, when you set a value in. Torch Transformer Src_Key_Padding_Mask.

From discuss.pytorch.org

[Transformer] Difference between src_mask and src_key_padding_mask Torch Transformer Src_Key_Padding_Mask So far i focused on. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. User is able to modify the attributes as needed. So for example, when you set a value in. Torch Transformer Src_Key_Padding_Mask.

From zhuanlan.zhihu.com

【Pytorch】Transformer中的mask 知乎 Torch Transformer Src_Key_Padding_Mask The architecture is based on the paper “attention is all you. User is able to modify the attributes as needed. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. The main. Torch Transformer Src_Key_Padding_Mask.

From github.com

Padding mask · Issue 34 · yaohungt/MultimodalTransformer · GitHub Torch Transformer Src_Key_Padding_Mask For purely educational purposes, my goal is to implement basic transformer architecture from scratch. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. So far i focused on. The architecture is based on the paper “attention is all you. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens.. Torch Transformer Src_Key_Padding_Mask.

From github.com

Transformer Encoder Layer with src_key_padding makes NaN · Issue 24816 Torch Transformer Src_Key_Padding_Mask The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. User is able to modify the attributes as needed. For purely educational purposes, my goal is to implement basic transformer architecture from scratch.. Torch Transformer Src_Key_Padding_Mask.

From zhuanlan.zhihu.com

Pytorch一行代码便可以搭建整个transformer模型 知乎 Torch Transformer Src_Key_Padding_Mask So for example, when you set a value in the mask tensor to ‘true’, you are essentially. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. So far i focused on. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,.. Torch Transformer Src_Key_Padding_Mask.

From discuss.pytorch.org

Transformer What should I put in src_key_padding_mask ? PyTorch Forums Torch Transformer Src_Key_Padding_Mask The architecture is based on the paper “attention is all you. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. For purely educational purposes, my goal is to. Torch Transformer Src_Key_Padding_Mask.

From discuss.pytorch.org

Transformer What should I put in src_key_padding_mask ? PyTorch Forums Torch Transformer Src_Key_Padding_Mask The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. So far i focused on. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. I think, when using src_mask, we need to provide a matrix. Torch Transformer Src_Key_Padding_Mask.

From zhuanlan.zhihu.com

PyTorch的Transformer 知乎 Torch Transformer Src_Key_Padding_Mask So for example, when you set a value in the mask tensor to ‘true’, you are essentially. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. I think, when using src_mask, we need to provide a matrix of shape (s, s), where. Torch Transformer Src_Key_Padding_Mask.

From zhuanlan.zhihu.com

【Pytorch】Transformer中的mask 知乎 Torch Transformer Src_Key_Padding_Mask I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. So far i focused on. The architecture is based on the paper “attention is all you. The main difference is that ‘src_key_padding_mask’. Torch Transformer Src_Key_Padding_Mask.

From www.ichenhua.cn

Transformer P8 Attention处理Key_Padding_Mask 陈华编程 Torch Transformer Src_Key_Padding_Mask So far i focused on. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. The architecture is based on the paper “attention is all you. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens.. Torch Transformer Src_Key_Padding_Mask.

From zhuanlan.zhihu.com

极简翻译模型Demo,彻底理解Transformer 知乎 Torch Transformer Src_Key_Padding_Mask I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. User is able to modify the attributes as needed. So far i focused on. The architecture is based on the paper “attention is all you. The main difference is that ‘src_key_padding_mask’ looks at masks applied to. Torch Transformer Src_Key_Padding_Mask.

From github.com

torch.nn.MultiheadAttention key_padding_mask and is_causal breaks Torch Transformer Src_Key_Padding_Mask So for example, when you set a value in the mask tensor to ‘true’, you are essentially. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. For purely educational purposes, my goal. Torch Transformer Src_Key_Padding_Mask.

From leftasexercise.com

Mastering large language models Part X Transformer blocks Torch Transformer Src_Key_Padding_Mask The architecture is based on the paper “attention is all you. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. So far i focused on. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. The main difference. Torch Transformer Src_Key_Padding_Mask.

From github.com

nn.TransformerEncoder all nan values issues when src_key_padding_mask Torch Transformer Src_Key_Padding_Mask I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. The architecture is based on the paper “attention is all you. So far i focused on. User is able to modify the attributes as needed. For purely educational purposes, my goal is to implement basic transformer. Torch Transformer Src_Key_Padding_Mask.

From github.com

transformer results are not consistent for the case that src_key Torch Transformer Src_Key_Padding_Mask User is able to modify the attributes as needed. The architecture is based on the paper “attention is all you. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. So far i focused on. I think, when using src_mask,. Torch Transformer Src_Key_Padding_Mask.

From discuss.pytorch.org

Transformer What should I put in src_key_padding_mask ? PyTorch Forums Torch Transformer Src_Key_Padding_Mask User is able to modify the attributes as needed. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. So far i focused on. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. The main difference is that. Torch Transformer Src_Key_Padding_Mask.

From discuss.pytorch.org

Why does PyTorch's Transformer model implementation `torch.nn Torch Transformer Src_Key_Padding_Mask So far i focused on. User is able to modify the attributes as needed. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. The architecture is based on the paper “attention is. Torch Transformer Src_Key_Padding_Mask.

From github.com

TransformerEncoder truncates output when some token positions are Torch Transformer Src_Key_Padding_Mask User is able to modify the attributes as needed. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence. Torch Transformer Src_Key_Padding_Mask.

From www.zhihu.com

pytorch的key_padding_mask和参数attn_mask有什么区别? 知乎 Torch Transformer Src_Key_Padding_Mask For purely educational purposes, my goal is to implement basic transformer architecture from scratch. So for example, when you set a value in the mask tensor to ‘true’, you are essentially. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length, for example,. The main difference is that. Torch Transformer Src_Key_Padding_Mask.

From zhuanlan.zhihu.com

【Pytorch】Transformer中的mask 知乎 Torch Transformer Src_Key_Padding_Mask The architecture is based on the paper “attention is all you. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. I think, when using src_mask, we need to provide a matrix of shape (s, s), where s is our source sequence length,. Torch Transformer Src_Key_Padding_Mask.

From sungwookyoo.github.io

Transformer, Multihead Attetnion Pytorch Guide Focusing on Masking Torch Transformer Src_Key_Padding_Mask So for example, when you set a value in the mask tensor to ‘true’, you are essentially. For purely educational purposes, my goal is to implement basic transformer architecture from scratch. The main difference is that ‘src_key_padding_mask’ looks at masks applied to entire tokens. User is able to modify the attributes as needed. So far i focused on. The architecture. Torch Transformer Src_Key_Padding_Mask.