Dynamic Quantization Vs Static Quantization

Dynamic quantization and static quantization differ mainly in how activations are handled.

Dynamic quantization: weights are statically quantized and activations are dynamically quantized. It skips the calibration step and uses dynamically computed quantization parameters during inference.

Static quantization: both weights and activations are statically quantized. It reduces the numerical precision of model parameters before inference to optimize computation. It fuses activations into preceding layers where possible, and it requires calibration with a …

Unlike TensorFlow 2.3.0, which supports integer quantization using arbitrary bitwidth from 2 to …
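The difference between the two schemes can be sketched in a few lines of plain Python. This is a minimal illustration, not any framework's API: the function names (`qparams`, `calibrate`, `roundtrip_static`, `roundtrip_dynamic`), the unsigned 8-bit affine scheme, and the sample numbers are all assumptions chosen for the example.

```python
from typing import List, Sequence, Tuple

QMIN, QMAX = 0, 255  # assumed unsigned 8-bit target range


def qparams(lo: float, hi: float) -> Tuple[float, int]:
    """Return (scale, zero_point) mapping the real range [lo, hi] onto [QMIN, QMAX]."""
    lo, hi = min(lo, 0.0), max(hi, 0.0)  # keep 0.0 inside the representable range
    scale = (hi - lo) / (QMAX - QMIN) or 1.0  # fall back to 1.0 for an all-zero range
    zero_point = int(round(QMIN - lo / scale))
    return scale, max(QMIN, min(QMAX, zero_point))


def quantize(xs: Sequence[float], scale: float, zero_point: int) -> List[int]:
    """Map real values to integers, clipping anything outside [QMIN, QMAX]."""
    return [max(QMIN, min(QMAX, int(round(x / scale)) + zero_point)) for x in xs]


def dequantize(qs: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """Map integers back to approximate real values."""
    return [(q - zero_point) * scale for q in qs]


def calibrate(batches: Sequence[Sequence[float]]) -> Tuple[float, int]:
    """Static scheme: observe sample activations once and fix the parameters."""
    lo = min(min(b) for b in batches)
    hi = max(max(b) for b in batches)
    return qparams(lo, hi)


def roundtrip_static(xs: Sequence[float], params: Tuple[float, int]) -> List[float]:
    """Quantize with parameters fixed ahead of time by calibration."""
    scale, zero_point = params
    return dequantize(quantize(xs, scale, zero_point), scale, zero_point)


def roundtrip_dynamic(xs: Sequence[float]) -> List[float]:
    """Dynamic scheme: compute the parameters from the values seen at inference."""
    scale, zero_point = qparams(min(xs), max(xs))
    return dequantize(quantize(xs, scale, zero_point), scale, zero_point)


# Hypothetical data: calibration only ever saw activations in [-1.0, 1.0].
calibration_batches = [[-1.0, 0.5], [0.2, 1.0]]
static_params = calibrate(calibration_batches)

# At inference an activation of 4.0 shows up, outside the calibrated range.
activations = [0.1, 4.0]
static_out = roundtrip_static(activations, static_params)
dynamic_out = roundtrip_dynamic(activations)
```

In this sketch the static path clips the out-of-range activation to roughly the calibrated maximum, while the dynamic path recomputes the range per input and recovers it at the cost of extra work during inference.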

From www.slideserve.com

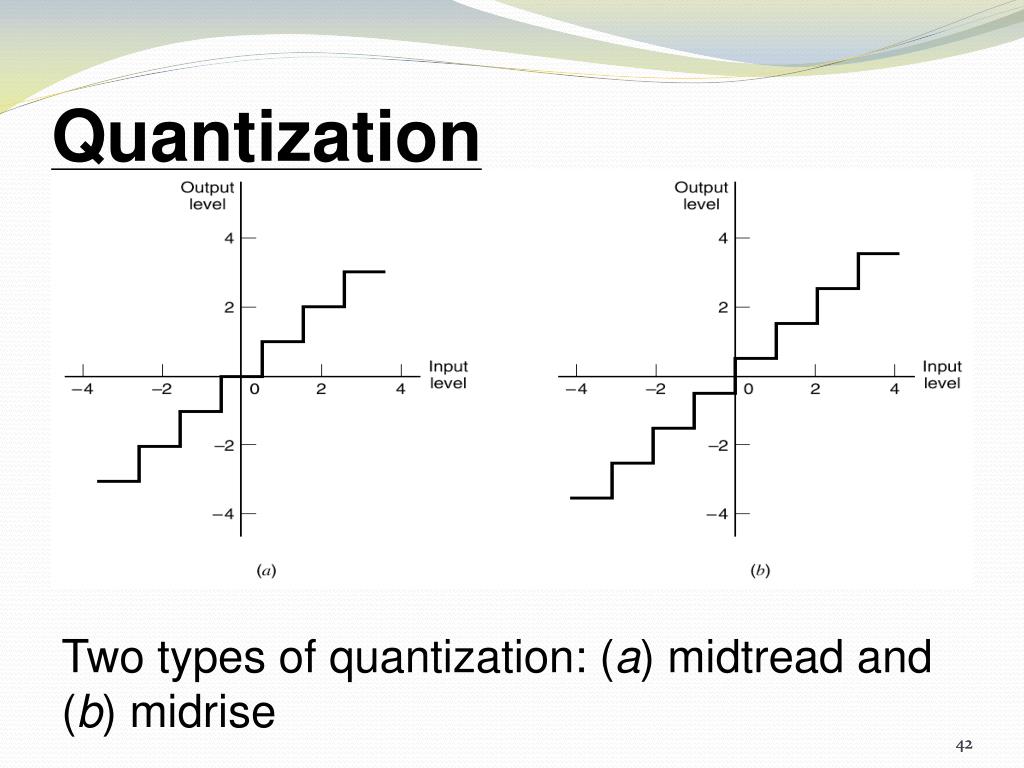

PPT PULSE MODULATION TECHNIQUES PowerPoint Presentation, free

From r4j4n.github.io

Quantization in PyTorch Optimizing Architectures for Enhanced

From discuss.pytorch.org

PyTorch Dynamic Quantization clarification quantization PyTorch Forums

From hillhouse4design.com

8bit quantization example

From furiosa-ai.github.io

Model Quantization — Furiosa SDK Documentation furiosadocs documentation

From www.slideshare.net

quantization

From jhss.github.io

A survey of Quantization Methods for Efficient Neural Network (summary)

From www.mdpi.com

Applied Sciences Free FullText ClippingBased Post Training 8Bit

From wiki-power.com

ADC Static Parameters Power's Wiki

From www.youtube.com

Quantization Part 2 Quantization Understanding YouTube

From intel.github.io

Quantization — Intel® Extension for Transformers 1.2 documentation

From www.vedereai.com

Practical Quantization in PyTorch Vedere AI

From gaussian37.github.io

Quantization in Deep Learning and Quantization Aware Training gaussian37

From crosspointe.net

What is the difference between static type checking and dynamic type

From www.mechical.com

Statics Vs Dynamics Definition, Types, Differences

From www.researchgate.net

Static vs dynamic structure functions Download Scientific Diagram

From myscale.com

Enhancing Efficiency Static vs Dynamic Quantization

From buxianchen.github.io

(P0) Pytorch Quantization Humanpia

From www.semanticscholar.org

Figure 2 from Differentiable Dynamic Quantization with Mixed Precision

From www.semanticscholar.org

[PDF] PostTraining Quantization for Vision Transformer Semantic Scholar

From onnxruntime.ai

Quantize ONNX models onnxruntime

From tukioka-clinic.com

🎉 Quantization process. compression. 20190114

From davidswiston.blogspot.com

Dave Swiston November 2014

From rogerspy.github.io

Model Compression: Network Quantization Rogerspy's Home

From modeldatabase.com

Introduction to Quantization cooked in 🤗 with 💗🧑🍳

From www.mdpi.com

Electronics Free FullText Improving Model Capacity of Quantized

From www.edge-ai-vision.com

Exploring AIMET’s PostTraining Quantization Methods Edge AI and

From www.researchgate.net

Gluonbaryon interaction with an exchanged Pomeron featuring the

From engineerstutor.com

Quantization in PCM with example

From www.semanticscholar.org

Figure 8 from Dynamic Quantization Range Control for AnaloginMemory

From www.youtube.com

Quantization Part 3 Quantization understanding with equations YouTube