Calibration Data Quantization

Quantization refers to techniques for performing computations and storing tensors at lower bit widths than floating-point precision. More technically, model quantization reduces the precision of the numerical representations a model uses. Post-training quantization (PTQ) is a technique to reduce the computational resources required for inference while still preserving the accuracy of the model. Calibration is the TensorRT term for passing data samples to the quantizer and deciding the best amax (maximum absolute value) for activations. One of the sources quoted here is a paper that describes itself as the first extensive empirical study of the effect of calibration data on LLM performance.
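The calibration step described above can be sketched in a few lines of NumPy. This is a minimal illustration, assuming symmetric INT8 quantization with simple max calibration; it is not TensorRT's API, and the function names (`calibrate_amax`, `quantize_int8`, `dequantize`) and the random calibration batches are hypothetical stand-ins. Other calibration strategies exist and may choose a different amax.

```python
import numpy as np

def calibrate_amax(batches):
    """Max calibration (assumed strategy): largest |activation| seen in the samples."""
    amax = 0.0
    for batch in batches:
        amax = max(amax, float(np.max(np.abs(batch))))
    if amax == 0.0:
        raise ValueError("calibration data is all zeros; cannot derive a scale")
    return amax

def quantize_int8(x, amax):
    """Map floats in [-amax, amax] onto the symmetric INT8 range [-127, 127]."""
    scale = amax / 127.0
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Recover approximate float values from the INT8 codes."""
    return q.astype(np.float32) * scale

# Hypothetical calibration set: 8 batches of fake activations.
rng = np.random.default_rng(0)
calibration_batches = [
    rng.standard_normal((32, 64)).astype(np.float32) for _ in range(8)
]

amax = calibrate_amax(calibration_batches)
x = calibration_batches[0]
q, scale = quantize_int8(x, amax)
x_hat = dequantize(q, scale)
max_err = float(np.max(np.abs(x - x_hat)))
print(f"amax={amax:.4f} scale={scale:.6f} max round-trip error={max_err:.6f}")
```

Because every value in `x` lies within the calibrated range, nothing is clipped and the round-trip error stays within about half a quantization step (`scale / 2`). Activations larger than anything in the calibration set would be clipped, which is one reason the choice of calibration data matters.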

From www.slideserve.com

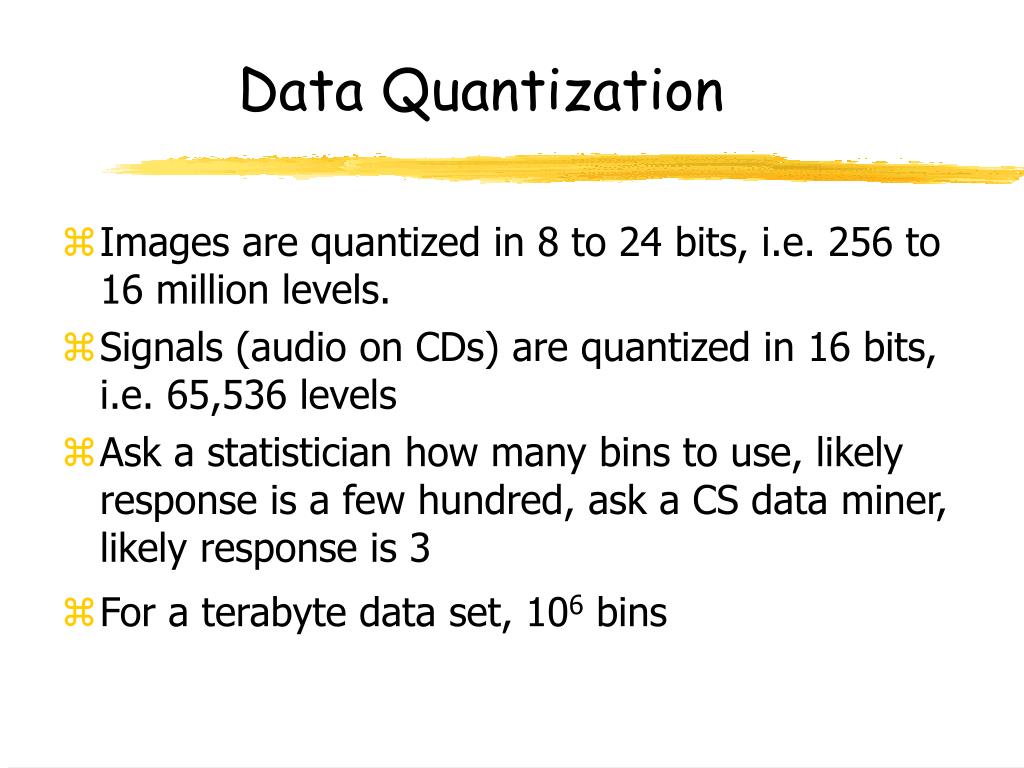

PPT Data Compression by Quantization PowerPoint Presentation, free

From www.researchgate.net

3 Two quantizations of size N = 200 of a centered Gaussian vector with

From www.researchgate.net

Soft-to-hard quantization rule illustration. Download Scientific Diagram

From www.semanticscholar.org

Figure 1 from A Calibration-Free Fractional-N Analog PLL With

From www.youtube.com

PD-Quant Post-Training Quantization based on Prediction Difference

From www.youtube.com

Calibration Curve Tutorial Lesson 1 Plotting Calibration Data YouTube

From www.researchgate.net

(PDF) Is In-Domain Data Really Needed? A Pilot Study on Cross-Domain

From www.researchgate.net

(PDF) SelectQ Calibration Data Selection for Post-Training Quantization

From coremltools.readme.io

Quantization Overview

From www.researchgate.net

Typical calibration data plot for the NDMT system compliance

From www.researchgate.net

Calibration data compared with previous data Download Scientific Diagram

From www.researchgate.net

Calibration spectrum highlighting the different event samples used in

From www.researchgate.net

Quantization schemes comparison. Download Scientific Diagram

From www.researchgate.net

4 Calibration results with observed data from Tampa; (a) Calibration

From www.tidyverse.org

Model Calibration

From www.researchgate.net

Polynomial fit of the calibration data I (B) for pixel 700 × 1000 in

From www.researchgate.net

Fitting map of measured data and model calibration data Download

From www.researchgate.net

Calibration data with standard deviation (dots) and fitted curve

From www.researchgate.net

Calibration data for the CDC N1 RT-qPCR assay. Left Assay dynamic

From www.researchgate.net

Calibration of a digital ammeter using the PQCG and identification of

From www.researchgate.net

Calibration data for the conventional time domain reflectome Download

From www.researchgate.net

The selection of the essential elements of the quantization set Q 500 n

From www.youtube.com

Quantization Part 2 Quantization Understanding YouTube

From www.researchgate.net

8 The calibration tests of accuracy of postprocessing algorithm a The

From www.researchgate.net

Regression coefficients of PLS model for calibration data using full

From www.researchgate.net

(PDF) Vector Quantization Based Data Selection for Hand-Eye Calibration.

From www.researchgate.net

Calibration data for coil response and null-field cancellation. The

From www.researchgate.net

PTQ Post-training quantization benchmark on the dataset with

From furiosa-ai.github.io

Model Quantization — Furiosa SDK Documentation 0.10.1 documentation

From www.researchgate.net

Rhodamine B calibration data for samples from 0.1 to 10 µM; measured in

From www.researchgate.net

The quantization result with and calibration method. The

From www.researchgate.net

Calibration data set regression and individual data points. Download

From www.researchgate.net

Experiment on sequential quantization calibration. Download

From www.youtube.com

LECT29 Quantization Process & Quantization noise YouTube