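Torch Distributed Gather. The distributed package included in PyTorch (i.e., torch.distributed) enables researchers and practitioners to easily parallelize their computations across processes and clusters of machines. The PyTorch distributed communication layer (c10d) offers both collective communication APIs (e.g., all_reduce and all_gather) and P2P communication APIs (e.g., send and isend).

The gather operation in torch.distributed collects a tensor from every process in the group (typically one process per GPU) onto a single process, known as the root or destination rank. The documented signature is gather(tensor, gather_list=None, dst=0, group=None, async_op=False), described as "gathers a list of tensors in a single process". The root rank is specified through the dst argument when calling the function. The tensors are not concatenated for you: on the destination rank they are written into gather_list, which must hold one correctly sized tensor per rank, while every other rank leaves gather_list as None. If a single tensor is needed, concatenate the list yourself, for example with torch.cat.

Several of the snippets collected here come from forum threads. One asks: "Hi, I am trying to use the torch.distributed.all_gather function and I'm confused with the parameter 'tensor_list'." Another begins "Below is how I used torch.distributed.gather()". One answer argues that, because it was not directly possible to gather using the built-in methods, a custom function is needed; a related snippet starts with import torch.distributed as dist and defines def gather(tensor, tensor_list=None, root=0, group=None), a wrapper that sends the tensor to the root process, which stores it in tensor_list. A further answer suggests all_gather_object from torch.distributed, which allows you to gather any picklable Python object rather than only tensors.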
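As a minimal sketch of the gather call described above, the helper below allocates receive buffers only on the destination rank and passes None elsewhere. The names gather_to_dst and demo_single_process are hypothetical, and the demo assumes a CPU-only, one-process group on the gloo backend purely so it can run without a launcher; in real use each rank would be started by a tool such as torchrun.

```python
import os
import tempfile

import torch
import torch.distributed as dist


def gather_to_dst(tensor, dst=0):
    """Gather `tensor` from every rank onto rank `dst` (hypothetical helper).

    Returns the list of gathered tensors on `dst` and None on other ranks.
    Assumes every rank passes a tensor of the same shape and dtype.
    """
    world_size = dist.get_world_size()
    if dist.get_rank() == dst:
        # Only the destination rank supplies one receive buffer per rank.
        gather_list = [torch.empty_like(tensor) for _ in range(world_size)]
        dist.gather(tensor, gather_list=gather_list, dst=dst)
        return gather_list
    # Non-destination ranks send their tensor and receive nothing.
    dist.gather(tensor, gather_list=None, dst=dst)
    return None


def demo_single_process():
    """Run the helper in a one-process gloo group (assumption: CPU only)."""
    init_file = os.path.join(tempfile.mkdtemp(), "dist_init")
    dist.init_process_group(
        backend="gloo",
        init_method=f"file://{init_file}",
        rank=0,
        world_size=1,
    )
    try:
        gathered = gather_to_dst(torch.tensor([1.0, 2.0, 3.0]))
        # gather returns a list; concatenate explicitly if one tensor is wanted.
        return torch.cat(gathered)
    finally:
        dist.destroy_process_group()
```

With more than one rank, the list returned on the destination rank is ordered by rank, so torch.cat(gathered) places rank 0's data first. If every rank needs the result, all_gather takes the same kind of tensor list but requires it on all ranks.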

From blog.csdn.net

The torch.gather() function in PyTorch (CSDN blog)

From machinelearningknowledge.ai

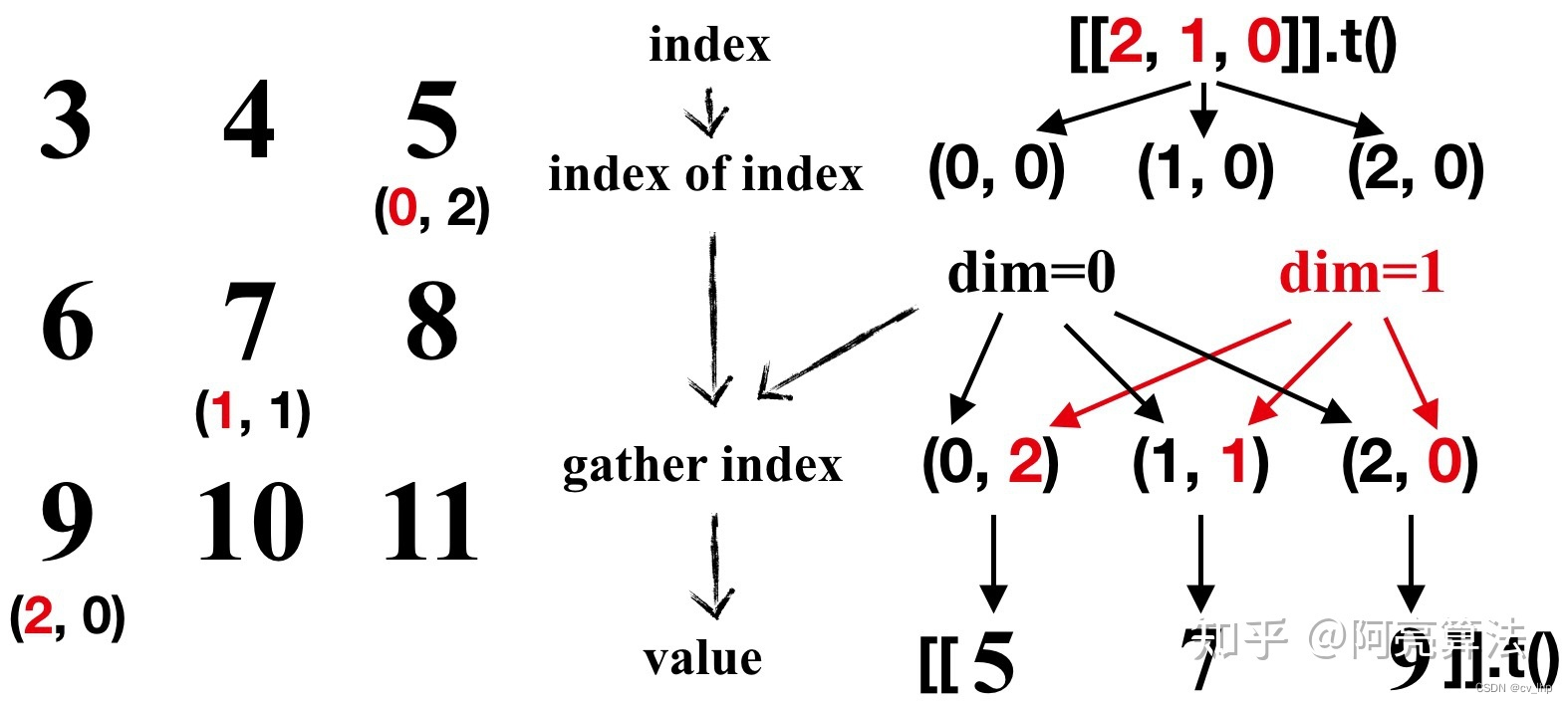

[Diagram] How to use torch.gather() Function in PyTorch with Examples

From github.com

torch.distributed.gather() the type of gather_list parameter must be

From github.com

torch/distributed/distributed_c10d.py", line 1870, in all_gather work

From zhuanlan.zhihu.com

An illustrated guide to the torch.gather function in PyTorch (Zhihu)

From zhuanlan.zhihu.com

PyTorch distributed communication primitives (with source code) (Zhihu)

From blog.csdn.net

torch.distributed (CSDN blog)

From blog.csdn.net

[PyTorch] Torch.gather() usage explained in detail with illustrations (CSDN blog)

From blog.csdn.net

PyTorch DDP distributed data-merging communication: torch.distributed.all_gather(), reducing metric data in DDP (CSDN blog)

From github.com

torch.distributed._all_gather_base will be deprecated · Issue 19091

From github.com

[BUG] AttributeError module 'torch.distributed' has no attribute

From blog.csdn.net

torch.distributed multi-card / multi-GPU / distributed DPP (Part 1): torch.distributed.launch & all

From github.com

torch.distributed.all_gather function stuck · Issue 10680 · openmmlab

From blog.csdn.net

A three-dimensional example of torch.gather (torch.gether 3D) (CSDN blog)

From codeantenna.com

PyTorch DDP distributed data-merging communication: torch.distributed.all_gather() (CodeAntenna)

From github.com

How to use torch.distributed.gather? · Issue 14536 · pytorch/pytorch

From www.ppmy.cn

PyTorch Basics (16): the torch.gather() method

From medium.com

Example on torch.distributed.gather by Laksheen Mendis Medium

From machinelearningknowledge.ai

[Diagram] How to use torch.gather() Function in PyTorch with Examples

From github.com

GitHub neonbjb/torchdistributedbench Bench test torch.distributed

From zhuanlan.zhihu.com

Two pictures to help you understand torch.gather (Zhihu)

From blog.csdn.net

The torch.gather() function in PyTorch (CSDN blog)

From blog.csdn.net

An analysis of torch.gather() usage (CSDN blog)

From blog.csdn.net

Parallelism with torch DDP mode (CSDN blog)

From blog.csdn.net

(Solved) Installing apex, error: AttributeError module 'torch.distributed' has no

From github.com

torch.distributed.init_process_group setting variables · Issue 13

From github.com

Add alltoall collective communication support to torch.distributed

From morioh.com

Introducing PyTorch Fully Sharded Data Parallel (FSDP) API

From zhuanlan.zhihu.com

Getting started with Torch DDP (Zhihu)

From machinelearningknowledge.ai

[Diagram] How to use torch.gather() Function in PyTorch with Examples

From blog.csdn.net

A simple understanding and use of the torch.gather function (th.gather) (CSDN blog)

From blog.csdn.net

torch multi-process training (detailed example) (CSDN blog)

From github.com

miniconda3/envs/proj1/lib/python3.9/sitepackages/torch/distributed

From github.com

Does tensors got from torch.distributed.all_gather in order? · Issue

From aws.amazon.com

Distributed training with Amazon EKS and Torch Distributed Elastic

From www.ppmy.cn

PyTorch Basics (16): the torch.gather() method