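What Is A Periodic Markov Chain

A Markov chain is a mathematical system that experiences transitions from one state to another according to certain probabilistic rules. It describes a system whose state changes over time: the changes are not completely predictable, but rather are governed by probability distributions. In the discrete-time, discrete-state, time-homogeneous setting, we say that $(X_i)_{i=0}^{\infty}$ is a Markov chain on a state space $I$ with some initial distribution.

Imagine the following Markov chain, with transition matrix

$$P = \begin{bmatrix} 0 & 0.5 & 0.5 \\ 1 & 0 & 0 \\ 1 & 0 & 0 \end{bmatrix}.$$

From state 1 we move to state 2 or state 3 with probability 0.5 each, and from either of those we move back to state 1 with probability 1, so we always get back to state 1 in two time periods. State 1 can therefore be revisited only at even times: it has period 2, and the chain is periodic.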
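The "back to state 1 in two time periods" claim can be checked numerically. The sketch below assumes the example matrix is read row by row as [0 0.5 0.5; 1 0 0; 1 0 0], and uses a hypothetical helper, `period`, that takes the greatest common divisor of all step counts n (up to a finite horizon) at which a return to the starting state has positive probability. The finite horizon is a heuristic that is adequate for a small chain like this one, not a general proof.

```python
from math import gcd

# Transition matrix of the example, read row by row (assumed layout):
# state 1 moves to state 2 or 3 with probability 0.5 each; both return to 1.
P = [
    [0.0, 0.5, 0.5],
    [1.0, 0.0, 0.0],
    [1.0, 0.0, 0.0],
]


def matmul(A, B):
    """Multiply two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def period(P, state, max_steps=50):
    """gcd of all n <= max_steps with (P^n)[state][state] > 0.

    Hypothetical helper for illustration. Returns 0 if the state is
    never revisited within max_steps.
    """
    size = len(P)
    Pn = [[float(i == j) for j in range(size)] for i in range(size)]  # P^0
    d = 0
    for n in range(1, max_steps + 1):
        Pn = matmul(Pn, P)  # Pn now holds P^n
        if Pn[state][state] > 1e-12:
            d = gcd(d, n)
    return d


# Index 0 corresponds to "state 1" in the text.
print([period(P, s) for s in range(3)])
```

Under these assumptions every state has period 2. Adding a self-loop, for example the two-state matrix [0.5 0.5; 1 0], makes a return possible after one step, and the same helper reports period 1 (an aperiodic chain).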

From www.slideserve.com

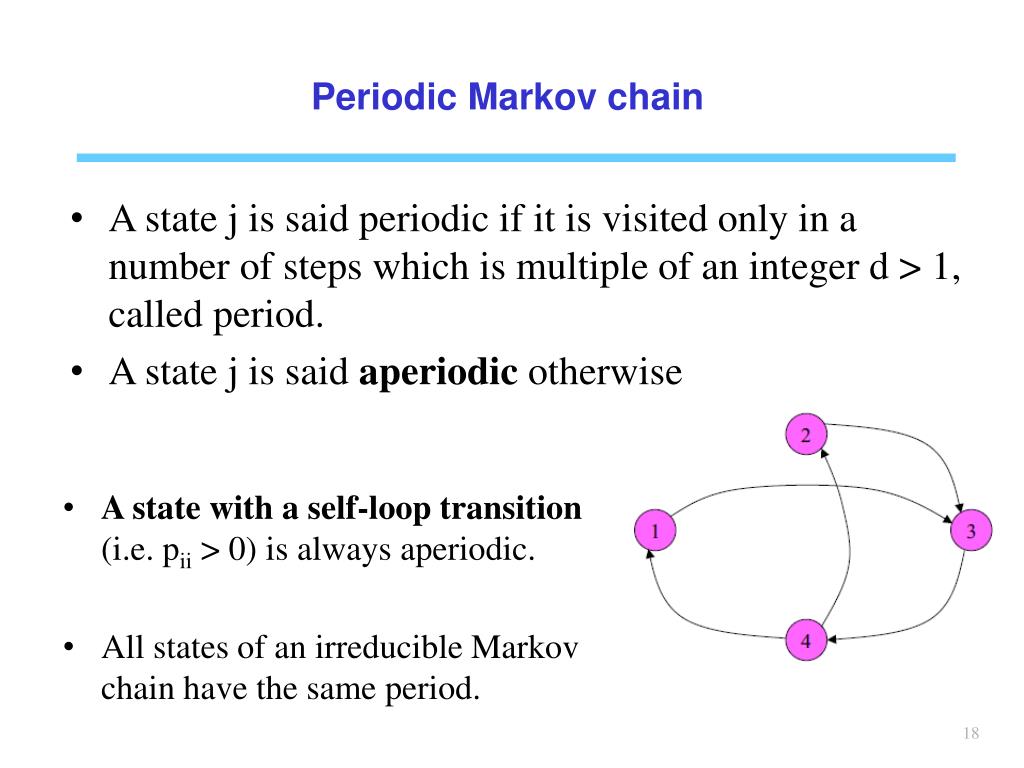

PPT Chapter 4 Discrete time Markov Chain PowerPoint Presentation

From www.youtube.com

(ML 18.5) Examples of Markov chains with various properties (part 2)

From gregorygundersen.com

A Romantic View of Markov Chains

From www.slideserve.com

PPT Markov Chains Lecture 5 PowerPoint Presentation, free download

From www.slideserve.com

PPT Part1 Markov Models for Pattern Recognition Introduction

From www.slideserve.com

PPT Markov Chains Lecture 5 PowerPoint Presentation, free download

From www.chegg.com

Solved (a) (10 marks) be a Markov chain with state space

From www.youtube.com

Markov Chains Recurrence, Irreducibility, Classes Part 2 YouTube

From gregorygundersen.com

A Romantic View of Markov Chains

From www.geeksforgeeks.org

Finding the probability of a state at a given time in a Markov chain

From www.slideserve.com

PPT Chapter 4 Discrete time Markov Chain PowerPoint Presentation

From www.chegg.com

5 Markov Chain Terminology In this question, we will

From medium.com

Markov Chain & Stationary Distribution Kim Hyungjun Medium

From math.stackexchange.com

number theory Periodic Markov chain contains all sufficient large

From brilliant.org

Markov Chains Stationary Distributions Practice Problems Online

From www.researchgate.net

Periodic Markov chain with eight states and four classes. Download

From www.slideserve.com

PPT Markov Chains Lecture 5 PowerPoint Presentation, free download

From stats.stackexchange.com

How can i identify wether a Markov Chain is irreducible? Cross Validated

From gregorygundersen.com

Ergodic Markov Chains

From stats.stackexchange.com

stochastic processes Intuitive explanation for periodicity in Markov

From www.youtube.com

L25.6 Periodic States YouTube

From ktr0921.github.io

Markov Chain Kel’Logg

From www.globaltechcouncil.org

Overview of Markov Chains Global Tech Council

From math.stackexchange.com

probability Can two nodes in a Markov chain have transitions that don

From www.slideserve.com

PPT Bayesian Methods with Monte Carlo Markov Chains II PowerPoint

From medium.com

Markov Chain & Stationary Distribution Kim Hyungjun Medium

From www.youtube.com

[CS 70] Markov Chains Finding Stationary Distributions YouTube

From www.slideserve.com

PPT Markov Models PowerPoint Presentation, free download ID2389554

From www.slideserve.com

PPT Markov Chains Lecture 5 PowerPoint Presentation, free download

From gregorygundersen.com

A Romantic View of Markov Chains

From www.chegg.com

Solved 16. Which of the following Markov chains with

From www.slideserve.com

PPT Markov Chain Part 1 PowerPoint Presentation, free download ID

From dvdbrwn.medium.com

Markov Chains in Data Science When to Use, When Not to, and Best

From www.youtube.com

Transient, recurrent states, and irreducible, closed sets in the Markov

From www.slideserve.com

PPT Markov Chains Lecture 5 PowerPoint Presentation, free download

From www.shiksha.com

Markov Chain Types, Properties and Applications Shiksha Online