``airflow.providers.amazon.aws.hooks.s3``

This module belongs to the Amazon provider package for Apache Airflow. All classes for this provider package are in the ``airflow.providers.amazon`` Python package. I create the S3 hook from ``airflow.providers.amazon.aws.hooks.s3``. Additional arguments (such as ``aws_conn_id``) may be specified and are passed down to the underlying ``AwsBaseHook``. If no bucket name has been passed to a hook method, the hook uses a bucket name taken from the connection instead.
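Below is a minimal sketch of the two behaviors just described, built on stated assumptions. ``AwsBaseHookSketch``, ``S3HookSketch``, ``provide_bucket_name_sketch`` and the ``CONNECTIONS`` dict are hypothetical stand-ins written for illustration, not the provider's source code. They model how keyword arguments such as ``aws_conn_id`` flow from the S3 hook down to its base hook, and how a decorator can fill in ``bucket_name`` from the connection when the caller omits it. The real module has a decorator called ``provide_bucket_name`` for this job; its exact lookup differs by provider version.

```python
import functools
import inspect
from typing import Any, Callable, Dict, Optional

# Hypothetical connection store standing in for Airflow connections:
# conn_id -> default bucket configured on that connection (None = no default).
CONNECTIONS: Dict[str, Dict[str, Optional[str]]] = {
    "aws_default": {"bucket_name": None},
    "my_s3": {"bucket_name": "my-default-bucket"},
}


class AwsBaseHookSketch:
    """Simplified stand-in for AwsBaseHook: it only stores what it is given."""

    def __init__(self, aws_conn_id: str = "aws_default", client_type: Optional[str] = None,
                 region_name: Optional[str] = None) -> None:
        self.aws_conn_id = aws_conn_id
        self.client_type = client_type
        self.region_name = region_name


def provide_bucket_name_sketch(func: Callable[..., Any]) -> Callable[..., Any]:
    """Fill in bucket_name from the hook's connection when the caller omits it (or passes None)."""
    signature = inspect.signature(func)

    @functools.wraps(func)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        bound = signature.bind(*args, **kwargs)
        if bound.arguments.get("bucket_name") is None:
            hook = bound.arguments["self"]
            bound.arguments["bucket_name"] = CONNECTIONS.get(hook.aws_conn_id, {}).get("bucket_name")
        return func(*bound.args, **bound.kwargs)

    return wrapper


class S3HookSketch(AwsBaseHookSketch):
    """Simplified S3 hook: fixes client_type to 's3' and passes other kwargs to the base hook."""

    def __init__(self, aws_conn_id: str = "aws_default", **kwargs: Any) -> None:
        kwargs["client_type"] = "s3"       # the S3 hook always asks for an S3 client
        kwargs["aws_conn_id"] = aws_conn_id
        super().__init__(**kwargs)         # everything else is passed down unchanged

    @provide_bucket_name_sketch
    def object_url(self, key: str, bucket_name: Optional[str] = None) -> str:
        """Return the s3:// URL for key in bucket_name."""
        if bucket_name is None:
            raise ValueError("no bucket_name passed and none configured on the connection")
        return f"s3://{bucket_name}/{key}"
```

With the real hook, a call such as ``S3Hook(aws_conn_id="my_s3").read_key("data/a.csv")`` resolves the bucket in the same spirit; check the ``S3Hook`` documentation for your provider version to see which connection field supplies the default bucket.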


Follow the steps below to get started with the Airflow S3 hook:

1. Configure the Airflow S3 hook and its connection parameters.
2. Use the Airflow S3 hook to implement a DAG.
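The second step can be sketched as follows, assuming Airflow 2.4 or later with the TaskFlow API (``@dag``/``@task``). It uses the real ``S3Hook.load_string`` method. The connection id ``aws_default``, the DAG id ``s3_upload_example``, the key ``reports/example.csv`` and the helper ``upload_report`` are illustrative assumptions. Airflow is imported inside ``build_dag()`` so the upload logic can be exercised without an Airflow installation.

```python
import csv
import io
from typing import Iterable, Optional, Protocol, Sequence


class S3HookLike(Protocol):
    """The one S3Hook method this task relies on (S3Hook.load_string)."""

    def load_string(self, string_data: str, key: str, bucket_name: Optional[str] = None,
                    replace: bool = False) -> None: ...


def upload_report(hook: S3HookLike, rows: Iterable[Sequence[object]], key: str,
                  bucket_name: Optional[str] = None) -> str:
    """Serialize rows as CSV and upload them under key; return the key.

    bucket_name=None lets the real S3Hook fall back to the bucket configured on its connection.
    """
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(rows)
    hook.load_string(buffer.getvalue(), key=key, bucket_name=bucket_name, replace=True)
    return key


def build_dag():
    """Build the example DAG; Airflow is imported here so upload_report is testable without it."""
    import pendulum
    from airflow.decorators import dag, task
    from airflow.providers.amazon.aws.hooks.s3 import S3Hook

    @dag(schedule=None, start_date=pendulum.datetime(2024, 1, 1, tz="UTC"), catchup=False)
    def s3_upload_example():
        @task
        def upload() -> str:
            hook = S3Hook(aws_conn_id="aws_default")  # assumed connection id
            rows = [("id", "value"), (1, "a"), (2, "b")]
            return upload_report(hook, rows, key="reports/example.csv")  # hypothetical key

        upload()

    return s3_upload_example()
```

To have the scheduler pick the DAG up, call ``build_dag()`` at module level in a file inside the DAGs folder, for example ``example_dag = build_dag()``. Keeping the upload logic in a plain function is a design choice: it can be unit-tested with a fake hook instead of a live AWS connection.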

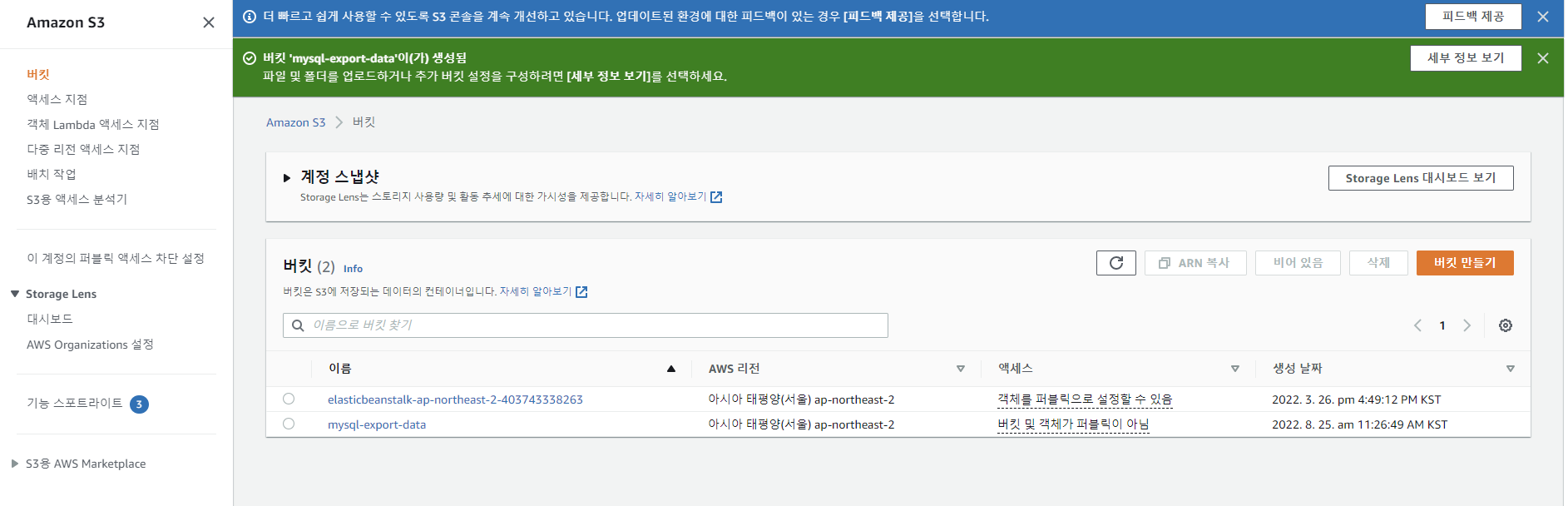

Building an Airflow pipeline: uploading files to AWS S3 (image source: velog.io)

Transform an Amazon S3 object

To transform the data from one Amazon S3 object and save it to another object, you can use …
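The operator name is cut off above. One Amazon provider operator that matches the description is ``S3FileTransformOperator`` (in ``airflow.providers.amazon.aws.operators.s3``); treat it as an assumption that this is the operator meant. It downloads the source object to a local file, runs an executable ``transform_script`` with the local source path and the local destination path as its first two arguments, and uploads the destination file to ``dest_s3_key``. Below is a sketch of such a script. The filtering rule (keep rows whose ``status`` column is ``active``) and the function name ``transform`` are hypothetical.

```python
#!/usr/bin/env python3
"""Hypothetical transform script: keep only CSV rows whose "status" column is "active"."""
import csv
import sys


def transform(source_path: str, dest_path: str) -> int:
    """Copy the header and every row with status == "active" to dest_path; return rows kept."""
    kept = 0
    with open(source_path, newline="") as src, open(dest_path, "w", newline="") as dst:
        reader = csv.DictReader(src)
        if reader.fieldnames is None:  # empty source object: leave an empty destination file
            return kept
        writer = csv.DictWriter(dst, fieldnames=reader.fieldnames, lineterminator="\n")
        writer.writeheader()
        for row in reader:
            if row.get("status") == "active":
                writer.writerow(row)
                kept += 1
    return kept


if __name__ == "__main__":
    # The operator passes the local source file first and the destination file second.
    if len(sys.argv) < 3:
        sys.exit("usage: transform.py SOURCE_PATH DEST_PATH")
    transform(sys.argv[1], sys.argv[2])
```

In a DAG this would be wired up roughly as ``S3FileTransformOperator(task_id="filter_active_rows", source_s3_key="s3://source-bucket/in.csv", dest_s3_key="s3://dest-bucket/out.csv", transform_script="/path/to/transform.py", replace=True)``, where the bucket names and the script path are placeholders. The script file must be executable, because the operator runs it as a subprocess.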
