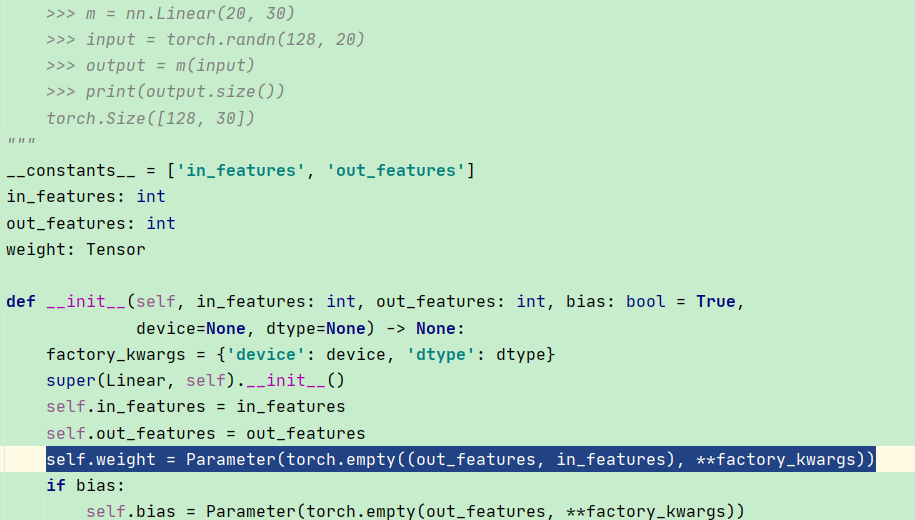

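Torch nn.Linear Weight Initialization

`class torch.nn.Linear(in_features, out_features, bias=True, device=None, dtype=None)` applies an affine linear transformation to the incoming data, `y = xA^T + b`, where `A` is the layer's `weight` and `b` its `bias`.

Layers are initialized as soon as they are created, so a freshly constructed layer such as `Linear`, `Conv2d`, or `RNN` already holds random weights. Most layers use a Kaiming-uniform scheme by default; convolution layers, for example, are initialized the same way as `Linear`. RNN layers are an exception and draw their parameters from a plain uniform distribution scaled by `1/sqrt(hidden_size)`.

To initialize the weights of a single layer, use a function from `torch.nn.init`. To apply the same initialization to all linear layers in a model, irrespective of their activation function, define a method such as `init_weights(m)`:

```python
import torch.nn as nn

# defining a method for initialization of linear weights
# the initialization will be applied to all linear layers
# irrespective of their activation function
def init_weights(m):
    if isinstance(m, nn.Linear):
        ...  # call a torch.nn.init function on m.weight (and m.bias) here
```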
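
A short sketch of the `y = xA^T + b` formula in action. It assumes a layer with 4 inputs and 3 outputs and a batch of 2 samples (arbitrary sizes), and checks that calling the layer matches the matrix product written out by hand. Note that `weight` is stored with shape `(out_features, in_features)`, which is why it is transposed in the manual version.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # fixed seed so the random weights are reproducible

layer = nn.Linear(in_features=4, out_features=3)  # bias=True by default
x = torch.randn(2, 4)  # a batch of 2 samples with 4 features each

# weight is stored as (out_features, in_features), bias as (out_features,)
print(layer.weight.shape)  # torch.Size([3, 4])
print(layer.bias.shape)    # torch.Size([3])

# The forward pass is the affine map y = x A^T + b
y_layer = layer(x)
y_manual = x @ layer.weight.T + layer.bias
print(torch.allclose(y_layer, y_manual, atol=1e-6))  # True
```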

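
The default `Linear` initialization can be reproduced explicitly. This sketch assumes the `reset_parameters` behaviour of recent PyTorch releases: the weight is filled by `nn.init.kaiming_uniform_` with `a=math.sqrt(5)`, and the bias is drawn from `U(-1/sqrt(fan_in), 1/sqrt(fan_in))`. With that value of `a`, the Kaiming gain is `sqrt(2 / (1 + 5)) = 1/sqrt(3)`, so the weight bound `gain * sqrt(3 / fan_in)` also simplifies to `1/sqrt(fan_in)`. Re-running those calls from the same seed should then give identical tensors.

```python
import math
import torch
import torch.nn as nn

in_features, out_features = 8, 5

# 1) Implicit: let nn.Linear initialize itself in its constructor.
torch.manual_seed(42)
layer = nn.Linear(in_features, out_features)

# 2) Explicit: repeat the (assumed) default steps by hand from the same seed.
torch.manual_seed(42)
weight = torch.empty(out_features, in_features)  # torch.empty draws no random numbers
nn.init.kaiming_uniform_(weight, a=math.sqrt(5))
bias = torch.empty(out_features)
bound = 1 / math.sqrt(in_features)  # fan_in of a Linear weight is in_features
nn.init.uniform_(bias, -bound, bound)

print(torch.equal(layer.weight, weight))  # True (expected on recent PyTorch releases)
print(torch.equal(layer.bias, bias))      # True (expected on recent PyTorch releases)
```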

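
Convolution layers follow the same Kaiming-uniform recipe; only the fan-in changes. For a `Conv2d` weight of shape `(out_channels, in_channels, kH, kW)` with the default `groups=1`, the fan-in is `in_channels * kH * kW`. The sketch below (arbitrary sizes, again assuming the `reset_parameters` logic of recent PyTorch releases) rebuilds the default weight and bias from the same seed.

```python
import math
import torch
import torch.nn as nn

in_channels, out_channels, kernel_size = 3, 16, 5

torch.manual_seed(0)
conv = nn.Conv2d(in_channels, out_channels, kernel_size)  # groups=1, bias=True

# Conv weights are stored as (out_channels, in_channels, kH, kW)
print(conv.weight.shape)  # torch.Size([16, 3, 5, 5])

# Each output unit sees in_channels * kH * kW input values
fan_in = in_channels * kernel_size * kernel_size  # 3 * 5 * 5 = 75

# Rebuild the default initialization by hand from the same seed
torch.manual_seed(0)
weight = torch.empty(out_channels, in_channels, kernel_size, kernel_size)
nn.init.kaiming_uniform_(weight, a=math.sqrt(5))
bias = torch.empty(out_channels)
bound = 1 / math.sqrt(fan_in)
nn.init.uniform_(bias, -bound, bound)

print(torch.equal(conv.weight, weight), torch.equal(conv.bias, bias))  # True True
```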

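
RNN layers are the exception among the example layers: rather than Kaiming uniform, recent PyTorch releases draw every RNN parameter (input-hidden and hidden-hidden weights and both biases) from `U(-1/sqrt(hidden_size), 1/sqrt(hidden_size))`. The sketch below checks this for a single-layer `nn.RNN` by reproducing the draws from the same random seed; the sizes are arbitrary.

```python
import math
import torch
import torch.nn as nn

input_size, hidden_size = 10, 20

# Default (implicit) initialization performed by the constructor
torch.manual_seed(0)
rnn = nn.RNN(input_size, hidden_size)

# weight_ih_l0 (20, 10), weight_hh_l0 (20, 20), bias_ih_l0 (20,), bias_hh_l0 (20,)
for name, p in rnn.named_parameters():
    print(name, tuple(p.shape))

# Explicit re-creation: one uniform draw per parameter, in registration order,
# all sharing the bound 1/sqrt(hidden_size)
torch.manual_seed(0)
stdv = 1.0 / math.sqrt(hidden_size)
expected = [nn.init.uniform_(torch.empty_like(p), -stdv, stdv) for p in rnn.parameters()]

print(all(torch.equal(p, e) for p, e in zip(rnn.parameters(), expected)))  # True
```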

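
Initializing a single layer means calling a `torch.nn.init` function on its parameters. Xavier-uniform weights and zero biases are used here purely as an illustration, not as a recommended choice; the layer sizes are arbitrary.

```python
import torch
import torch.nn as nn

layer = nn.Linear(256, 128)

# torch.nn.init functions modify tensors in place (note the trailing underscore)
# and run under torch.no_grad() internally, so they can be called on parameters.
nn.init.xavier_uniform_(layer.weight)  # U(-a, a), a = sqrt(6 / (fan_in + fan_out)) = 0.125
nn.init.zeros_(layer.bias)

print(layer.bias.abs().sum().item())  # 0.0
print(layer.weight.requires_grad)     # True: the weight is still a trainable parameter
```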

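
One way to complete `init_weights` and apply it to a whole model is `nn.Module.apply`, which calls the function on every submodule (and on the model itself). The body below (Xavier-uniform weights, bias set to the constant 0.01) and the small `Sequential` model are assumptions for illustration; any `torch.nn.init` function could take their place.

```python
import torch
import torch.nn as nn

# Hypothetical body: the same initialization for every nn.Linear,
# whatever activation follows it; other modules are left untouched.
def init_weights(m: nn.Module) -> None:
    if isinstance(m, nn.Linear):
        nn.init.xavier_uniform_(m.weight)
        if m.bias is not None:
            nn.init.constant_(m.bias, 0.01)

model = nn.Sequential(
    nn.Linear(16, 32),
    nn.ReLU(),
    nn.Linear(32, 32),
    nn.Tanh(),
    nn.Linear(32, 1),
)

# apply() visits Sequential, the three Linear layers, ReLU and Tanh;
# only the Linear layers match the isinstance check.
model.apply(init_weights)

linear_layers = [m for m in model.modules() if isinstance(m, nn.Linear)]
print(len(linear_layers))  # 3
print(all(bool(torch.all(m.bias == 0.01)) for m in linear_layers))  # True
```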

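
If the initialization should depend on the activation after all, `torch.nn.init` provides activation-aware schemes. This sketch assumes ReLU-activated layers and uses `nn.init.kaiming_normal_(..., nonlinearity="relu")`, whose standard deviation is `calculate_gain("relu") / sqrt(fan_in) = sqrt(2 / fan_in)`; the helper name `init_relu_linear` is hypothetical. A large layer is used so the sample standard deviation lands close to the theoretical value.

```python
import math
import torch
import torch.nn as nn

# Hypothetical activation-aware variant for Linear layers followed by ReLU
def init_relu_linear(m: nn.Module) -> None:
    if isinstance(m, nn.Linear):
        nn.init.kaiming_normal_(m.weight, mode="fan_in", nonlinearity="relu")
        nn.init.zeros_(m.bias)

torch.manual_seed(0)
layer = nn.Linear(1000, 1000)
init_relu_linear(layer)

# Theoretical std: gain for ReLU is sqrt(2), divided by sqrt(fan_in)
expected_std = nn.init.calculate_gain("relu") / math.sqrt(1000)
print(expected_std)
print(layer.weight.std().item())  # close to expected_std for a layer this large
```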
