torch.nn.parallel.scatter_gather

torch.nn.parallel.scatter_gather.gather is useful for gathering the results of a distributed computation. It takes a sequence of objects, one for each GPU, and collects them on a single target device. In general, PyTorch's nn.parallel primitives can be used independently.

The torch.distributed package provides PyTorch support and communication primitives for multiprocess parallelism. FSDP is a type of data parallelism that shards model parameters, optimizer states, and gradients across DDP ranks. When training with FSDP, the GPU …

torch.gather is a separate, tensor-level function. torch.gather(input, dim, index, *, sparse_grad=False, out=None) → Tensor gathers values along the axis specified by dim. It creates a new tensor from the input tensor by taking, at each output position, the value that index selects along that axis; the output has the same shape as index.
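The indexing rule behind torch.gather is easier to see on a small 2-D case. The following is a minimal pure-Python sketch of that rule, not PyTorch's implementation; the helper name gather_2d is hypothetical and only nested lists are handled. For real tensors, the equivalent call would be torch.gather(input, dim, index).

```python
def gather_2d(rows, dim, index):
    """Pure-Python sketch of the 2-D torch.gather indexing rule.

    dim=0: out[i][j] = rows[index[i][j]][j]
    dim=1: out[i][j] = rows[i][index[i][j]]
    The result has the same shape as `index`.
    """
    if dim not in (0, 1):
        raise ValueError("this sketch only handles 2-D inputs, so dim must be 0 or 1")
    out = []
    for i, index_row in enumerate(index):
        out_row = []
        for j, k in enumerate(index_row):
            # k replaces the coordinate along `dim`; the other coordinate is kept.
            out_row.append(rows[k][j] if dim == 0 else rows[i][k])
        out.append(out_row)
    return out


data = [[1, 2], [3, 4]]
picked = gather_2d(data, 1, [[0, 0], [1, 0]])
print(picked)  # [[1, 1], [4, 3]]
```

With dim=1, each output row picks columns from the matching input row, so the second row [3, 4] indexed by [1, 0] becomes [4, 3].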

From zhuanlan.zhihu.com

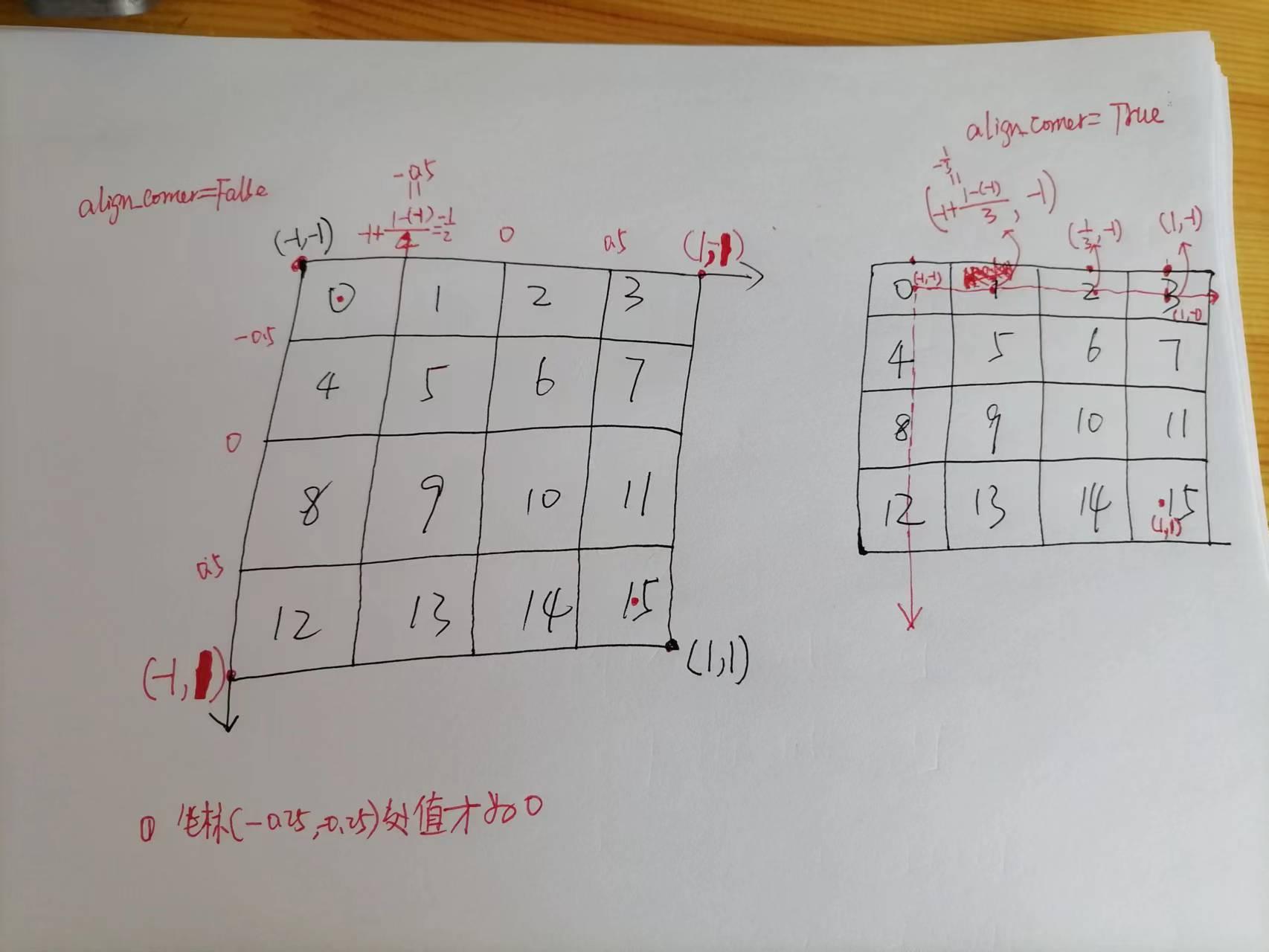

TORCH.NN.FUNCTIONAL.GRID_SAMPLE Zhihu

From dzone.com

ScatterGather in Mule ESB DZone Integration

From github.com

pytorch/scatter_gather.py at master · pytorch/pytorch · GitHub

From zhuanlan.zhihu.com

TORCH.NN.FUNCTIONAL.GRID_SAMPLE Zhihu

From juejin.cn

Understand the meaning of the torch.scatter_ function in seconds (detailed explanation) Juejin

From blog.csdn.net

PyTorch deep learning in practice lesson31_nn.parallel.scatter CSDN Blog

From jan.ucc.nau.edu

Scatter/Gather Pedagogic Modules

From medium.com

Distributed System Scatter/Gather Pattern by Bindu C Medium

From www.cnblogs.com

Torch scatter, scatter_add, and gather X1OO Cnblogs

From www.slideserve.com

PPT Gather/Scatter, Parallel Scan, and Applications PowerPoint

From www.researchgate.net

One iteration in the ScatterGather model. In the Scatter phase, each

From support.terra.bio

Scattergather parallelism Terra Support

From medium.com

Understand torch.scatter. Hope there are less programmers… by Mike

From www.cnblogs.com

Torch scatter, scatter_add, and gather X1OO Cnblogs

From blog.csdn.net

[PyTorch study notes] torch.gather() and tensor.scatter_()_torch.gather and CSDN Blog

From blog.csdn.net

PyTorch distributed training (2): torch.nn.parallel.DistributedDataParallel_2 torch.nn

From www.tutorialexample.com

Understand torch.nn.functional.pad() with Examples PyTorch Tutorial

From blog.csdn.net

PyTorch distributed training (2): torch.nn.parallel.DistributedDataParallel_2 torch.nn

From blog.csdn.net

Illustrated guide to the torch.gather and scatter functions in PyTorch CSDN Blog

From www.youtube.com

Parallel analog to torch.nn.Sequential container YouTube

From www.slideserve.com

PPT Lecture 2 PowerPoint Presentation, free download ID1441221

From blog.csdn.net

PyTorch distributed training (2): torch.nn.parallel.DistributedDataParallel_2 torch.nn

From www.sharetechnote.com

ShareTechnote 5G What is 5G

From www.tutorialexample.com

Understand torch.nn.functional.pad() with Examples PyTorch Tutorial

From blog.csdn.net

gather torch_The data Gather problem in torch.nn.DataParallel: dimension mismatch CSDN Blog

From blog.csdn.net

[PyTorch study notes] torch.gather() and tensor.scatter_()_torch.gather and CSDN Blog

From blog.csdn.net

torch.nn.parallel.DistributedDataParallel usage notes CSDN Blog

From blog.csdn.net

PyTorch deep learning in practice lesson31_nn.parallel.scatter CSDN Blog

From www.youtube.com

Scatter To Gather Transformation Intro to Parallel Programming YouTube

From www.researchgate.net

Looplevel representation for torch.nn.Linear(32, 32) through

From blog.csdn.net

[Detailed explanation] The torch.nn.Fold and torch.nn.Unfold operations_torch.unfold CSDN Blog

From zhuanlan.zhihu.com

torch_satter scatter_add computation process Zhihu

From www.cnblogs.com

Torch scatter, scatter_add, and gather X1OO Cnblogs

From www.youtube.com

Scatter To Gather Transformation Intro to Parallel Programming YouTube

From blog.csdn.net

PyTorch deep learning in practice lesson31_nn.parallel.scatter CSDN Blog

From blog.csdn.net

[Detailed explanation] The torch.nn.Fold and torch.nn.Unfold operations_torch.unfold CSDN Blog