Torch.einsum Onnx

torch.einsum(equation, *operands) → Tensor sums the product of the elements of the input operands along the dimensions specified by the equation. Its ONNX counterpart, the Einsum operator, evaluates algebraic tensor operations on a sequence of tensors, using the Einstein summation convention. The operator's type constraint is T in (tensor(bfloat16), tensor(double), tensor(float), tensor(float16), tensor(int32), tensor(int64), tensor(uint32), …).

Several of the pages collected here concern export problems. One issue reports: "Exporting models which use einsum in their forward() method does not work." A related request argues that, similar to batchnorm, addmm and softmax, it would be better both aesthetically and performance-wise to export aten::einsum with onnx::einsum. One user describes a workaround: "I found the solution by simply adding do_constant_folding=False at torch.onnx.export in mmdeploy/apis/onnx/export.py"; the snippet is cut off before it says which models this works for.

The tutorial snippet expands on this to describe how to convert a model defined in PyTorch into the ONNX format. Other converters appear in a short list: one converts models from TensorFlow, and onnxmltools converts models from LightGBM, XGBoost, …
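To make "sums the product of the elements of the input operands along dimensions specified" concrete, here is a minimal pure-Python sketch of that rule. It is an illustration only, not how PyTorch or any ONNX runtime implements Einsum. The names `naive_einsum`, `shape_of` and `get_item` are hypothetical helpers, operands are assumed to be rectangular nested lists, and only the explicit `inputs->output` form without ellipsis is handled.

```python
from itertools import product


def shape_of(nested):
    """Return the shape of a rectangular, non-empty nested list."""
    shape = []
    while isinstance(nested, list):
        shape.append(len(nested))
        nested = nested[0]
    return tuple(shape)


def get_item(nested, index):
    """Index a nested list with a sequence of integer positions."""
    for i in index:
        nested = nested[i]
    return nested


def naive_einsum(equation, *operands):
    """Hypothetical helper: evaluate an explicit einsum equation by brute force."""
    inputs, output = equation.replace(" ", "").split("->")
    input_subs = inputs.split(",")

    # Record the size of every index label and check that repeats agree.
    sizes = {}
    for subs, operand in zip(input_subs, operands):
        for label, dim in zip(subs, shape_of(operand)):
            if sizes.setdefault(label, dim) != dim:
                raise ValueError(f"inconsistent size for index {label!r}")

    # Labels missing from the output are the ones that get summed over.
    summed = [label for label in sizes if label not in output]

    def entry(out_index):
        bound = dict(zip(output, out_index))
        total = 0
        for sum_index in product(*(range(sizes[label]) for label in summed)):
            bound.update(zip(summed, sum_index))
            term = 1
            for subs, operand in zip(input_subs, operands):
                term *= get_item(operand, [bound[label] for label in subs])
            total += term
        return total

    def build(prefix, remaining):
        # Build the output one axis at a time; an empty output yields a scalar.
        if not remaining:
            return entry(prefix)
        return [build(prefix + (i,), remaining[1:])
                for i in range(sizes[remaining[0]])]

    return build((), output)


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
matmul_result = naive_einsum("ik,kj->ij", a, b)  # matrix product
trace_result = naive_einsum("ii->", a)           # sum of the diagonal
```

In this sketch every label that is absent from the right-hand side is summed over, which is why `ik,kj->ij` behaves like a matrix product and `ii->` like a trace.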

From blog.csdn.net

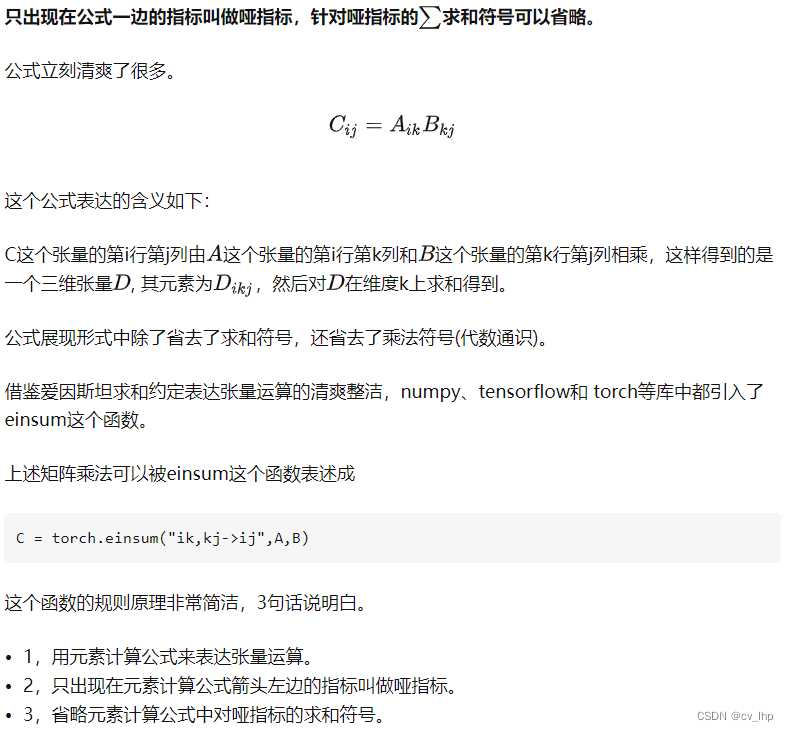

torch.einsum()_kvs = torch.einsum("lhm,lhd->hmd", ks, vs) (CSDN blog)
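The equation in the caption above reads `lhm,lhd->hmd` once its arrow is restored. The sketch below shows what that contraction computes and how a module using it might be exported. The tensor sizes, the class name `KVSum` and the helpers `kvs_with_matmul` and `export_kvsum` are assumptions made for illustration. The export call passes `opset_version=12` because Einsum was added to ONNX in opset 12, and `do_constant_folding=False` only mirrors the workaround quoted on this page. Whether either setting is needed depends on the PyTorch version and the model.

```python
import torch


class KVSum(torch.nn.Module):
    """Hypothetical module whose forward() uses the einsum from the caption."""

    def forward(self, ks, vs):
        # Sum over l; keep h as a batch axis and m, d as the output matrix axes.
        return torch.einsum("lhm,lhd->hmd", ks, vs)


def kvs_with_matmul(ks, vs):
    """The same contraction written with permute and a batched matmul."""
    # (l, h, m) -> (h, m, l) and (l, h, d) -> (h, l, d), then (h, m, l) @ (h, l, d).
    return torch.matmul(ks.permute(1, 2, 0), vs.permute(1, 0, 2))


def export_kvsum(target):
    """Export KVSum to ONNX; target is a file path or a binary file-like object."""
    ks = torch.randn(4, 2, 3)  # assumed sizes: l=4, h=2, m=3
    vs = torch.randn(4, 2, 5)  # assumed sizes: l=4, h=2, d=5
    torch.onnx.export(
        KVSum(),
        (ks, vs),
        target,
        opset_version=12,           # first opset that defines Einsum
        do_constant_folding=False,  # the workaround quoted on this page
    )
```

Rewriting the contraction with ordinary operators, as `kvs_with_matmul` does, is one option when a target runtime lacks Einsum support, at the cost of a less readable model definition.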

From imagetou.com

PyTorch ONNX Conversion for Dynamic Model (Image to u)

From blog.csdn.net

[Deep learning model porting] Replacing torch.einsum with a combination of ordinary torch operators (CSDN blog)

From zanote.net

[PyTorch] A thorough guide to the arguments and usage of torch.einsum: performing complex tensor operations with a short string by using the Einstein summation convention

From www.ppmy.cn

torch.einsum() usage guide

From www.ppmy.cn

torch.einsum() usage guide

From github.com

torch.einsum() is not supported? · Issue #1362 · Tencent/TNN · GitHub

From segmentfault.com

How do I call a model exported by torch.onnx.export from Python? (SegmentFault)

From github.com

GitHub justinchuby/torchonnxintegrationtests Can this model be

From www.zhihu.com

PyTorch: comparing torch.einsum and torch.matmul, which is the better choice? (Zhihu)

From github.com

[RFC] Adding an ONNX to Torch conversion · Issue #1639 · onnx/onnx-mlir

From github.com

[ONNX] Remove einsum_helper from opset12 public functions · Issue

From baekyeongmin.github.io

Using Einsum (Yeongmin’s Blog)

From github.com

torch.onnx.export primConstant type and ONNX Expand input shape

From www.scaler.com

Exporting to ONNX using torch.onnx API (Scaler Topics)

From blog.csdn.net

[Deep learning model porting] Replacing torch.einsum with a combination of ordinary torch operators (CSDN blog)

From gitcode.csdn.net

[Analysis] How to learn torch.einsum() elegantly (ViatorSun, GitCode open-source community)

From blog.csdn.net

Pitfalls encountered when converting torch to ONNX (part 2): tracerwarning torch.tensor results are registered (CSDN blog)

From discuss.pytorch.org

Torch.onnx.export for nn.LocalResponseNorm deployment (PyTorch Forums)

From blog.csdn.net

torch.einsum explained in detail (CSDN blog)

From xadupre.github.io

Compares implementations of Einsum (Python Runtime for ONNX)

From blog.csdn.net

TensorRT: great news, TensorRT 8.2 EA and later support the Einsum (Einstein summation) operator (CSDN blog)

From blog.csdn.net

Deep learning 9.20 (for personal study only): torch.einsum('nkctv,kvw->nctw', (x, a)) (CSDN blog)

From www.cnblogs.com

Notes: EINSUM IS ALL YOU NEED, EINSTEIN SUMMATION IN DEEP LEARNING (Rogn)

From discuss.pytorch.org

Pytorch 1.13 onnx export is with TensorRT conversion

From discuss.pytorch.org

Speed difference in torch.einsum and torch.bmm when adding an axis

From github.com

torch.einsum 400x slower than numpy.einsum on a simple contraction

From hxetkiwaz.blob.core.windows.net

Torch Einsum Speed at Cornelius Dixon blog

From blog.csdn.net

torch.einsum()_kvs = torch.einsum("lhm,lhd->hmd", ks, vs) (CSDN blog)

From hxetkiwaz.blob.core.windows.net

Torch Einsum Speed at Cornelius Dixon blog

From github.com

[Shape Inference] Einsum Ellipsis represents dimensions

From github.com

onnx export einsum not supported · Issue #26893 · pytorch/pytorch · GitHub

From blog.csdn.net

torch.einsum()_kvs = torch.einsum("lhm,lhd->hmd", ks, vs) (CSDN blog)

From blog.csdn.net

torch.einsum()_kvs = torch.einsum("lhm,lhd->hmd", ks, vs) (CSDN blog)

From github.com

GitHub PINTO0309/spo4onnx Simple tool for partial optimization of

From discuss.pytorch.org

Module torch.onnx export create a graph containing an operator named