Dice Coefficient in PyTorch. The Dice coefficient measures the overlap between a predicted segmentation A and the ground truth B: Dice = 2|A ∩ B| / (|A| + |B|). It ranges from 0 to 1, where a value closer to 1 indicates a higher degree of overlap and thus better segmentation. In PyTorch Ignite, ignite.metrics.DiceCoefficient(cm, ignore_index=None) calculates the Dice coefficient for a given ConfusionMatrix metric. In torchmetrics, the metric is Dice(zero_division=0, num_classes=None, threshold=0.5, average='micro', mdmc_average='global', ignore_index=None, top_k=None, multiclass=None, **kwargs). For multiclass segmentation, you can use dice_score for binary classes and then apply it to the binary map of each class in turn to get a multiclass Dice score. The gradients are updated on the basis of the loss, while the Dice score is the evaluation criterion used to save the best model checkpoint. A recurring question is how the Dice calculation could break the computation graph: thresholding and argmax are not differentiable, so a hard Dice score can be used for evaluation but not backpropagated through. Two forum replies on the topic survive only as fragments: "I'm assuming your images/segmentation maps ..." and "Even the one on torchmetrics seems to not be a ...".
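The points above can be sketched in plain PyTorch. This is a minimal illustration under stated assumptions, not the Ignite or torchmetrics implementation: the helper names dice_score, multiclass_dice and soft_dice_loss are hypothetical, the choice to score two empty masks as 1.0 is an assumption, and the soft loss assumes a binary task with raw logits as input.

```python
import torch


def dice_score(pred_mask: torch.Tensor, true_mask: torch.Tensor) -> float:
    """Hard Dice for two binary masks: 2|A ∩ B| / (|A| + |B|)."""
    pred = pred_mask.bool()
    true = true_mask.bool()
    intersection = (pred & true).sum().item()
    total = pred.sum().item() + true.sum().item()
    # Assumption: two empty masks are treated as a perfect match.
    if total == 0:
        return 1.0
    return 2.0 * intersection / total


def multiclass_dice(pred_labels: torch.Tensor, true_labels: torch.Tensor, num_classes: int):
    """Build one binary map per class, score each, then average."""
    scores = [
        dice_score(pred_labels == c, true_labels == c)
        for c in range(num_classes)
    ]
    return sum(scores) / num_classes, scores


def soft_dice_loss(logits: torch.Tensor, target: torch.Tensor, eps: float = 1e-6) -> torch.Tensor:
    """Differentiable Dice loss: uses probabilities instead of thresholded masks."""
    probs = torch.sigmoid(logits)
    target = target.float()
    intersection = (probs * target).sum()
    denom = probs.sum() + target.sum()
    return 1.0 - (2.0 * intersection + eps) / (denom + eps)


# Evaluation: label maps with three classes (0, 1, 2).
pred = torch.tensor([[0, 1, 1], [2, 2, 0]])
true = torch.tensor([[0, 1, 2], [2, 2, 0]])
mean_dice, per_class = multiclass_dice(pred, true, num_classes=3)

# Training: the soft loss keeps the graph intact, the hard mask does not.
logits = torch.zeros(2, 3, requires_grad=True)
loss = soft_dice_loss(logits, torch.ones(2, 3))
loss.backward()  # gradients reach `logits`
hard_mask = torch.sigmoid(logits) > 0.5  # comparison output carries no gradient
```

The split mirrors the text: soft_dice_loss drives the gradient updates, while dice_score and multiclass_dice are only evaluated (for example under torch.no_grad()) to decide which checkpoint to keep.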

From towardsdatascience.com

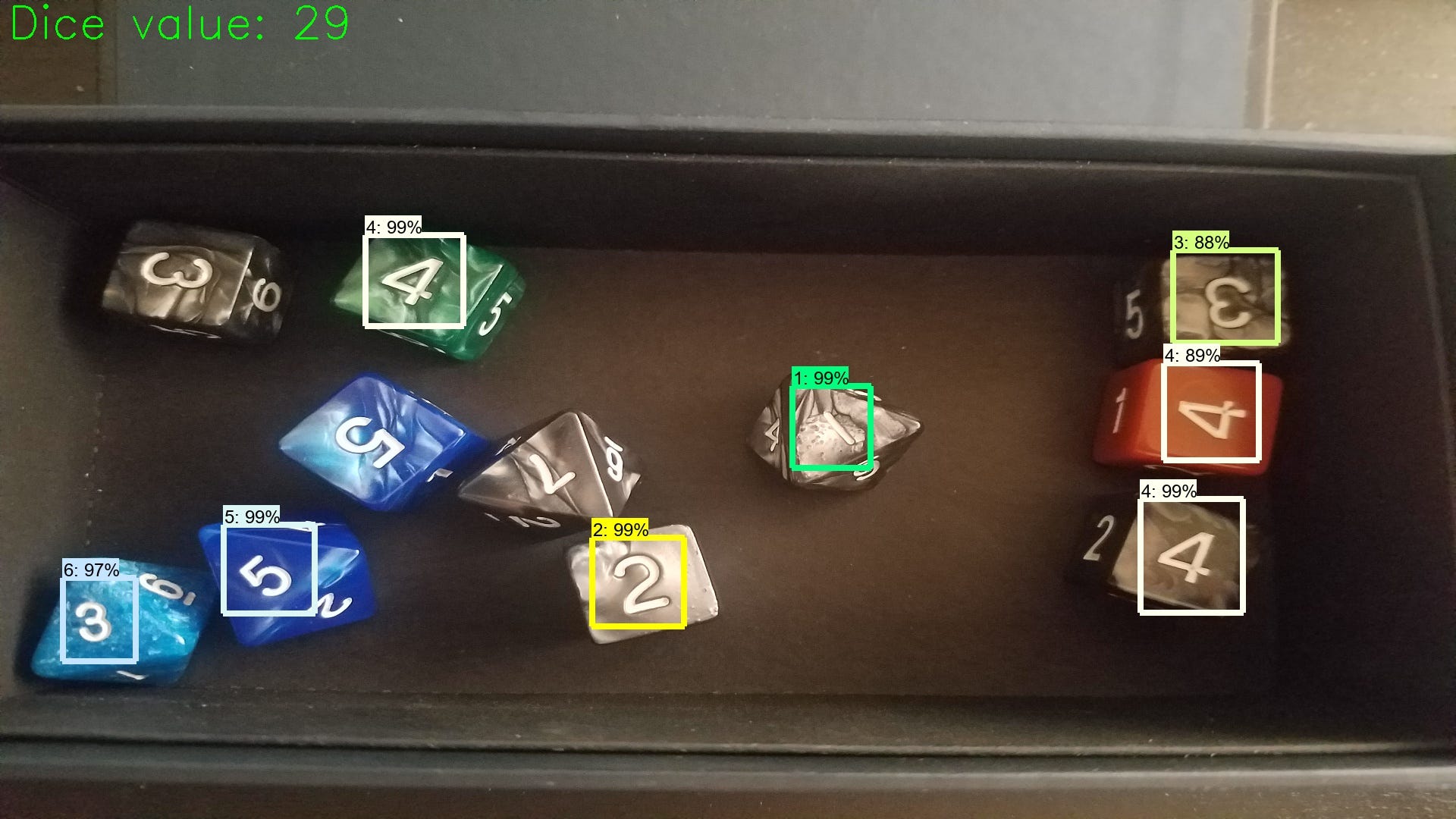

A Two Stage Stage Approach to Counting Dice Values with Tensorflow and

From learnopencv.com

Document Segmentation Using Deep Learning in PyTorch

From discuss.pytorch.org

Dice loss negative PyTorch Forums

From stackoverflow.com

tensorflow How to create Hybrid loss consisting from dice loss and

From www.researchgate.net

The dice coefficient distribution of different methods

From blog.csdn.net

Common loss functions for medical image segmentation (with PyTorch and Keras code), introduction to BCE-Dice loss, CSDN blog

From blog.csdn.net

Dice coefficient and Dice loss, CSDN blog

From www.researchgate.net

Dice coefficient, Precision, recall and accuracy graphs for 3stage

From www.researchgate.net

(A) Distribution of Dice coefficient between the CBCTs and μCT ROI

From www.researchgate.net

Boxplot of Dice Coefficient Score (DSC), mean surface distance (MSD

From discuss.pytorch.org

Increase dice score vision PyTorch Forums

From www.researchgate.net

Distribution of Dice coefficients, measuring the performance of our CNV

From github.com

Dice Coefficient Not changing · Issue 240 · · GitHub

From github.com

how to get approximate value as the description Dice coefficient of 0.

From github.com

Dice coefficient no change during training,is always very close to 0

From www.researchgate.net

Calculation of segmentation quality metrics Dice similarity

From www.quantib.com

How to evaluate AI radiology algorithms

From github.com

GitHub shuaizzZ/DiceLossPyTorch implementation of the Dice Loss in

From www.researchgate.net

Dice coefficients comparing the thresholded positive and negative

From www.researchgate.net

(a) Dice coefficients between manual and automated segmentations for

From github.com

GitHub miaotianyi/pytorchconfusionmatrix Differentiable precision

From www.researchgate.net

The curve of the Dice coefficient over the epochs

From www.researchgate.net

Example of Dice coefficient.

From minimin2.tistory.com

[Deep Learning] Dice Coefficient explained, with PyTorch code (a segmentation evaluation method)

From theaisummer.com

Deep learning in medical imaging 3D medical image segmentation with

From oncologymedicalphysics.com

Image Registration Oncology Medical Physics

From www.researchgate.net

Validation set trends of loss and Dice coefficients for each method in

From www.programmersought.com

[Pytorch] Dice coefficient and Dice Loss loss function implementation

From www.researchgate.net

2Plot for IoU & Dice Coefficient vs Epoch The plots of IoU and Dice