RDD getNumPartitions

`RDD.getNumPartitions()` returns the number of partitions in an RDD (Resilient Distributed Dataset) as an `int`. For example, in PySpark, where `sc` is the SparkContext, `rdd = sc.parallelize([1, 2, 3, 4], 2)` splits the four elements into two partitions, so `rdd.getNumPartitions()` returns `2`. In Scala, the equivalent is `rdd.getNumPartitions`.

A DataFrame does not have a `getNumPartitions()` method of its own. To find out how many partitions a DataFrame has, call the method on the DataFrame's underlying RDD, for example `df.rdd.getNumPartitions()` in PySpark (or `df.rdd.getNumPartitions` in Scala).

Once you have the number of partitions, you can estimate the average size of a partition by dividing the total size of the RDD by the number of partitions. This is only an approximation: if the data is skewed, individual partitions can be much larger or smaller than the average.
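The two steps above (getting the partition count from either an RDD or a DataFrame, then averaging the size) can be sketched as two small helpers. This is a minimal sketch, not code from any of the sources listed here. It assumes classic PySpark (not Spark Connect), where RDDs expose `getNumPartitions()` and DataFrames expose an underlying `.rdd`; the helper names `num_partitions` and `avg_partition_size` are hypothetical, and how you measure the total size in bytes is left to you.

```python
def num_partitions(data):
    """Return the partition count of a PySpark RDD or DataFrame (hypothetical helper).

    RDDs have getNumPartitions(); DataFrames do not, so for a DataFrame
    we ask its underlying RDD (data.rdd) instead.
    """
    if hasattr(data, "getNumPartitions"):  # an RDD
        return data.getNumPartitions()
    return data.rdd.getNumPartitions()  # a DataFrame: go through df.rdd


def avg_partition_size(total_size_bytes, partitions):
    """Estimate the average partition size (hypothetical helper).

    This is simply total size / partition count, so it hides skew:
    real partitions can be much larger or smaller than the value returned.
    """
    if partitions <= 0:
        raise ValueError("partition count must be positive")
    return total_size_bytes / partitions
```

With a running SparkContext `sc`, `num_partitions(sc.parallelize([1, 2, 3, 4], 2))` should return `2`, and `avg_partition_size(1_000_000, 2)` returns `500000.0`. Dispatching on `hasattr` keeps the helper free of PySpark imports, at the cost of assuming the caller passes either an RDD or a DataFrame.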

From www.linuxprobe.com

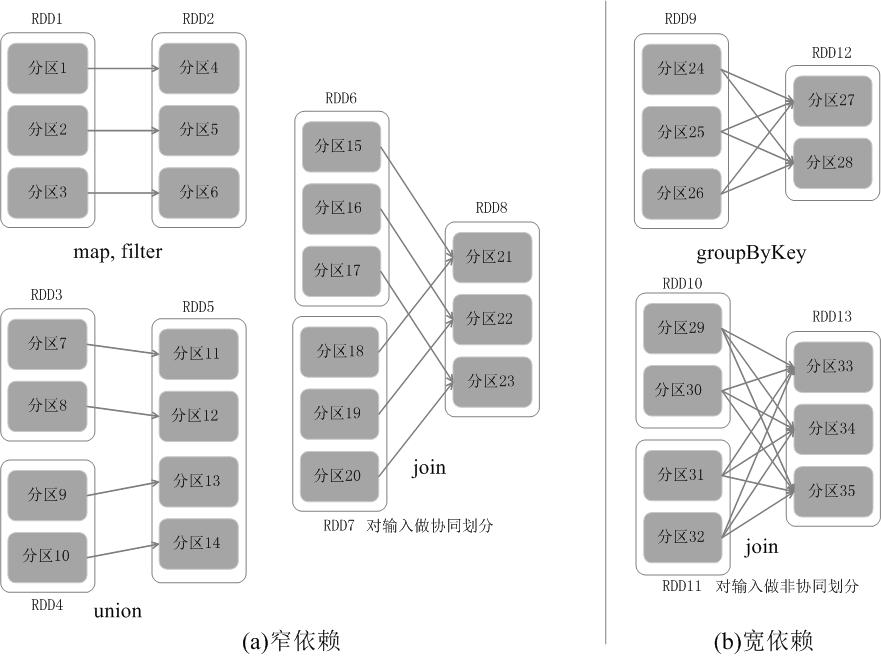

The RDD execution mechanism, 《Linux就该这么学》

From blog.csdn.net

Spark Study Notes 13: Getting the Number of Partitions of an RDD (CSDN blog)

From erikerlandson.github.io

Some Implications of Supporting the Scala drop Method for Spark RDDs

From loensgcfn.blob.core.windows.net

Rdd.getnumpartitions Pyspark at James Burkley blog

From slidesplayer.org

Apache Spark Tutorial: Big Data Distributed Computing, 박영택

From abs-tudelft.github.io

Resilient Distributed Datasets for Big Data Lab Manual

From www.simplilearn.com

RDDs in Spark Tutorial Simplilearn

From intellipaat.com

What is RDD in Spark Learn about spark RDD Intellipaat

From www.hadoopinrealworld.com

What is RDD? Hadoop In Real World

From www.researchgate.net

RDD in mouse liver and adipose identified by RNASeq. (A) RDD numbers

From matnoble.github.io

Illustrated: The Five Key Properties of Spark RDDs (MatNoble)

From blog.csdn.net

Big Data with Python, PySpark (Part 5): RDDs in Detail (CSDN blog)

From www.chegg.com

Solved Use the following line of code to create an RDD

From www.geeksforgeeks.org

Show partitions on a Pyspark RDD

From blex.me

A Taste of Spark 1. What Is Spark? (mildsalmon)

From www.cnblogs.com

4. RDD Operations, 斌BEN (cnblogs)

From github.com

GitHub hbaserdd/hbaserddexamples HBase RDD example project

From www.201301.com

Threat Detection with ZAT Combined with Machine Learning (Part 3), 网盾 Security Training

From www.databricks.com

Resilient Distributed Dataset (RDD) Databricks

From klaojgfcx.blob.core.windows.net

How To Determine Number Of Partitions In Spark at Troy Powell blog

From zhuanlan.zhihu.com

RDD (Part 1): Basic Concepts (Zhihu)

From www.turing.com

Resilient Distribution Dataset Immutability in Apache Spark

From zhuanlan.zhihu.com

RDD (Part 2): RDD Operators (Zhihu)

From erikerlandson.github.io

Implementing an RDD scanLeft Transform With Cascade RDDs tool monkey

From sparkbyexamples.com

PySpark Convert DataFrame to RDD Spark By {Examples}

From blog.csdn.net

Spark: Default Number of Partitions When Creating RDDs and DataFrames in Various Cases (CSDN blog)

From minman2115.github.io

Spark core concepts Minman's Data Science Study Notes

From www.researchgate.net

RDD conversion flowchart in the local clustering stage

From www.oreilly.com

1. Introduction to Apache Spark A Unified Analytics Engine Learning