SageMaker Open S3 File

In this tutorial, you'll learn how to load data from AWS S3 into AWS SageMaker using boto3 or awswrangler. The most convenient way to store data for machine learning and analysis is an S3 bucket, which can hold any type of data, such as CSV files. Set up an S3 bucket to upload training datasets and save training output data for your hyperparameter tuning job. The easiest way I've found to do this (as an AWS beginner) is to set up an IAM role for all of your SageMaker notebooks, which allows them (among other things) to read data from S3 buckets. Use pip or conda to install s3fs. The code sample for importing a CSV file from S3 was tested in a SageMaker notebook. I have focused on Amazon SageMaker in this article, but if you have the boto3 SDK set up correctly on your local... Other capabilities mentioned include the ability to create a pipeline to automate the machine... and automatically spinning down hardware resources once the task is complete.
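
The boto3 route described above can be sketched roughly as follows. This is a minimal illustration, not the article's tested sample: the function name `read_csv_from_s3`, its `s3_client` parameter, and any bucket or key names are assumptions made for the example, and it presumes the notebook's IAM role is allowed to read the bucket. It parses the file with the standard-library `csv` module to stay dependency-light.

```python
import csv
import io


def read_csv_from_s3(bucket, key, s3_client=None):
    """Return the rows of a CSV object stored in S3 as a list of dicts.

    `bucket` and `key` are placeholders for your own bucket name and
    object key. `s3_client` is an optional, illustrative parameter so
    that a stub client can be passed in for testing.
    """
    if s3_client is None:
        # Imported lazily; in a SageMaker notebook boto3 picks up the
        # credentials of the attached IAM role automatically.
        import boto3

        s3_client = boto3.client("s3")

    # get_object returns a dict whose "Body" is a readable byte stream.
    response = s3_client.get_object(Bucket=bucket, Key=key)
    text = response["Body"].read().decode("utf-8")

    # The first CSV line is treated as the header row.
    return list(csv.DictReader(io.StringIO(text)))
```

In a notebook this might be called as `read_csv_from_s3("my-example-bucket", "data/train.csv")`, where both names are hypothetical. If s3fs is installed, `pandas.read_csv` can also read an `s3://bucket/key` path directly, which is usually shorter when a DataFrame is what you want.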

From www.activeloop.ai

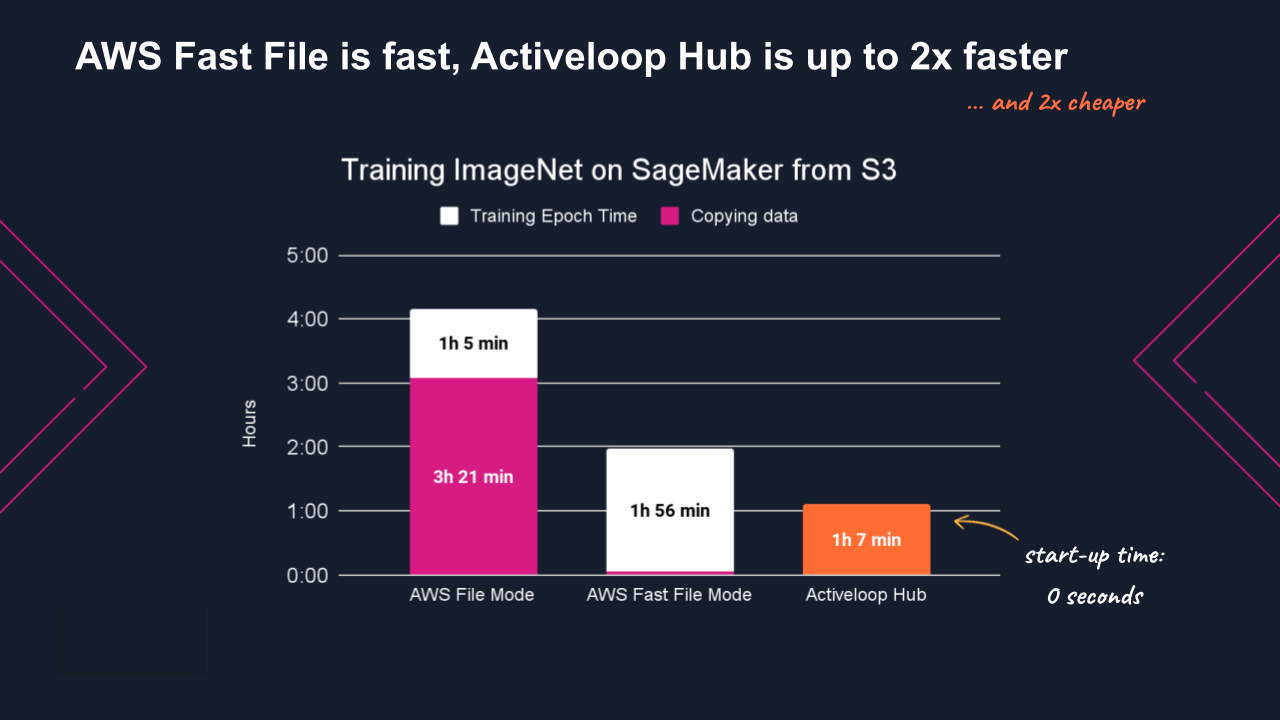

Low AWS GPU usage? Achieve up to 95% GPU utilization in SageMaker with Hub

From docs.netapp.com

Part 1 Integrating AWS FSx for NetApp ONTAP (FSxN) as a private S3

From docs.amazonaws.cn

Getting started with using Amazon SageMaker Canvas Amazon SageMaker

From dev.classmethod.jp

How to work with data stored in S3 from Amazon SageMaker (using EMR and Glue) DevelopersIO

From docs.amazonaws.cn

Access training data Amazon SageMaker

From github.com

S3 manifest file cannot be used for SageMaker Processing job defined by

From www.vedereai.com

Deploy and manage machine learning pipelines with Terraform using

From www.sqlshack.com

How to create an AWS SageMaker Instance

From stackoverflow.com

amazon s3 KeyError 'ETag' while trying to load data from S3 to

From blog.claydesk.com

Amazon SageMaker Studio Integrated Development Environment For ML

From forums.fast.ai

SageMaker & S3 Bucket Images Part 1 (2019) fast.ai Course Forums

From www.youtube.com

Tutorial AWS SageMaker, preprocessing data with Spark and S3 YouTube

From www.youtube.com

Working With Custom S3 Buckets and AWS SageMaker YouTube

From livebook.manning.com

B Setting up and using S3 to store files · Machine Learning for

From sagemaker-examples.readthedocs.io

Importing Dataset into Data Wrangler using SageMaker Studio - Amazon

From www.run.ai

SageMaker Tutorial 3 Steps to Run Your Own Model in SageMaker

From towardsdatascience.com

A quick guide to using Spot instances with Amazon SageMaker by

From www.persistent.com

Understanding AWS SageMaker Capabilities A Detailed Exploration

From github.com

sagemaker.session.download_data() is unable to download S3 content

From ec2-18-141-20-153.ap-southeast-1.compute.amazonaws.com

How to build Machine Learning Models quickly using Amazon Sagemaker

From www.missioncloud.com

Amazon SageMaker Studio Tutorial

From 000200.awsstudygroup.com

Export Data to S3 SageMaker

From www.reddit.com

Issue Sagemaker Deploying trained model from S3 returns r/aws

From github.com

jupyterlabs3browser/SAGEMAKER.md at master · IBM/jupyterlabs3

From predictivehacks.com

How to Connect Amazon SageMaker Studio Lab with S3 Predictive Hacks

From www.youtube.com

How To Pull Data into S3 using AWS Sagemaker YouTube

From www.edlitera.com

The Ultimate Guide to Amazon SageMaker Edlitera

From aws.amazon.com

Build a powerful question answering bot with Amazon SageMaker, Amazon

From saturncloud.io

Loading S3 Data into Your AWS SageMaker Notebook A Guide Saturn

From www.youtube.com

How to Query CSV, json files in S3 using Python and Sagemaker YouTube