Databricks Describe Partitions . Spark by default uses 200 partitions when doing transformations. Returns the basic metadata information of a table. This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when you should use. You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and. The 200 partitions might be too large if a user is working with small. To use partitions, you define the set of partitioning column when you create a table by including the partitioned by clause.

from data-flair.training

Returns the basic metadata information of a table. This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when you should use. To use partitions, you define the set of partitioning column when you create a table by including the partitioned by clause. Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and. You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. Spark by default uses 200 partitions when doing transformations. This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. The 200 partitions might be too large if a user is working with small.

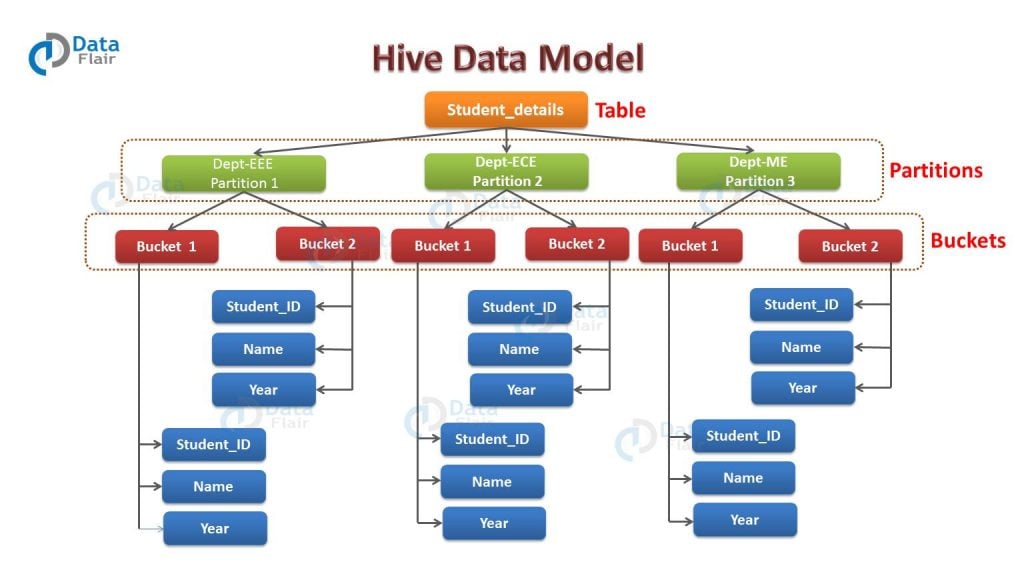

Hive Partitioning vs Bucketing Advantages and Disadvantages DataFlair

Databricks Describe Partitions This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and. You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when you should use. Spark by default uses 200 partitions when doing transformations. The 200 partitions might be too large if a user is working with small. To use partitions, you define the set of partitioning column when you create a table by including the partitioned by clause. Returns the basic metadata information of a table. This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use.

From www.linkedin.com

Databricks SQL How (not) to partition your way out of performance Databricks Describe Partitions This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. The 200 partitions might be too large if a user is working with small. Spark by default uses 200 partitions when doing transformations. Returns the basic metadata information of a table. To use partitions, you define the set of. Databricks Describe Partitions.

From github.com

dbtdatabricks 1.6.X overeager introspection (`describe extended Databricks Describe Partitions This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when you should use. Spark by default uses 200 partitions when doing transformations. Returns the basic metadata information of a table. Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and. To use partitions, you. Databricks Describe Partitions.

From exoxwqtpl.blob.core.windows.net

Partition Table Databricks at Jamie Purington blog Databricks Describe Partitions Spark by default uses 200 partitions when doing transformations. This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when you should use. You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. Returns the basic metadata information of a table. To use. Databricks Describe Partitions.

From www.databricks.com

Serverless Continuous Delivery with Databricks and AWS CodePipeline Databricks Describe Partitions This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. To use partitions, you define the set of partitioning column when you create a table by including the partitioned by clause. This article provides an overview of how you can partition tables on azure databricks and specific recommendations around. Databricks Describe Partitions.

From www.kytheralabs.com

Kythera Labs Improving Data Quality Through the Medallion Architecture Databricks Describe Partitions This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. Spark by default uses 200 partitions when doing transformations. The 200 partitions might be too large if a user is working with small. Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column,. Databricks Describe Partitions.

From pdeyhim.medium.com

Running the most efficient data warehouse with Databricks serverless Databricks Describe Partitions This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. Spark by default uses 200 partitions when doing transformations. Returns the basic metadata information of a table. This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when you should use.. Databricks Describe Partitions.

From www.exam4training.com

Which of the following locations hosts the driver and worker nodes of a Databricks Describe Partitions You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and. This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. The 200 partitions. Databricks Describe Partitions.

From exolwjxvu.blob.core.windows.net

Partition Key Databricks at Cathy Dalzell blog Databricks Describe Partitions Spark by default uses 200 partitions when doing transformations. Returns the basic metadata information of a table. You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. The 200 partitions might be too large if a user is working with small. Replace with the name of your delta table, <<strong>partition</strong>_column> with. Databricks Describe Partitions.

From thebrandhopper.com

Databricks Success Story A Data Storage Giant Born Out Of UC Berkeley Databricks Describe Partitions Spark by default uses 200 partitions when doing transformations. Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and. This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when you should use. This article provides an overview of how you can partition tables on. Databricks Describe Partitions.

From www.databricks.com

Orchestrate Databricks on AWS with Airflow Databricks Blog Databricks Describe Partitions Spark by default uses 200 partitions when doing transformations. You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and. To use partitions, you define the set of partitioning column when you create a table. Databricks Describe Partitions.

From select.dev

Introduction to Snowflake's MicroPartitions Databricks Describe Partitions You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. The 200 partitions might be too large if a user is working with small. Returns the basic metadata information of. Databricks Describe Partitions.

From www.confluent.io

Databricks Databricks Describe Partitions This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when you should use. The 200 partitions might be too large if a user is working with small. To use partitions, you define the set of partitioning column when you create a table by including the partitioned by clause. This article provides. Databricks Describe Partitions.

From amandeep-singh-johar.medium.com

Maximizing Performance and Efficiency with Databricks ZOrdering Databricks Describe Partitions The 200 partitions might be too large if a user is working with small. You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. Returns the basic metadata information of a table. This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when. Databricks Describe Partitions.

From data-flair.training

Hive Partitioning vs Bucketing Advantages and Disadvantages DataFlair Databricks Describe Partitions Spark by default uses 200 partitions when doing transformations. This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when you should use. Returns the basic metadata information of a table. You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. To use. Databricks Describe Partitions.

From datasolut.com

Der Databricks Unity Catalog einfach erklärt Datasolut GmbH Databricks Describe Partitions This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when you should use. To use partitions, you define the set of partitioning column when you create a table by including. Databricks Describe Partitions.

From loetqojdd.blob.core.windows.net

Partition Real Analysis at Christine Philbrick blog Databricks Describe Partitions This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and. To use partitions, you define the set of partitioning column when you create a table by including the partitioned by clause. The. Databricks Describe Partitions.

From www.datainaction.dev

Databricks Como funciona o Column Mapping (Rename e Drop columns) Databricks Describe Partitions Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and. The 200 partitions might be too large if a user is working with small. To use partitions, you define the set of partitioning column when you create a table by including the partitioned by clause. This article provides an overview of how you. Databricks Describe Partitions.

From www.databricks.com

Databricks Workflows Databricks Databricks Describe Partitions Spark by default uses 200 partitions when doing transformations. You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when you should use. This article provides an overview of how you can partition. Databricks Describe Partitions.

From exolwjxvu.blob.core.windows.net

Partition Key Databricks at Cathy Dalzell blog Databricks Describe Partitions To use partitions, you define the set of partitioning column when you create a table by including the partitioned by clause. Returns the basic metadata information of a table. Spark by default uses 200 partitions when doing transformations. You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. This article provides. Databricks Describe Partitions.

From meme2515.github.io

Databricks 와 관리형 분산 처리 서비스 Databricks Describe Partitions Spark by default uses 200 partitions when doing transformations. This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. To use partitions, you define the set of partitioning column when. Databricks Describe Partitions.

From www.graphable.ai

Databricks Architecture A Concise Explanation Databricks Describe Partitions This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when you should use. To use partitions, you define the set of partitioning column when you create a table by including the partitioned by clause. This article provides an overview of how you can partition tables on databricks and specific recommendations around. Databricks Describe Partitions.

From www.databricks.com

Databricks Terraform Provider Is Now Generally Available Databricks Blog Databricks Describe Partitions This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when you should use. The 200 partitions might be too large if a user is working with small. To use partitions, you define the set of partitioning column when you create a table by including the partitioned by clause. You can retrieve. Databricks Describe Partitions.

From docs.acceldata.io

Databricks Jobs Visualizations Acceldata Data Observability Cloud Databricks Describe Partitions You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. Returns the basic metadata information of a table. Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and. The 200 partitions might be too large if a user is working with small. To use. Databricks Describe Partitions.

From www.youtube.com

Databricks and the Data Lakehouse YouTube Databricks Describe Partitions Returns the basic metadata information of a table. You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and. This article provides an overview of how you can partition tables on databricks and specific recommendations. Databricks Describe Partitions.

From docs.gcp.databricks.com

Data lakehouse architecture Databricks wellarchitected framework Databricks Describe Partitions This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and. This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when you should use.. Databricks Describe Partitions.

From www.nextlytics.com

Databricks SQL and Qlik Comparing Data Visualization Tools Databricks Describe Partitions Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and. Returns the basic metadata information of a table. The 200 partitions might be too large if a user is working with small. You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. Spark by. Databricks Describe Partitions.

From www.databricks.com

Databricks Assistant Databricks Databricks Describe Partitions This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. The 200 partitions might be too large if a user is working with small. To use partitions, you define the set of partitioning column when you create a table by including the partitioned by clause. You can retrieve detailed. Databricks Describe Partitions.

From doitsomething.com

What Is Databricks The Complete Beginner’s Guide [2023] Do It Something Databricks Describe Partitions Returns the basic metadata information of a table. Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and. The 200 partitions might be too large if a user is working with small. You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. To use. Databricks Describe Partitions.

From www.cockroachlabs.com

What is data partitioning, and how to do it right Databricks Describe Partitions The 200 partitions might be too large if a user is working with small. To use partitions, you define the set of partitioning column when you create a table by including the partitioned by clause. Returns the basic metadata information of a table. Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and.. Databricks Describe Partitions.

From www.youtube.com

11. DataFrame Functions Describe in DatabricksDatabricks Tutorial for Databricks Describe Partitions You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when you should use. Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and. To use. Databricks Describe Partitions.

From docs.microsoft.com

Stream processing with Databricks Azure Reference Architectures Databricks Describe Partitions Returns the basic metadata information of a table. Spark by default uses 200 partitions when doing transformations. This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. Replace with the. Databricks Describe Partitions.

From medium.com

Databricks CLI Medium Databricks Describe Partitions Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and. This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when you should use. To use partitions, you define the set of partitioning column when you create a table by including the partitioned by clause.. Databricks Describe Partitions.

From www.databricks.com

Take Reports From Concept to Production with PySpark and Databricks Databricks Describe Partitions This article provides an overview of how you can partition tables on azure databricks and specific recommendations around when you should use. Spark by default uses 200 partitions when doing transformations. Returns the basic metadata information of a table. To use partitions, you define the set of partitioning column when you create a table by including the partitioned by clause.. Databricks Describe Partitions.

From exoxwqtpl.blob.core.windows.net

Partition Table Databricks at Jamie Purington blog Databricks Describe Partitions Replace with the name of your delta table, <<strong>partition</strong>_column> with the name of your partition column, and. To use partitions, you define the set of partitioning column when you create a table by including the partitioned by clause. This article provides an overview of how you can partition tables on databricks and specific recommendations around when you should use. The. Databricks Describe Partitions.

From dxokdcibm.blob.core.windows.net

List Tables In A Database Databricks at Thomas Rawlins blog Databricks Describe Partitions Spark by default uses 200 partitions when doing transformations. To use partitions, you define the set of partitioning column when you create a table by including the partitioned by clause. You can retrieve detailed information about a delta table (for example, number of files, data size) using describe detail. This article provides an overview of how you can partition tables. Databricks Describe Partitions.